NBCU: Nielsen's Total Content Ratings Do 'Harm'

- by Wayne Friedman, December 15, 2016

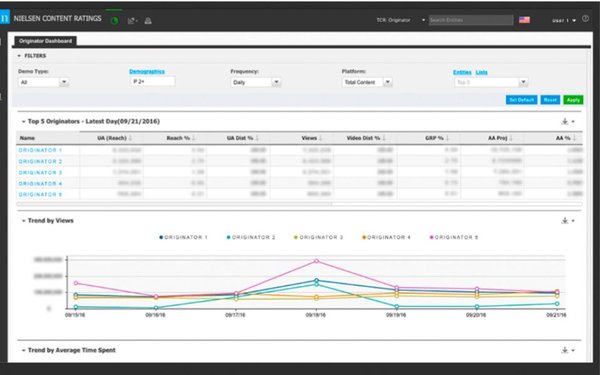

In a letter obtained by Television News Daily, Linda Yaccarino, chairman of advertising sales and client partnerships at NBCUniversal, notes a number of problems with Nielsen's Total Content Ratings (TCR).

Total Content Ratings are needed, she says, but adds: “TCR in its current form fails to deliver on this objective and does much more harm than good. Bottom line, it’s not ready for release.”

Yaccarino then lists the problems. TCR has “limited” participation from the industry, because many leading pay TV and digital operators have not yet incorporated it. Also, TCR uses “disparate digital data collection using a mix of panel census custom measurement (YouTube) that is not yet vetted.”

Other issues cited: VOD measurement comes from panels rather than census-level measurement, and TCR provides incomplete OTT viewership. In addition, TCR has “no quality control measure for digital fraud, viewability, or completion rate.”

NBCU also worries about using Facebook data for “deduplication of audiences that is also not tested.” It believes TCR fails to measure out-of-home viewing. Yaccarino also writes that TCR “lacks standardized reporting hierarchy across all measured entities.”

She concludes: “TCR currently is an incomplete, inconsistent measurement 'ranker' wherein the reported output is fundamentally inaccurate.”

Responding to NBCU, a Nielsen spokesman says: “Total Content Ratings is on schedule to syndicate data on March 1 [2017], at which time Nielsen clients will be able to use the data for external purposes.”

He adds that TCR is not yet in its final release form and should be regarded accordingly. “Up until this time, the data being released to publishers and, subsequently, to agencies is for internal evaluation only.... We continue to enhance and refine our product with ongoing updates as we work with clients during this period of evaluation.

“Nielsen does not stipulate which measurements clients should enable, nor the order in which they should enable them. Total Audience Measurement is designed to provide media owners with utmost flexibility to enable the components based on their business priorities.”

Media executives have been hopeful that TCR would be ready for the next upfront advertising market, in summer 2017.

Brian Wieser, senior research analyst at Pivotal Research Group, says: “In whatever form it takes, we think Nielsen will still be regarded as the industry’s standard measure of video consumption, and that any flaws that may exist in the product at present will eventually be remedied.”

Wieser adds: “Ultimately, the perspectives that matter most here are not those of the networks, but of agencies and marketers, and so far as we are aware, few of those stakeholders have had significant exposure to the TCR product.”

But NBCU is totally cool selling CNBC daytime off of survey numbers from a company we've never heard of.

One would think the biggest complaint is that, aside from in-home "linear TV", Nielsen is not measuring "viewing" at all, just device usage. This is also true of out-of-home measurement, even if PPMs are incorporated into this goulash of methodologies. Does anyone really believe that device usage across all of these venues and "platforms" can be equated one-for-one with in-home TV viewing?

Nobody has cracked the nut... yet. Looks like no one is even close.

Ed, a possible approach is to use "viewers per device" data from a large panel to extrapolate the census-based "device views" into "people viewing" data (being fully aware that people viewing data CAN, and also WILL, be lower than census device data).
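As a rough illustration of that extrapolation, here is a minimal Python sketch. It assumes a single panel-wide ratio of person views to device views; the names `PanelSession`, `viewers_per_device` and `people_viewing_estimate` are hypothetical, and a production system would presumably compute ratios by device type, daypart and demographic rather than one overall figure.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class PanelSession:
    device_views: int  # views logged by the device tag or meter
    person_views: int  # registered panel members credited with viewing

def viewers_per_device(panel: List[PanelSession]) -> float:
    """Panel-wide ratio of person views to device views (hypothetical helper)."""
    total_device = sum(s.device_views for s in panel)
    total_person = sum(s.person_views for s in panel)
    if total_device == 0:
        raise ValueError("panel contains no device views")
    return total_person / total_device

def people_viewing_estimate(census_device_views: int, vpd: float) -> float:
    """Extrapolate census-based device views to people viewing."""
    return census_device_views * vpd

# Illustrative panel only: 40 device views, 30 person views.
panel = [PanelSession(10, 8), PanelSession(10, 12), PanelSession(20, 10)]
vpd = viewers_per_device(panel)                 # 30 / 40 = 0.75
estimate = people_viewing_estimate(1_000_000, vpd)
```

The ratio can exceed 1 where several people share a screen, or fall below 1 where devices run with nobody registered as viewing; the sample panel gives 0.75, so 1,000,000 census device views extrapolate to 750,000 people.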

TCR relies on everyone 'tagging up' (or deploying the SDK) to get a total market picture, but it is early days yet. We're tagged to what I'd guess is around one-third of usage.

But the greatest sin I see is continuing to stack streams up against average minute audience.

John, I believe Nielsen should be testing a system for digital as well as out-of-home that replicates what it does with its peoplemeters: namely, asking people to log in as "viewers" (using prompts) whenever a signal or content is recognized and/or the content changes for a certain amount of time. That's not going to get you commercial "audiences" (nothing will), but it will defuse much of the skepticism about people being counted as "reached" by content when they are neither watching nor paying the slightest attention. Perhaps this is something the CRE might tackle?

Ed, I believe the Nielsen online meter does ask for user registration, and I believe it has an interval-based refresh (just as peoplemeters do after a period of no change in TV tuning). Given that content on the internet changes more often (we 'surf', and content comes in smaller bites), it may be counter-productive to have content-initiated audience prompts.

John, does Nielsen require its digital panel members to indicate that they are "watching" when the device is activated, and what if someone else is using the device? As for channel changes, I realize there will be quite a few per session, but the need to affirm "viewing" seems paramount, and perhaps it could be confined to videos or TV shows/movies of a certain length, say 15 minutes or longer. Without some indicator that a given person is "watching" when content (though not ads) is on the screen, the result will be a significant overstatement of OOH audiences, and who knows what for digital, but certainly something higher than reality.

Ed, yes, the Nielsen meter (browser; I need to check the app) asks for user registration. Multiple people can be registered. I am unsure of the 'reconfirmation interval'. I suspect it is time-based rather than content-based, due to the shorter durations for pages, video, audio etc. That is, it doesn't ask for registration every time there is a change in content (which would be a horrendous user experience). But then again, neither does the People Meter.

John, a few questions. If I understand you, the digital (PC, tablet, smartphone) user registration takes place when the device is turned on and, I assume, a website is accessed. So if I register myself at that time, that's fine. However, the system probably assumes that I am then reached by all content, including ads, for as long as the activity lasts, even though it can involve a number of different sites and pages on those sites. If I were an advertiser, I would regard this as a highly inflationary measurement.

Regarding the TV peoplemeter, it's my understanding that whenever the channel is changed and the set stays tuned to the new channel for a certain amount of time (I don't know exactly what the time frame is), a prompt asks those present to indicate whether they are "watching" the newly accessed content, by which is meant the programming, not the ads. Once a person claims to be watching, unless he/she goes to the trouble of indicating otherwise, even when leaving the room, the system counts every second of all content (programs and ads) as "viewed" until the channel is changed once more. Again, this is a highly inflationary and seller-friendly procedure, particularly when drilling down to "average minute commercial audiences".

Pretty close, Ed. But a lot of tidying up is done in data validation.

I'll explain it as I understand it to be. I'll be getting some more detail soon.

The digital meter can vary. Some are 'browser meters', and some are 'operating system' meters. And of course there are 'app meters' for portable devices such as smartphones and tablets.

I'll try to explain the desktop/laptop meter. Here in Australia we use an OS meter. Basically, once you turn the device on, the meter starts up (this harks back to the days of dial-up internet). The meter then captures all internet-based activity, essentially as URLs (whether invoked by a browser, an external link, etc.), which are sent back to the research company stamped with the time, date and URL.

When the meter starts up it asks the user(s) to register; there must be at least one user registration. The research company holds details of each user, collected during the recruitment and installation process. The meter 'refreshes' the registration at intervals (I'm unsure what they currently are). These generally consist of two types of interval. The first is 'elapsed time', for example every 30 minutes or every hour. The second is a type of 'latency interval', for example when there has been no activity (e.g. no new searches) for 30 minutes. Each prompts for user registration. Overall, this can tend to understate audience, as someone could well be watching a YouTube clip, say to another family member ... hey, come look at this ... and most likely not register them. As a media buyer I'd prefer a low-ball number to provide a buffer for my client.
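The two refresh triggers described above (elapsed time and a 'latency' or inactivity interval) can be sketched as a single check. The thresholds are illustrative assumptions, since the actual intervals are not known, and `should_prompt` is a hypothetical name rather than any vendor's API.

```python
from datetime import datetime, timedelta
from typing import Optional

# Illustrative thresholds only: the real refresh intervals are not known.
ELAPSED_LIMIT = timedelta(hours=1)        # 'elapsed time' refresh
INACTIVITY_LIMIT = timedelta(minutes=30)  # 'latency interval' refresh

def should_prompt(now: datetime,
                  last_registration: datetime,
                  last_activity: datetime,
                  elapsed_limit: timedelta = ELAPSED_LIMIT,
                  inactivity_limit: timedelta = INACTIVITY_LIMIT) -> Optional[str]:
    """Return why the meter should ask users to re-register, or None."""
    if now - last_registration >= elapsed_limit:
        return "elapsed"      # too long since anyone confirmed who is present
    if now - last_activity >= inactivity_limit:
        return "inactivity"   # no new URLs or searches for a while
    return None
```

Checking the elapsed-time rule first means a long-running, busy session is still re-confirmed periodically, while the inactivity rule catches the device left running in an empty room.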

But the biggest issue is how the meter works, and then how that data is validated. Take for example, my PC at the moment. This article is open in MS-Edge along with six other MediaPost tabs. I also have Firefox open with one tab of a local paper, and Chrome open with Mitch Oscar's newsletter in it. I know ... weird ... but it also happens in the real world.

If this PC were metered, all of these pages could be clocking up usage and time. So the important thing for the meter (and far more important than user registration, whether active or passive) is to understand which of my three browsers has the screen focus, and then which tab within that browser has the screen focus.

TBC

So, assuming screen focus is captured by the meter, it can either send just the 'focus data', or send all open-tab data and let the validation procedure sort it out (I prefer the latter, as you can always re-process if you find a stuff-up, change a parameter, or test new parameters). The validated data then gives you a temporally linear file of screen-focused online activity.
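One way to picture the 'send everything, validate later' option is a validation step that keeps only screen-focus changes and turns them into a non-overlapping timeline. This sketch assumes the meter logs a focus-change event with a combined browser/tab identifier; `FocusEvent`, `Credit` and `credit_focused_tabs` are hypothetical names, not part of any real meter's output format.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class FocusEvent:
    t: float      # seconds since the meter started
    tab_id: str   # hypothetical id combining browser and tab, e.g. "edge:3"

@dataclass(frozen=True)
class Credit:
    tab_id: str
    start: float
    end: float

def credit_focused_tabs(events: List[FocusEvent], session_end: float) -> List[Credit]:
    """Build a temporally linear, non-overlapping file of focused activity.

    Each focus event credits its tab until the next focus change (or the end
    of the session), so background tabs never accumulate time and only a
    single tab is credited at any moment.
    """
    ordered = sorted(events, key=lambda e: e.t)
    nexts: List[Optional[FocusEvent]] = [*ordered[1:], None]
    credits: List[Credit] = []
    for current, nxt in zip(ordered, nexts):
        end = nxt.t if nxt is not None else session_end
        if end <= current.t:
            continue  # zero-length focus, or an event after the session ended
        if credits and credits[-1].tab_id == current.tab_id and credits[-1].end == current.t:
            # Merge back-to-back intervals on the same tab.
            credits[-1] = Credit(current.tab_id, credits[-1].start, end)
        else:
            credits.append(Credit(current.tab_id, current.t, end))
    return credits
```

Because each interval runs only until the next focus change, the credited durations add up to the session length, so several open browsers can never be credited at the same time. Keeping the raw events and re-running this step is what makes re-processing with new parameters cheap.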

Further, an OS meter can also monitor mouse and keyboard usage to determine whether a person is at the device; no activity is a reason to prompt for re-registration. That is, the browser tab(s) may have seen no activity, but that doesn't mean there has been no device activity. The trick is deciding after what interval of no device activity (mouse or keyboard) to prompt. Too short and you annoy panellists; too long and you can let invalid 'activity' through. In validation, a period where device activity = Yes but browser activity = No means you can 'remove' some, all or none of the browser duration.
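The inactivity trimming can be sketched in the same spirit. This assumes mouse and keyboard inputs arrive as timestamps in seconds and that each input implies presence for a fixed idle limit; it applies the 'remove all' option, and the names `presence_windows` and `credited_seconds` are hypothetical.

```python
from typing import List, Tuple

Window = Tuple[float, float]

def presence_windows(inputs: List[float], idle_limit: float) -> List[Window]:
    """Merge mouse/keyboard timestamps (seconds) into windows of presumed presence.

    Assumption: each input implies someone is at the device for idle_limit
    seconds afterwards; a longer gap counts as a known period of inactivity.
    """
    windows: List[Window] = []
    for t in sorted(inputs):
        start, end = t, t + idle_limit
        if windows and start <= windows[-1][1]:
            # Overlaps the previous window, so extend it.
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))
        else:
            windows.append((start, end))
    return windows

def credited_seconds(start: float, end: float, windows: List[Window]) -> float:
    """'Remove all' policy: keep only browser time that overlaps presence."""
    return sum(max(0.0, min(end, w_end) - max(start, w_start))
               for w_start, w_end in windows)
```

For example, with a 300-second idle limit and inputs at 0, 100, 200 and 1,500 seconds, a 30-minute focused interval is credited with 800 seconds rather than 1,800. A 'remove some' policy could instead credit a share of each idle gap.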

In essence, as long as you only allow a SINGLE browser tab to be credited at any one time (and remove known periods of inactivity), the odds are it is not wildly inflationary, and given that multiple registrations are probably under-reported, it is probably line-ball.

TBC.

Regarding TV, there are several methods. The most intrusive is to re-register at any change event (watch your panel cancel if you do that!). Alternatively, you can re-register after a period of stasis following a series of channel change events (i.e. something like 1-5 minutes after they have settled on a new channel), re-register after a set period (say every hour, which is annoying), re-register after a set period since the last registration (again, say an hour), or re-register after a set period of tuning stasis with no viewer status changes (e.g. 65 minutes, longer than a typical programme). I favour the 1-5 minutes after a channel change AND no channel or viewer change in the past 65 minutes as the triggers for re-registration.
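Read as two triggers running side by side, the favoured TV rule might look like the sketch below. The 3-minute settle time is an assumed point inside the 1-5 minute range, times are minutes on an assumed session clock, and `MeterState` and `prompt_reason` are hypothetical names; no actual peoplemeter logic is being described here.

```python
from dataclasses import dataclass
from typing import Optional

SETTLE_MINUTES = 3.0   # assumed point inside the 1-5 minute range
STASIS_MINUTES = 65.0  # longer than a typical programme

@dataclass
class MeterState:
    last_channel_change: float  # minutes on the session clock
    last_viewer_change: float   # last time anyone logged in or out
    prompted_since_channel_change: bool = False

def prompt_reason(now: float, s: MeterState,
                  settle: float = SETTLE_MINUTES,
                  stasis: float = STASIS_MINUTES) -> Optional[str]:
    """Return which re-registration trigger fires at `now`, if any."""
    # Trigger 1: the household has settled on a new channel.
    if not s.prompted_since_channel_change and now - s.last_channel_change >= settle:
        return "settled"
    # Trigger 2: long tuning stasis with no channel or viewer-status change.
    if now - max(s.last_channel_change, s.last_viewer_change) >= stasis:
        return "stasis"
    return None
```

In use, the caller would set `prompted_since_channel_change` back to False on every channel change and to True once the settle prompt fires, and update `last_viewer_change` whenever anyone logs in or out (including in response to a prompt), so the stasis trigger re-arms after each answer. Rapid channel surfing never triggers a prompt, because each change restarts the settle clock.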

Regarding that being inflationary around ad-breaks, some years ago I analysed a week's viewing for every ad-break on the commercial stations in Sydney, and on a total viewing basis I could see dips of between 4% (start and end of break) and 8% (middle of break), so people were definitely logging out and back in again. I'm not sure that everyone does, but many do. If you use the 8% figure (roughly 1-in-12) and work on 4 breaks in a one-hour programme, that would mean 3 hours between loo breaks, which doesn't seem untoward. While 'everyone goes to the loo in ad-breaks', very few go to the loo in every ad-break.
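The loo-break arithmetic can be checked with a few lines of Python. The key assumption, implied but not stated in the comment, is that the viewers who step out rotate evenly, so an 8% dip means any one viewer leaves in roughly one break in twelve; `hours_between_exits` is a hypothetical helper name.

```python
def hours_between_exits(dip_share: float, breaks_per_hour: float) -> float:
    """Average hours between one viewer's ad-break exits.

    Assumes the viewers who leave rotate evenly, so if dip_share of the
    audience steps out in each break, any one viewer leaves once every
    1 / dip_share breaks.
    """
    if not 0 < dip_share <= 1:
        raise ValueError("dip_share must be a fraction in (0, 1]")
    breaks_between_exits = 1.0 / dip_share
    return breaks_between_exits / breaks_per_hour

# The comment's figures: an 8% mid-break dip (about 1-in-12), 4 breaks an hour.
estimate = hours_between_exits(1 / 12, 4)  # roughly 3 hours
```

With 1-in-12 and 4 breaks an hour this gives about 3 hours; using exactly 8% gives 12.5 breaks between exits, or a little over 3 hours.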

I hope this helps.

Thanks for your replies, John. They are very illuminating. Regarding out-of-home TV, where it is proposed to use PPMs to measure viewing, I still maintain that this is a potentially very inflationary measurement of program viewing and especially of commercial viewing. The mere fact that the device can pick up an embedded audio signal, without any other indication that the person wearing or carrying the PPM is "watching", is not sufficient grounds to assume that viewing is taking place. While the amount of inflation will vary from location to location as well as by type of program content, it is probably significant, and advertisers should be aware of this. One bit of evidence on this subject comes from MRI, which asks people who claim to have watched TV shows in the past week how attentive they were the last time they saw the show and where they were. While the OOH portion of the audience is only 2-3% on average, I have aggregated the findings across many programs to get a more stable result. The findings are sobering: a typical OOH viewer is about 45-50% less likely to claim he/she was fully attentive than an average in-home viewer.

Returning to our discussion, when it comes to TV, while peoplemeter panel members do indicate that they are watching program content at the outset of a channel selection, it is obvious that, aside from greater dial switching and sound muting during commercials, some of the program viewers leave the room during an average commercial break, while many of those who claimed to be watching the show and remain present are engaged in other activities or are paying no attention to the ads. Again, there is evidence on this subject from many observational, albeit old, studies, which we review in our annual "TV Dimensions 2017". Aside from camera and "spy" studies, these include heat sensor findings by R.D. Percy which showed that 10-20% of the TV sets in use played to empty rooms on an average minute basis. Also, if one studies the many TV ad recall findings, all using prompts or aids to stimulate the viewers' memory, the highest recall scores never go much beyond the 50-60% level. If 90-95% of the "audience" is really "watching" an average commercial, why don't some of the more outstanding and memorable ads peak at recall levels of 75-90% in these studies, at least once in a while? I believe the answer is that a significant portion of the assumed commercial audience is actually neither present nor watching, hence the ad recall "ceiling" found in almost all studies of this nature.

Again, continuing: while it is convenient to rely on device usage in cross-platform measurement, we must bear in mind recent ad recall studies (for matched sets of ads) that indicate much better results for in-home TV than for digital venues. This, plus common sense, tells us that we can't equate ad-on-screen measurements with ad impact across the full spectrum of platforms. There will be major variations by type of campaign, demographic, program/editorial content, location, etc. I recognize that it is not feasible to require panel members to indicate or affirm their status as "viewers" every time a channel is sampled or a website is accessed, let alone whenever ads are on the screen. So I have no pat solutions to offer, except to warn advertisers that they are ill-advised to treat all of these viewing opportunities as having equal value and to pay CPMs based simply on commercial minute ratings, as if those ratings were what they are presumed to be. They aren't.

OOH TV is something I have wrestled with for years.

You are correct that, in the main, mere presence within earshot is inflationary. If you are in a pub or a club and happen to walk near a TV while you are getting a drink, that shouldn't qualify you as a viewer. There is a converse situation, though: the Super Bowl (or in Australia, our Grand Final days or the Melbourne Cup). You can have hundreds of people crowded around numerous screens, the sound often barely audible over the cheering crowd, and PPM would understate that.

My gut feel is that half the attention level for an OOH viewer is about right, not that I put a lot of weight on such recall studies.

But the issue, to me, is viewing choice. When you go into a public place there is no viewing choice, so it is actually a very different viewing situation. Woe betide the person in the club who wants to switch the channel from the Super Bowl to Jeopardy!

For that reason we define "TV" as viewing in a private environment (for AC TVs). For example, our universe excludes nursing homes, hospitals, waiting rooms, pubs, clubs, offices, schools, universities etc. That definition lowballs the TV ratings. In essence, when I see a TV rating I know the real audience is higher, purely because the universe definition is restricted.

As an example, our Melbourne Cup (run on the afternoon of the first Tuesday of every November) is locally dubbed "The Race That Stops A Nation". This year it attracted a 2.8m audience (population around 24m). But that is only people who watched at home. Basically every office would have had multiple TVs on showing the race (around 3 mins 20 secs), with hardly anyone at their desks working. If the office didn't have a TV on, the staff would be down at the local watering hole for a late lunch to watch the race; skeleton staff is the order of the day. There are also 100k people at the race itself (OK, it's locally declared a public holiday as well). Consumer research estimates the actual viewing audience at around 5.6m, double the ratings.

So my take is that it simply is not commercially viable to deploy PPMs or the like for such special events, and that the audience for regular programming won't be highly engaged ... so why bother? Just feel comfortable that the actual audience would be higher than what you are paying for.

This is the elephant in the room.

First, having done some work with neuroscience studies, and having followed Robert Heath's work on Low Involvement Processing Theory for years, I must say I don't place great store in claimed and recall-based attention studies, as (IMHO) they always under-report.

I recall a UK-based recall study that used hypnotism (we're not allowed to use that here under AMSRS rules), involving a UK TV ad for beer that featured a dancing bear. These days we'd say it 'went viral'; it was hugely popular. The unprompted recall was very good. The prompted recall was very high. One respondent not only had no prompted recall but was hesitant about recalling the brand (it was a leading beer). He was hypnotised and could do the bear dance embarrassingly well! So one has to ask: how did he know the dance if he had no recall? Basically, his conscious brain had blocked it out, but his subconscious had near-perfect recall.

I know that was a one-off, but I do think we put a lot of store in recall studies. I am also sure there are recall numbers that are higher than they should be (I had a beauty where OOH street furniture was mistaken for a TV ad!).

But I also think the client and the creative agency have a role to play here. A programme might have (say) an audience of 10m people; it goes to a break, and during the ad-break the minute-by-minute audience might drop to (say) 8m, then revert to 10m when the ad-break ends. That is hardly the fault of the broadcaster. I also think that penalising broadcasters on their ad rates because of poor ads is a bit rich (and no, I have never worked for a broadcaster!)

And finally, in the cross-media studies I have been involved with for advertised brands, the efficacy of the medium and the ads is flushed out as part of the analysis.

When I was at OMD, around the start of this century, we had a product called Lighthouse. It was a micro-economic advertising effectiveness modelling tool, bespoke to the advertiser and the brand. [One of the biggest learnings was that it was ill-advised to apply learnings and strategies across market segments and brands, despite global marketers thinking that one strategy could apply across their entire portfolio.]

In essence the software was 'data agnostic'. You could enter TV ratings, OOH traffic counts (i.e. not people-based), cinema attendance, pricing data, weather, competitor data, social events (Xmas) ... whatever you thought MAY drive sales.

In essence the software would work along the lines of ... well, I don't really know what 180 TV GRPs is, or a DPS in a newspaper, or a 100-spot schedule with 5 live reads on the leading radio station ... but I do know that when you use all three in combination with 1 million banner ads, sales go up between x% and y% every time. If you drop the price 5% relative to the category average, sales go up between x1% and y1% ... or you could reduce the TV or radio spend and still achieve x% to y% because of the price support.

What I found was that consistency of measurement was imperative.

This is all good for advertisers who do deep-dive longitudinal analyses because they can use those learnings in their strategic planning.

But (back to your point) it is no real use for clients who don't.

So how did we get around this? By applying 'effectiveness discount factors' to each medium's 'people metric'. Yes, purely subjective (based on their recall tracking surveys if they did them) but better than not taking them into account. So we had guidelines for a brand that might be along the lines of ... you need 2 radio listeners to equal 1 TV viewer, but 5 OOH lookers to equal 1 TV viewer.
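Applying the discount factors is simple arithmetic: divide each medium's people metric by the number of people needed to equal one TV viewer, then sum. The ratios below only mirror the example guideline in the comment (2 radio listeners or 5 OOH lookers per TV viewer); they are not real factors for any brand, and `tv_equivalent` is a hypothetical name.

```python
from typing import Dict

# Hypothetical brand guideline mirroring the comment's example:
# people needed in each medium to equal one TV viewer.
PEOPLE_PER_TV_VIEWER: Dict[str, float] = {"tv": 1.0, "radio": 2.0, "ooh": 5.0}

def tv_equivalent(audiences: Dict[str, float],
                  ratios: Dict[str, float] = PEOPLE_PER_TV_VIEWER) -> float:
    """Convert each medium's people metric to TV-viewer equivalents and sum.

    Raises KeyError for a medium with no agreed discount factor.
    """
    return sum(people / ratios[medium] for medium, people in audiences.items())
```

For instance, 1m TV viewers, 600k radio listeners and 2m OOH lookers would count as 1.7m TV-equivalent viewers under these assumed ratios. Raising an error for an unlisted medium, rather than defaulting to 1, avoids silently treating an undiscounted medium as equal to TV.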

The ideal is to have all this measured and quantified at a category or brand effectiveness level, but that is not likely in my lifetime!

It is often attributed to Einstein that he said ... 'not everything that can be counted counts, and not everything that counts can be counted'. If he didn't say it, he should have!