Commentary

Data In, Garbage Out: How To Avoid Turning Your Targets Into Trash

- by Joe Mandese @mp_joemandese, May 18, 2020

One of the unintended consequences of the ad industry’s shift from audience measurement to audience data for targeting media has been a complete and utter breakdown in standardization.

As weak as audience measurement proxies like demographics and GRPs have been for understanding who consumers actually are, they have at least served as a common currency that people on the supply and demand sides could plug their own values and attributes into.

But the rampant availability of new sources of audience data -- and of data management platforms to organize them -- has created a kind of “Wild West” for audience targeting that requires buyers and sellers of media to think not just about the outputs, but also about the inputs that led to them.

Making matters worse, many of the biggest and best-in-class of these data platforms -- companies like Acxiom, Epsilon, and Merkle -- are now owned by the big agency holding companies, raising profound questions about the “neutrality” of the data and requiring clients to think not just about its representativeness, but also about other agendas that may be at play.

Last week, the Association of National Advertisers (ANA) issued a “Buyer’s Guide” to help big advertisers understand how to vet sources of audience data, and while it is a good starting point, it is just the beginning of establishing best practices and industry norms.

What the ad industry really needs is some new standards. The last time that kind of Wild West existed for the ad industry -- the TV ratings scandals of the late 1950s and early 1960s -- it led to Congressional hearings and a Department of Justice review that gave birth to a new self-regulatory authority -- the Media Rating Council -- that helped maintain audience measurement standards for half a century.

The MRC reportedly is beginning to look at what kind of role it can play to help marketers create some standards for vetting audience data, but in the meantime, some well-regarded industry entrepreneurs have stepped forward to fill the gap.

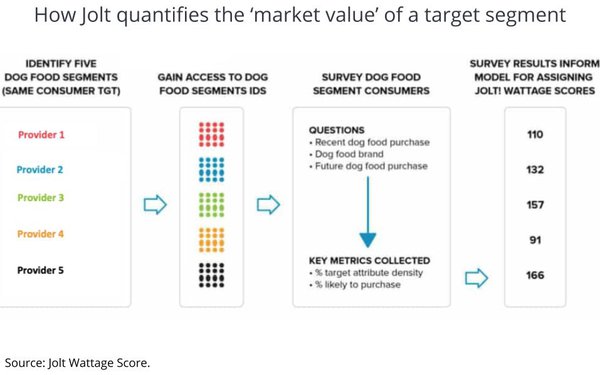

Over the past year, three of the ad industry’s leading research authorities -- Sequent Partners, Rubinson Partners, and Pre-Meditated Media -- have come together to form a new ad hoc consultancy to do just that. The consortium, dubbed Jolt!, uses tried-and-true scientific research methods to evaluate and benchmark the accuracy and effectiveness of a source of audience data and to determine the explicit ROI of using it to buy media.

In many cases, the consortium takes a “sub-sample” of the audience data a client intends to use and conducts its own research, recontacting users in the sample to validate their composition and attributes.

To date, the Jolt! researchers have found profound variances between the projected target audience delivery and the actual audience delivered.

For hard-to-measure segments, including a multicultural segment like Hispanics, the discrepancies could range from underdelivering 25% to 70% of a brand’s target audience impressions, says Sequent Partners’ Alice Sylvester.

“That’s one-third to two-thirds of your impressions,” she says, adding that the effective CPMs -- or costs-per-thousand -- of media bought on those estimates could be 50% to 300% higher than what a marketer or agency thought it was paying.
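The link between missing impressions and inflated CPMs is simple division. As a minimal sketch, assuming the buyer pays for the full planned impression volume at the planned CPM, but only a fraction (1 - u) of those impressions actually reach the target audience, the effective cost per thousand target impressions is the planned CPM divided by (1 - u). The function names below are illustrative, not a Jolt! or ANA methodology:

```python
def effective_cpm(nominal_cpm: float, underdelivery: float) -> float:
    """Cost per thousand *target* impressions actually delivered.

    Illustrative assumption: the full budget is spent at nominal_cpm, but
    only (1 - underdelivery) of the impressions reach the intended target.
    """
    if not 0 <= underdelivery < 1:
        raise ValueError("underdelivery must be in [0, 1)")
    return nominal_cpm / (1 - underdelivery)


def cpm_markup(underdelivery: float) -> float:
    """Percentage by which the effective CPM exceeds the planned CPM."""
    return (1 / (1 - underdelivery) - 1) * 100


# Underdelivery shares mentioned in the column: 25%, one-third, two-thirds, 70%.
for share in (0.25, 1 / 3, 2 / 3, 0.70):
    print(f"{share:.0%} underdelivered -> effective CPM {cpm_markup(share):.0f}% higher")
```

Under this assumption, losing one-third of target impressions makes each delivered target impression cost 1.5 times the planned price (50% more), and losing two-thirds makes it cost three times the planned price.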

That’s one of the main reasons Jolt! has been working with the Alliance for Inclusive and Multicultural Marketing (AIMM), a division of the ANA focused on ensuring the representation of multicultural segments in advertising and media.

It’s hard, roll-up-your-sleeves kind of work, but the consortium is used to those kinds of projects. For the past several years, Sequent Partners has been evaluating a similar Wild West of attribution providers, and it holds regular summits and publishes directories to help the industry understand those providers’ approaches and claims.

So it makes sense that they would make the next big leap directly into evaluating the data itself.

It may be an old metaphor, but I believe the early computer programming concept of GIGO -- or garbage in, garbage out -- applies to the new world of audience data targeting. Because if Jolt! has proven anything, it is that when it comes to data, it’s time to take out the trash.

Joe, there were no TV rating "scandals" in the late 1950s and early 1960s. What happened was a general negative reaction against the TV networks, which had taken control of their prime-time content and were flooding their schedules with Hollywood-manufactured westerns, private eye, police and action-adventure series featuring excessive doses of violence---hence Newton Minow's famous description---which covered all sorts of TV content, including the ads---of TV as a "vast wasteland". Needless to say, Nielsen got blamed for aiding and abetting this violence-prone shift in TV programming on the assumption that its ratings must be wrong---based on small and unreliable samples, don't you know---and that these inaccurate findings must be misleading the networks into thinking that viewers were really watching such shows in massive numbers. So Congress started an investigation that called Nielsen on the carpet, as well as some other researchers, and huffed and puffed trying to uncover proof that the ratings were not providing a true picture of people's viewing behavior. But nothing of any consequence was found.

As it happened, this is when I met Erwin Ephron, who, at the time, was working in Nielsen's PR department. We commuted on the NY Central between Manhattan and Riverdale and spent much time discussing the Congressional investigation. I took the trouble to check the local market ratings put out by The American Research Bureau---later known as Arbitron---as well as its once-a-month national diary findings to see how they compared with Nielsen's national ratings, and guess what---they produced nearly identical findings on virtually every show in all dayparts. In other words, while there were cases of sloppy practices and some tightening up was mandated, there was no "scandal".

The final report from the House committee filled several very thick volumes and I read it all. The networks weren't being misled by faulty research by Nielsen. People by the millions actually liked shows like "Gunsmoke", "The Untouchables", "Wyatt Earp", "77 Sunset Strip", "M Squad", etc. The problem was that there were just too many of them, including many "me too" copies. And once the glut became too large, Nielsen correctly showed that many of these shows were not drawing high ratings---and they were cancelled. It's all in my book, "TV Now and Then", Media Dynamics Inc, 2015.

@Ed Papazian:

https://en.wikipedia.org/wiki/1950s_quiz_show_scandals

http://mediaratingcouncil.org/History.htm

Joe, your piece mentions "TV rating scandals" which is not the same thing as the rigged quiz show scandals of the late 1950s which were real enough---also covered in my book.

@Ed Papazian:

Show producers, who had legally rigged the games to increase ratings but did not want to implicate themselves, the show sponsors or the networks they worked for in doing so, categorically denied the allegations. After the nine-month grand jury, no indictments were handed down and the judge sealed the grand jury report in Summer 1959.[4] The 86th United States Congress, by then in its first session, soon responded; in October 1959, the House Subcommittee on Legislative Oversight, under Representative Oren Harris' chairmanship, began to hold hearings investigating the scandal. Stempel, Snodgrass and Hilgemeier all testified.[12] Van Doren, initially reluctant, finally agreed to testify also. The gravity of the scandal was confirmed on November 2, 1959 when Van Doren said to the Committee in a nationally televised session that, "I was involved, deeply involved, in a deception. The fact that I, too, was very much deceived cannot keep me from being the principal victim of that deception, because I was its principal symbol."[13]

Joe, I happen to be very familiar with the great rigged quiz show scandal, as BBDO had the mega hit "The $64,000 Question" and its spin-off, "The $64,000 Challenge", at the time, and I---as a very young fellow---was interviewed by the House investigators about our shows. It was an interesting period; however, it was not nearly as disruptive for most advertisers, nor for TV in general, as what's happening now. All of the advertisers who sponsored these shows claimed that they had no idea what was going on---and so did the agencies who looked after their clients' programs. So the producers took the blame. I happen to be aware---by informed hearsay---of one deal whereby the sponsor paid the producer an amount---over the base level---whenever the ratings rose---but, of course, the sponsor had no idea that the producer might be rigging things to secure higher ratings. If you believe that, then you believe that elephants might be able to fly by flapping their big ears. The 1994 movie "Quiz Show" is a surprisingly accurate depiction of the rigged quiz show scandal and how it played out.