New YouTube Metric Shows Percentage Of Views From Content That Violates Policies

By Karlene Lukovitz (@KLmarketdaily), April 7, 2021

In its latest effort to reassure brand-safety-focused advertisers, the public and lawmakers, YouTube will begin publicly releasing a metric that quantifies what percentage of views come from content that violates its guidelines.

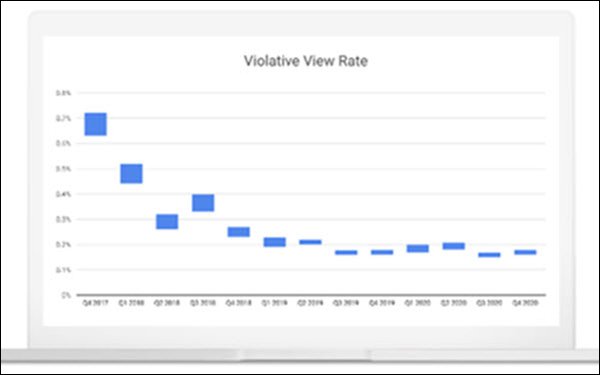

The metric, called the Violative View Rate (VVR), has actually been tracked since 2017 and used as the company’s primary “responsibility work” metric, Jennifer O’Connor, YouTube’s director of trust and safety, said in a blog post.

The most recent VVR is 0.16% to 0.18%, meaning that out of every 10,000 views on YouTube, 16 to 18 come from content that breaks YouTube’s rules before it is taken down.

Going forward, the VVR will be updated on a quarterly basis in YouTube’s Community Guidelines Enforcement Report.

Why wait several years to begin releasing this metric?

There is, of course, unprecedented pressure from lawmakers and the public over the effects of social platforms’ content and content-moderation practices, as well as calls to break up the tech giants or greatly reduce their power.

In addition, the current VVR percentage “is down by over 70% when compared to the same quarter of 2017, in large part thanks to our investments in machine learning,” according to O’Connor.

And while YouTube cautions that the VVR will fluctuate over time (rising temporarily after a policy update, for instance, as its systems catch content newly classified as “violative”), the company seems confident there won’t be any major surprises.

To calculate the VVR, YouTube takes a sample of videos and has its content reviewers assess which of them do and do not violate the platform’s policies.
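YouTube has not published its exact estimator, so what follows is only a minimal sketch under stated assumptions. It treats each sampled item as one randomly chosen view whose video a reviewer has labeled violative or not, and it uses a Wilson score interval (chosen here for illustration, not YouTube’s stated method) to turn the sample into a range like the one YouTube reports. The function name `violative_view_rate` and the sample data are hypothetical.

```python
import math
from collections.abc import Sequence


def violative_view_rate(labels: Sequence[bool], z: float = 1.96) -> tuple[float, float, float]:
    """Estimate the share of views that landed on violative content.

    Assumption (hypothetical): each entry in `labels` is one randomly sampled
    view, and True means a reviewer judged the video behind it violative.
    Returns (point estimate, lower bound, upper bound); the bounds are a
    Wilson score interval, about 95% confidence with z = 1.96.
    """
    n = len(labels)
    if n == 0:
        raise ValueError("sample must contain at least one view")
    k = sum(labels)  # number of sampled views on violative videos
    p = k / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical sample: 1,700 violative views out of 1,000,000 sampled views.
labels = [True] * 1_700 + [False] * 998_300
rate, low, high = violative_view_rate(labels)
print(f"VVR {rate:.2%} (95% CI {low:.2%} to {high:.2%})")
# VVR 0.17% (95% CI 0.16% to 0.18%)
print(f"about {rate * 10_000:.0f} of every 10,000 views")
```

Because the sample in this sketch is drawn from views rather than from videos, a violative video that racks up thousands of views before removal shows up in it far more often than one that got only 100 views, which is the distinction O’Connor draws in her comments on turnaround time.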

“We believe the VVR is the best way for us to understand how harmful content impacts viewers, and to identify where we need to make improvements,” says O’Connor, who notes that sampling provides a more comprehensive picture, including violative content that YouTube’s systems might be missing.

“Other metrics, like the turnaround time to remove a violative video, are important. But they don't fully capture the actual impact of violative content on the viewer. For example, compare a violative video that got 100 views but stayed on our platform for more than 24 hours with content that reached thousands of views in the first few hours before removal. Which ultimately has more impact?”

Over the years, YouTube has had numerous run-ins with advertisers and politicians over content run amok. Many advertisers left YouTube in 2017, but most had returned by early 2019, when revelations about pedophiles commenting on videos of children triggered another major boycott. More recently, the platform, like others, has struggled with false, violence-inspiring and anti-vaccine content, all of which also keeps advertisers on edge.

YouTube says that since 2018, when it introduced the guidelines enforcement report, it has removed over 83 million videos and 7 billion comments for violating its guidelines. It also reports that machine learning tech has allowed it to detect 94% of all violative content via automated flagging, with 75% removed before 10 views have accumulated.

The platform is not, however, planning to disclose the actual number of views that violative videos receive before their removal.

Despite its catch-and-kill progress, total views of problematic videos “remain an eye-popping figure because the platform is so big,” sums up The New York Times.

Nor does YouTube, which at least for now calls all the shots on its content policies and on which videos violate them, plan to have its violative-content and removal data independently audited, although O’Connor told the Times that she “wouldn’t take that off the table yet.”