I created this image for a post called "Synthesis" on a graphically illustrated strip about "Dark Media" on my personal b/log.

It begins with me wondering what part of my brain my thoughts are coming from and whether any come from the synthetic part. The part that's made of plastic.

The post is about scientific research estimating that 0.5% of our brains are now made of the nano- and microplastics our bodies absorb from our polluted environment.

The researchers estimate the amount has increased 50% since they benchmarked it in 2016.

If that exponential rate were to continue, it would take a little more than a century for human brains to be made entirely of recycled plastic.
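For anyone who wants to check that arithmetic, here is a rough back-of-the-envelope sketch. It is not the researchers' method. It simply assumes the 50% increase keeps compounding over equal periods, and the 8-year period length is an assumption, since all that is stated here is the 2016 benchmark year. The function name is hypothetical.

```python
import math


def years_until_full(current_share, growth_per_period, period_years):
    """Years for current_share to reach 1.0 (100%) under compound growth."""
    # Number of compounding periods needed: share * (1 + g)^n = 1
    periods = math.log(1.0 / current_share) / math.log(1.0 + growth_per_period)
    return periods * period_years


# 0.5% share and 50% growth are the figures cited above.
# The 8-year period is an assumption made only for this illustration.
years = years_until_full(current_share=0.005, growth_per_period=0.50, period_years=8)
print(f"About {years:.0f} years")
```

Under those assumptions it works out to roughly 13 compounding periods, or about 105 years. A longer assumed period stretches the timeline proportionally.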

Welcome to the age of Homo Polyethylene.

The reality is it is more likely our brains will be composed increasingly of silicon.

Not just from chips people like Elon Musk (Neuralink) and Sam Altman (Merge Labs) want to put in people as part of an emerging industry of brain-computer interfaces, but because we already offload a considerable amount of our brain's processing power to silicon-based machines, which ultimately determine what data our organic brains actually process. You know, the information that determines how we feel, think and behave. Even what we believe in.

And increasingly, that is synthetic too.

In my last 3.0 post I made the case that "Generation AI" is a better descriptor than "Generation Alpha" for our next generation.

In a post published this morning on "Planning & Buying Insider" I make the case that planning, buying and marketing research will increasingly be based on synthetic information generated by AI, including synthetic versions of the human consumers the research is based on.

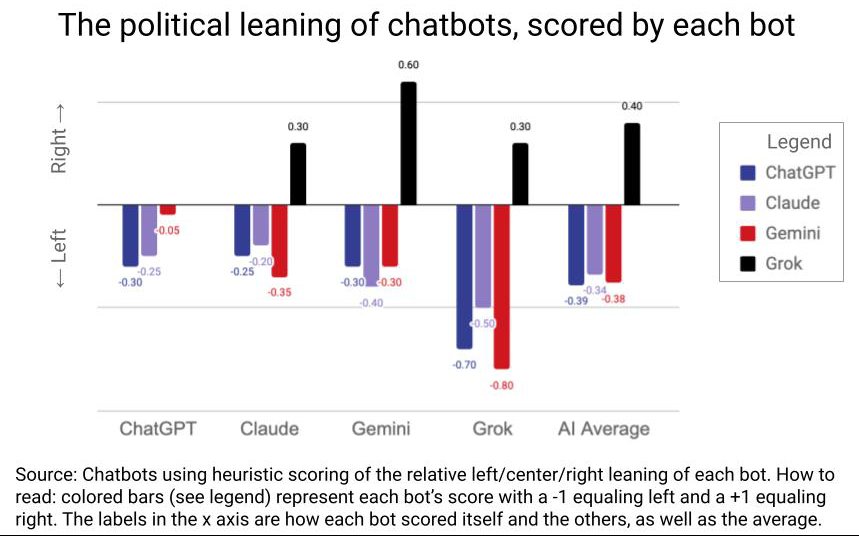

In a post also published this morning on "Red, White & Blog," I used a battery of four AI chatbots I subscribe to -- ChatGPT, Claude, Gemini and Grok -- to help me score the history of political bias of American media -- from 1776 to the present.

I ended it with a prompt asking each of the AIs to score its own political bias, as well as that of its peers, and those scores are what I'm publishing below.

I began this analysis before seeing my colleague Laurie Sullivan publish a "Performance Marketer Insider" column about OpenAI recently testing the political bias of ChatGPT, but one of the things I learned from all of the chatbots was that there has been a long history of studies analyzing the political biases of chatbots, which is something each of them drew from as part of the basis of their scoring.

The chart below shows how each of them scored themselves and each other utilizing a heuristic scoring model with a -1 being left-leaning, a zero being center and a +1 being right-leaning.

While the ranges vary, it's interesting to note that all of the chatbots were directionally aligned in terms of their perceived political biases: ChatGPT, Claude and Gemini are left-leaning, while Grok is clearly right-leaning.

I did this analysis mainly to add some perspective to the validity -- or lack thereof -- of the American media bias analysis on today's "Red, White & Blog," but I thought it would be good to publish the chatbot bias findings here, because if it's true about politics, I imagine it's true about any and all of the biases large language models and other forms of AI develop based on the data they are trained on and the people who engineer them.

I've already written here about Elon Musk's engineering and reengineering of Grok.

And I've also written about why the ingrained biases of AIs matter to brand marketers and their agencies, including the AIs' own brand affinities. This is something that will become increasingly important over time as we shift to an era of agent-to-agent marketing, and try to figure out "AI relationship management" (ARM).

If this post seems a little odd to you, remember it probably came from just 0.5% of my brain.