In partnership with top third-party fact-checking organizations, Facebook is launching a full-frontal attack on so-called “fake news.”

Soon, if these partner organizations, each of which is a signatory of Poynter's International Fact Checking Code of Principles, identify a story circulating on Facebook as phony, it will be labeled as disputed.

Such stories will also be accompanied by links to corresponding articles that clearly explain why they have been flagged.

Disputed stories will also appear lower in Facebook's News Feed rankings and will no longer be eligible to be featured in an ad or promoted by a publisher.

Analysts on Thursday said Facebook has the right idea by going after publishers' ad revenue.

"The incentive for most fake news creators is financial rather than philosophical," said Susan Bidel, an analyst at Forrester Research. "If there is no financial gain to be had from creating and circulating fake news, it will dry up and the digital ecosystem will be improved."

Users will not be blocked outright, however. "It will still be possible to share these stories, but you will see a warning that the story has been disputed as you share," Adam Mosseri, VP of News Feed at Facebook, notes in a new blog post.

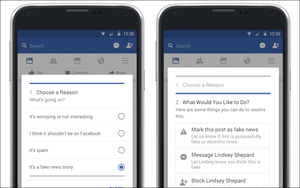

Facebook will rely on its community of users -- along with other “signals” -- to send suspect stories to fact-checking organizations.

Among other "signals," the social giant believes it can identify fake news by tracking certain sharing and readership patterns.

"We've found that if reading an article makes people significantly less likely to share it, that may be a sign that a story has misled people in some way," according to Mosseri.

As such, "we're going to test incorporating this signal into ranking, specifically for articles that are outliers, where people who read the article are significantly less likely to share it," Mosseri explained.

As Bidel suggested, Mosseri and his team have found that much fake news is financially motivated.

"Spammers make money by masquerading as well-known news organizations ... They post hoaxes that get people to visit their sites, which are often mostly ads," Mosseri notes.

Therefore, Facebook is taking several actions to reduce this financial incentive.

On the buy side, the company has eliminated the ability to spoof domains, which is expected to reduce the prevalence of sites that pretend to be real publications.

On the publisher side, Mosseri and his team are currently analyzing publisher sites to detect where policy enforcement actions might be necessary.

Facing mounting criticism for failing to curb phony news, Facebook CEO Mark Zuckerberg recently called for patience and understanding. "We take misinformation seriously," Zuckerberg asserted in a blog post. "We've made significant progress, but there is more work to be done."

The young mogul said Facebook has historically relied on its community of users to point out inaccurate news content, but admitted that the task has become increasingly "complex, both technically and philosophically."

“We believe in giving people a voice, which means erring on the side of letting people share what they want whenever possible,” Zuckerberg explained.

“We need to be careful not to discourage sharing of opinions or to mistakenly restrict accurate content.

"We do not want to be arbiters of truth ourselves, but instead rely on our community and trusted third parties." Further deflecting blame, Zuckerberg insisted the percentage of misinformation on Facebook remains "relatively small."

Moving forward, areas of focus will include stronger detection measures, such as better classification of "misinformation," he said.

Following last month's presidential election, Zuckerberg dismissed criticism that Facebook contributed to Donald Trump's victory.

“Personally, I think the idea that fake news on Facebook, which is a very small amount of the content, influenced the election in any way, is a pretty crazy idea.”