Giving users more say over their News Feeds, Facebook is finally testing a “downvote” button in conversation threads.

With the test, the social giant is only letting users vote down comments in conversations that accompany posts, not the posts themselves. After downvoting a comment, users will be prompted to tell Facebook whether they found it to be “offensive,” “misleading,” or “off topic.”

Facebook has long resisted such a feature, fearing it would facilitate a negative and unkind atmosphere.

Amid calls for a “dislike” button in 2014, CEO Mark Zuckerberg said: “I don’t think that’s socially very valuable or good for the community to help people share the important moments in their lives.” Therefore, “We’re not going to build that.”

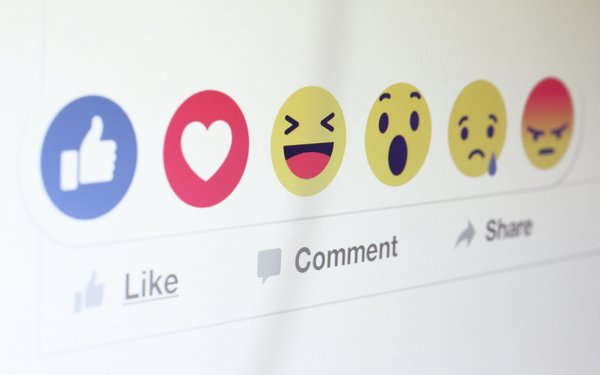

Since then, Facebook has sought to give users new ways to express themselves. This has included complementing its famous “like” button with options for users to express love, laughter, amazement, sadness and anger regarding particular posts.

The comment-voting system now being tested is common on other online platforms, Reddit in particular.

Reacting to Facebook’s test on Friday, Reddit cofounder Alexis Ohanian tweeted: “Sincerest form of flattery! Wish I’d trademarked it and ‘upvote’ when came up with it.”

Facebook is positioning the test as part of a broader effort to curb inappropriate content on its platform.

“We are not testing a dislike button,” a company spokesperson insisted on Friday. “We are exploring a feature for people to give us feedback about comments on public page posts ... This is running for a small set of people in the U.S. only.”

At the beginning of the year, Zuckerberg pledged to cleanse his social network of trolls, purveyors of false and misleading information, and other bad actors.

He accepted some responsibility for forces dividing people in the U.S. and worldwide. “The world feels anxious and divided, and Facebook has a lot of work to do,” he acknowledged.

Along with slowing user growth, Facebook’s inability to curb hate speech and deceptive content represents the biggest existential threat to the company. Among other repercussions, German officials recently vowed to fine Facebook and other tech giants more than $60 million if they can’t keep their networks clear of “obviously illegal” content, hate speech and fake news.

Zuckerberg is also engaged in a wider effort to foster more authentic engagement on Facebook, including tasking teams with reviewing and categorizing hundreds of thousands of posts, which guide a machine-learning model that detects different types of engagement bait.

Facebook also recently agreed to implement stricter demotions for Pages that systematically and repeatedly use engagement bait to artificially gain reach in News Feed.