Content-moderation efforts by Twitter and Facebook -- including Facebook's fact-checking of posts about Vice President Kamala Harris and Twitter's suspension of a Wisconsin candidate for U.S. Congress -- didn't violate federal election law, according to the Federal Election Commission.

The agency said last week it had unanimously rejected a series of complaints alleging that Twitter's and Facebook's content decisions between 2018 and the 2020 election amounted to prohibited “in-kind” campaign contributions.

Five of the FEC's commissioners said they believed the companies' moves were motivated by commercial considerations, including the goal of retaining advertisers, as opposed to an attempt to influence an election.

A sixth commissioner, Sean Cooksey, said he believes the companies are media organizations, and their content decisions are therefore exempt from campaign finance rules.

“Twitter is a publisher with a First Amendment right to control the content on its platform and to favor or disfavor certain speech and speakers,” Cooksey wrote in one of the decisions. “Its conduct therefore falls under the FEC’s media exemption, doesn’t qualify as an expenditure or contribution, and doesn’t violate campaign-finance law.”

One of the rejected complaints was brought by the campaign for Rep. Matt Gaetz (R-Florida), which said he was “shadow-banned” by Twitter in 2018, when the platform's autocomplete feature didn't populate a drop-down box with his name after users began typing it in.

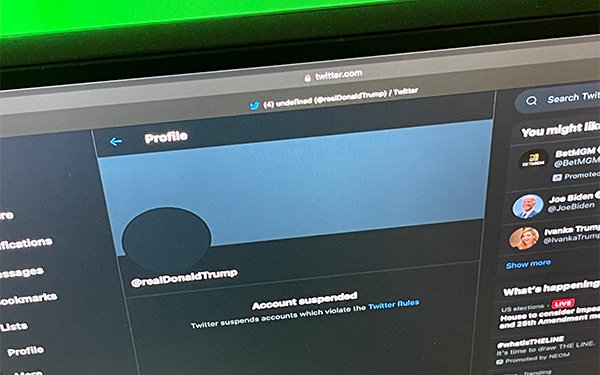

Twitter denied intentionally limiting Gaetz's visibility. Instead, the company said there was a brief “technical and nonpartisan” glitch in its autocomplete feature in 2018.

Another complainant, self-described “pro-White” candidate Paul Nehlen, who unsuccessfully sought the Republican nomination for U.S. Congress in Wisconsin, claimed Twitter violated campaign finance laws when it banned him from the platform.

Twitter countered that its content moves were motivated by a desire to retain advertisers.

“Twitter, like almost every other business, must respond to marketplace demands. To that end, major online advertisers ... have insisted that 'tech companies . . . do more to minimize divisive content on their platforms,'” the company wrote in a letter submitted to the agency.

A third complainant said he was suspended from Twitter for “suspicious activity” after making multiple posts supporting Democratic candidates.

Twitter said its automated system flagged the posts as potential spam and suspended the account for that reason.

The FEC's counsel recommended that the complaints be dismissed, writing that Twitter's actions “appear to reflect commercial considerations, rather than an effort to influence a federal election.”

The agency also dismissed separate complaints against Twitter stemming from its move to limit the viral spread of a disputed New York Post article about Biden's son, Hunter.

The day the story appeared, Twitter restricted users' ability to share the Post's article, citing a policy against distribution of “hacked” materials.

The web company's move prompted a backlash, and Twitter quickly restored people's ability to spread the article, while also announcing it would no longer remove “hacked” content unless shared directly by the “hackers.”

Twitter told the FEC that the decision to block “potentially hacked content” was made in order “to protect the safety, integrity, and commercial viability of its social media platform.”

The complaints against Facebook alleged that it restricted the spread of the disputed New York Post article, and also that it fact-checked or limited the spread of posts that were critical of the Biden campaign, including posts by people who criticized Harris.

For instance, one of the complaints alleged that Facebook suppressed distribution of an article titled “Kamala Harris Doesn’t Think You Have The Right To Own A Gun” by flagging it as false and redirecting users to a page by Agence France-Presse, which had fact-checked the article.

Facebook countered that it “has strong business reasons for seeking to minimize misinformation on its platform.”

The company added that misinformation is bad for the community, as well as its business.

“The explicit goal of Facebook’s third-party fact-checking program ... is to prevent the spread of viral misinformation and help users better understand what they see online,” the company wrote in a letter to the FEC.

The FEC accepted its general counsel's recommendation to reject those complaints against Facebook.

“The available information indicates that the actions taken by Facebook to fact-check and reduce the distribution of potential misinformation appear to reflect commercial considerations, rather than an effort to influence a federal election,” the general counsel said in its recommendation.