Thanks to gravity, AR gains a cool new edge

Mobile augmented reality. You’ve undoubtedly heard the hype by now. You’ve probably even taken one of the numerous marketing applications for a spin and idly tweeted or Facebooked the results, immediately pushing to the entirety of your social network a photo of a prominent celebrity or cartoon character’s face superimposed over your own. Or a photo of you standing next to a 3-D model. Maybe a screenshot of a 3-D model you “discovered” or “unlocked” with the camera of your smartphone.

This sort of experience is both a blessing and a curse to the industry. Though often entertaining, the “wow” factor of AR as a visual medium is only the beginning of designing a marketable and successful experience (just as it is in visual advertising). Lately it seems as though some app developers exploit and optimize this aspect of the technology without creating features that bring the experience beyond just visual engagement.

As a result, we often see AR interpreted narrowly as a purely optical technology. It is not uncommon for a journalist to describe AR in terms of “Terminator vision” and head-mounted or heads-up display interfaces while writing about an AR application that lets the user see either floating navigational indicators (information that has no direct referent in reality, merely GPS data converted after the fact) or a static 3-D model of a bunny rabbit … attached to an image of a 2-D drawing of a bunny rabbit.

Portraying AR as a chiefly visual technology isn’t wrong, exactly. AR originally came from computer-vision and optical-recognition research, and there are untold uses for this kind of interface. GPS and navigational experiences are still welcome additions to the AR ecosystem, but they lack the real-world interaction of computer vision-based experiences. Even those, as in the case of the “bunny rabbit” example, can leave something to be desired.

Mobile augmented-reality experiences now dominate the market, compared to Web and offline kiosk installations.

And with the advent of sophisticated mobile devices with complex on-board sensors and cameras, AR is starting to move beyond the common point-and-view interfaces to merge data from all of these elements in order to arrive at immersive and intuitive augmented experiences.

Look at the recent developments from San Francisco-based start-up Quest Visual, which pioneered mobile Optical Character Recognition (OCR) with its famous iPhone app, Word Lens. The app instantly translates foreign text to your native tongue when you just wave the camera over it. Throw in a dash of data from the GPS and you could have an app that automatically translates visible text based on the location of the user.

Sensor fusion will be the next great frontier in mobile AR. It will provide a more robust and natural way to access and analyze real-world data using location, movement, position (and gravity) before the camera even comes into play. And, yes, sensor fusion sounds as if it’s some sort of sci-fi amalgamation of components; barometric compasses and accelerometric thermometers come to mind. But it’s more about merging information.

It’s the combination of data coming from two or more sensors on a device in real time to create a new set of information, and a novel experience built on that merged data.
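As a rough illustration of what merging sensor data can look like in practice, here is a minimal sketch of a complementary filter, a common textbook technique and not necessarily what any particular AR product uses. It blends a gyroscope's rotation rate with the tilt implied by an accelerometer to estimate how far a device is pitched. The function name, the sample format and the blend weight `alpha` are all assumptions made for this example.

```python
import math

def fuse_pitch(samples, dt, alpha=0.98):
    """Estimate pitch (degrees) from (gyro_rate_dps, accel_y, accel_z) samples.

    gyro_rate_dps: rotation rate around the x axis, in degrees per second
    accel_y, accel_z: accelerometer readings in any consistent unit
    dt: seconds between samples; alpha: weight given to the gyroscope
    (sample layout and parameter names are hypothetical)
    """
    pitch = 0.0
    for gyro_rate_dps, accel_y, accel_z in samples:
        # Gyroscope: smooth and responsive, but drifts as errors accumulate.
        gyro_pitch = pitch + gyro_rate_dps * dt
        # Accelerometer: noisy, but gravity gives it a drift-free reference.
        accel_pitch = math.degrees(math.atan2(accel_y, accel_z))
        # Blend the two so each sensor covers the other's weakness.
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
    return pitch
```

Fed a few hundred samples from a phone held still at a 30-degree tilt, the estimate settles close to 30 degrees. A gyroscope with a small constant bias pulls the estimate off by only a bounded amount, whereas integrating the gyroscope alone would drift without limit.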

It sounds futuristic, but these days the average smartphone comes with an impressive array of internal gadgets: a gyroscope for orientation, an accelerometer to detect movement and a digital compass. The iPhone has both ambient light and proximity sensors. Some Android devices carry a barometer.

Let’s say your phone has sensors that can calculate orientation, position, location and barometric pressure. With the input of geographical, urban, weather and astronomical data, you could potentially calculate the exact position and trajectory of the sun based on the user’s location. It’s not difficult to envision a mobile application that can direct people to locations where they can get the best view of a beautiful sunset, or the best spot in the city to get sustained sunlight over a specific period of time. An app, of course, sponsored by a sunglasses company.
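The astronomical part of that idea can be sketched with a standard approximation. The snippet below estimates how high the sun stands above the horizon from latitude, day of the year and local solar time. It is a simplified illustration under stated assumptions: it ignores atmospheric refraction, the difference between clock time and solar time, and everything a real app would need from weather or city data. The function name and parameters are invented for this example.

```python
import math

def solar_elevation_deg(latitude_deg, day_of_year, solar_hour):
    """Approximate the sun's elevation above the horizon, in degrees.

    solar_hour is local solar time (12.0 = the sun's highest point),
    which differs from clock time by longitude and time-zone offsets.
    """
    # Approximate solar declination for the given day (textbook formula).
    declination = -23.44 * math.cos(
        math.radians(360.0 / 365.0 * (day_of_year + 10)))
    # Hour angle: the sun moves 15 degrees per hour relative to solar noon.
    hour_angle = 15.0 * (solar_hour - 12.0)

    lat = math.radians(latitude_deg)
    dec = math.radians(declination)
    ha = math.radians(hour_angle)
    sin_elevation = (math.sin(lat) * math.sin(dec)
                     + math.cos(lat) * math.cos(dec) * math.cos(ha))
    # Clamp to guard against floating-point values just outside [-1, 1].
    sin_elevation = max(-1.0, min(1.0, sin_elevation))
    return math.degrees(math.asin(sin_elevation))
```

Evaluating this over the hours of a day for a handful of candidate spots would give a first, crude ranking of where the sun stays above the horizon longest. Buildings, terrain and clouds would still have to come from the other data sources mentioned above.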

It won’t be pretty, at least not at first. Sensors are not unlike colors: Haphazardly mix them together and your result will look … unsightly. The idea is to discover combinations that will enhance the overall quality of an experience. In almost all cases, the fusing of two or more sensors needs to be relevant to the device’s camera in order to improve an augmented experience. So this was the question for the Metaio R&D department: How do we combine sensors in a way that enhances optical recognition and image tracking? In other words, how could we make the phone’s camera smarter? Though the methods were complex, the answer was simple. We align everything according to one of the most basic principles of our day-to-day lives: gravity.

Through algorithms and the fusion of sensors (movement, orientation) with optical data from the camera, we were able to teach the smartphone how to see with respect to up and down. Imagine trying to do anything in your daily routine without a sense of up and down. Or, even better, imagine if the concept and effects of gravity were completely alien to you. You’d be floating around in near chaos trying to make sense of your environment, much like the camera on your phone before we taught it to understand its surroundings. We taught it orientation.

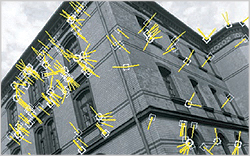

With our software, mobile cameras no longer search for independent points of contrast when trying to recognize images. Aligning the camera with gravity supplies a constant framework for processing images. Take the facade of a brick building: Every window on that building has an almost identical set of contrasting features (see Fig. 1). With Gravity-Aligned Feature Descriptors (GAFD), the camera automatically orients each window with respect to up and down. The inertial sensors work to give the camera an understanding of what it sees. With a standardized orientation, overlaying the sides of buildings with digital information becomes very straightforward.
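To make the idea of orienting image features by gravity a little more concrete, here is a minimal sketch of one possible ingredient: working out which way "down" points in the image at a given pixel, using the gravity vector reported by the inertial sensors. This is an illustration under a simple pinhole camera model, not Metaio's implementation. The function name, the coordinate convention and the parameters are assumptions made for this example.

```python
import math

def gravity_angle_deg(gravity_cam, u, v, focal_px, cx, cy):
    """Angle (degrees) of gravity's projection into the image at pixel (u, v).

    gravity_cam: gravity vector (gx, gy, gz) in camera coordinates, assumed
    here to be x right, y down, z forward (a common pinhole convention).
    focal_px, cx, cy: focal length and principal point, in pixels.
    Returns None when gravity points almost straight along the viewing ray.
    """
    gx, gy, gz = gravity_cam
    # Normalized image coordinates of the pixel under a pinhole model.
    x = (u - cx) / focal_px
    y = (v - cy) / focal_px
    # Direction in which a point on this viewing ray would appear to move
    # in the image if it were pushed along gravity.
    dx = gx - x * gz
    dy = gy - y * gz
    gravity_norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if math.hypot(dx, dy) <= 1e-6 * gravity_norm:
        return None
    return math.degrees(math.atan2(dy, dx))
```

With the phone held upright the function returns 90 degrees at the image center (straight down the image), and 0 degrees once the phone is rolled a quarter turn. The patch around each feature could then be rotated by this angle before it is described, so that "down" in the patch is the same direction however the phone is held.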

The implications border on science fiction. Look at an office building from street level and immediately determine which windows correspond to different commercial real estate listings: Selecting the highlighted window yields a 360° navigable photograph, pricing and lease information, and direct access to the realtor. An urban scene marred by graffiti is restored to its original state for photographs. Virtual, interactive murals span city blocks. Every poster or billing for a film or concert yields a corresponding visual key and information on how to attend. Furnish your home or office without tape measures or blueprints. And that’s just the beginning.

GAFD works on a smaller scale as well. Recognition comes faster; tracking is smoother, and it sticks. That virtual bunny rabbit you just discovered on the cover of a magazine isn’t going to jitter uncontrollably (unless it’s supposed to). Virtual objects and animations will be more firmly attached to their anchors, whether they’re a building, a newspaper, a product package or a business card, and they’ll behave more realistically. Candle flames on virtual birthday cakes will always point to the sky, just like in real life, no matter how you tilt the reference image. This technology is so subtle that you may have even believed that AR already worked like this.
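The candle-flame behavior can be sketched in a few lines. Assuming image tracking supplies the rotation of the reference image (the anchor) relative to the camera, and the inertial sensors supply gravity in camera coordinates, the flame's direction is simply "opposite to gravity" expressed in the anchor's own axes. The function and parameter names below are hypothetical, and the sketch is an illustration, not a description of any specific AR engine.

```python
import math

def up_in_anchor_frame(rotation_anchor_to_camera, gravity_cam):
    """Return the unit 'up' direction expressed in the anchor's own axes.

    rotation_anchor_to_camera: 3x3 rotation matrix (list of rows) taking
    anchor coordinates to camera coordinates, e.g. from image tracking.
    gravity_cam: non-zero gravity vector measured in camera coordinates.
    """
    norm = math.sqrt(sum(c * c for c in gravity_cam))
    up_cam = [-c / norm for c in gravity_cam]  # up is opposite to gravity
    r = rotation_anchor_to_camera
    # Multiply by the transpose, which is the inverse of a rotation matrix,
    # to move the up vector from camera axes into anchor axes.
    return [sum(r[row][col] * up_cam[row] for row in range(3))
            for col in range(3)]
```

Rendering the flame along this vector, recomputed every frame, keeps it pointing skyward while the cake itself stays glued to the tilted magazine page.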

It didn’t. But it does now.

In the not-so-distant future, we will be able to access astounding amounts of information from our surroundings, just with our smartphones. There may come a day when it suddenly hits you how intuitive the process of interacting with your surroundings has become. And you’ll pause, marveling that it all began with a phone’s camera and a gyroscope. Welcome to the augmented world, where the digital is as natural as gravity.
