Marking its latest attempt to curb COVID-related misinformation, Facebook will soon begin alerting users who engage with such content.

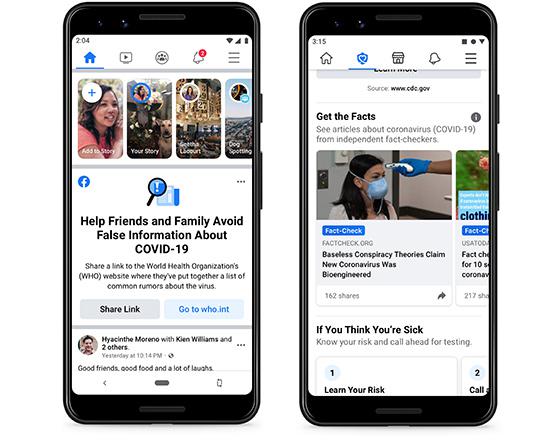

“We’re going to start showing messages in News Feed to people who have liked, reacted or commented on harmful misinformation about COVID-19,” Guy Rosen, Facebook’s vice president of integrity, announced on Thursday.

Set to take effect over the next few weeks, the messages will direct users to more accurate information, Rosen said.

For Facebook, the move is part of a much larger effort to slow the spread of false and misleading information, an effort that has recently focused on COVID-19.

For example, the social giant recently added a new section called “Get the Facts” to its COVID-19 Information Center; the section features fact-checked articles from partners.

Since the crisis began, Rosen said, Facebook has directed over 2 billion users to resources from the World Health Organization and other health authorities through its information center.

The company now relies on approximately 60 fact-checking organizations to review and rate the vast stream of content that flows through its platform.

Once a piece of content is rated false, Facebook reduces its distribution and labels it with a warning to users. Based on a single fact-check, Facebook also uses similarity detection methods to identify duplicates of false stories.

In March, Facebook displayed warnings on roughly 40 million posts related to COVID-19, based on approximately 4,000 articles flagged by fact-checking partners.

Users have largely heeded such warnings: after seeing them, users chose not to view the labeled content 95% of the time, according to Rosen.

Yet, as conspiracy theories continue to flourish, analysts have grown skeptical about social networks’ ability to police their platforms.

“Given the novelty of the disease and the fast-changing nature of related news, it’s safe to assume that a large portion [of related content] is inaccurate or outdated,” Jasmine Enberg, a senior analyst of global trends and social media at eMarketer, recently said.

Still, Facebook’s misinformation-fighting efforts appear to be more effective than those of other networks. On Twitter, for example, researchers recently found that 59% of posts within their sample of false content went undetected by the platform.

By contrast, 24% of posts within the researchers’ sample of false content went undetected by Facebook.