Citing privacy

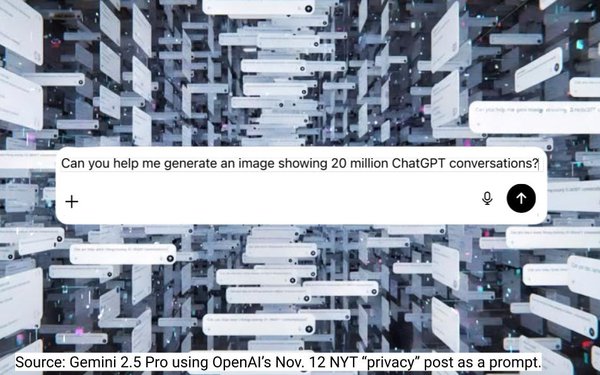

concerns, artificial intelligence company OpenAI is asking a federal judge to reconsider an order requiring it to provide The New York Times and other newspapers with logs of 20 million

conversations between users and ChatGPT.

"This data belongs to ChatGPT users all over the world -- families, students, teachers, government officials, financial analysts, programmers, lawyers, doctors, therapists, and even journalists -- whose private thoughts and confidential business information may now be exposed," OpenAI's lawyers argue in a letter sent Wednesday to U.S. District Court Magistrate Judge Ona Wang in New York.

"To be clear: anyone in the world who has used ChatGPT in the past three years must now face the possibility that their personal conversations will be handed over to The Times to sift through at will in a speculative fishing expedition," the company's lawyers add.

OpenAI is asking Wang to conduct further proceedings regarding the material, and to immediately halt the order to disclose the data on the grounds that turning over the information "will irreversibly harm" users' privacy.

The news organizations countered in a letter filed with Wang late Wednesday that OpenAI is simply trying to avoid turning over evidence.

"OpenAI’s ill-considered request is not about protecting user privacy. That concern is simply a pretext for yet another attempt to evade production of evidence," the organizations argue.

Wang issued the order Friday at the request of news organizations that are suing OpenAI over alleged copyright infringement. The Times and others claim OpenAI wrongly trains its large language models on news articles, and that it displays verbatim excerpts from articles in response to queries by users.

Lawyers for the news organizations told Wang they need the chat logs in order to analyze ChatGPT's outputs, arguing in an October 30 letter to Wang that the conversations will provide direct evidence of how users interact with the large language model.

Wang's order specifically requires OpenAI to disclose "anonymized" chat logs by Thursday, or within seven days of "completing the de-identification process."

A similar high-profile dispute came up earlier this century, in Viacom's copyright infringement lawsuit against Google's YouTube. In 2008, a federal judge presiding over that matter ordered Google to disclose records showing which users watched particular YouTube videos. Viacom intended to use data about viewing habits to prove that pirated clips are popular with users.

That order drew widespread condemnation from privacy advocates, and Google and Viacom later agreed to take steps to obscure user IDs and IP addresses by replacing them with anonymous identifiers. (The underlying lawsuit was ultimately settled in 2014.)

OpenAI suggests that a similar procedure won't protect users in this case.

"The logs at issue here are complete conversations: each log in the 20 million sample represents a complete exchange of multiple prompt-output pairs between a user and ChatGPT," the company argues. "Disclosure of those logs is thus much more likely to expose private information, in the same way that eavesdropping on an entire conversation reveals more private information than a 5-second conversation fragment."

The company points to a sworn declaration by a member of its technical staff who said its deidentification procedure is designed to shed certain types of personally identifiable information, passwords and other "sensitive" data, but not content such as health or financial information.

OpenAI on Wednesday also said in a blog post that the Times' demand for information "disregards long-standing privacy protections, breaks with common-sense security practices, and would force us to turn over tens of millions of highly personal conversations from people who have no connection" to the litigation.

A spokesperson for the Times accused OpenAI of "fear-mongering," writing in an email to MediaPost that the company's post "misleads its users and omits the facts."

"No ChatGPT user’s privacy is at risk," the spokesperson stated, adding that OpenAI’s terms of service allow it to harness users' chats for training, and to disclose chats for litigation.