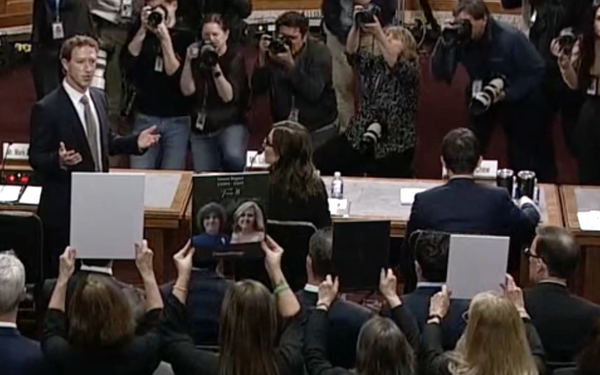

Two months after CEO Mark Zuckerberg was forced by U.S. lawmakers to apologize to parents who have lost kids to sexploitation on Meta's family of apps, the tech giant has announced an expansion of its child safety tools on Instagram.

The company is testing new ways to help protect younger Instagram users from falling victim to various forms of intimate image abuse, while also trying to boost awareness surrounding potential sextortion scams across the image-and-video-sharing app.

First off, Instagram is testing a nudity protection feature in direct messages -- where sextortion scammers often share or ask for intimate images -- that automatically blurs images detected as containing nudity. The feature will be turned on by default for teens under 18 globally. The company will even show a notification to adult users to encourage them to turn the feature on.

When nudity protection is turned on, those sending images containing nudity will see a message reminding them to be cautious when sending sensitive photos, and that they have the ability to unsend those photos if they choose.

According to Instagram, the feature is designed “not only to protect people from seeing unwanted nudity in their DMs, but also to protect them from scammers who may send nude images to trick people into sending their own images in return.”

Users who end up sending or receiving nude images on Instagram will also be directed to safety tips about potential risks, such as screenshotting, altered relationships, and scams, which Meta says were developed with guidance from experts. A link to various resources -- Meta’s Safety Center, support helplines, etc. -- will appear as well.

Instagram says it is also developing technology that helps identify accounts that may be engaging in sextortion scams, “based on a range of signals that could indicate sextortion behavior.” The company says it will report any users it catches to the National Center for Missing and Exploited Children (NCMEC).

In addition, any message requests sent by potential sextortion accounts will go to the recipient’s hidden requests folder. Those actively chatting with these flagged accounts will receive safety notices encouraging them to report any threats. The “Message” button on teens’ profiles will also vanish for potential sextortion accounts, even if they’re already connected, making it nearly impossible for those accounts to reach out directly.

“We’re also testing hiding teens from these accounts in people’s follower, following and like lists, and making it harder for them to find teen accounts in Search results,” Meta wrote in a blog post.

Finally, Instagram is expanding its partnership with Lantern, a program run by the Tech Coalition that enables technology companies to share signals about accounts and behavior that violate their child safety policies.

“We are hopeful these new measures will increase reporting by minors and curb the circulation of online child exploitation,” said John Shehan, senior vice president of the National Center for Missing and Exploited Children.

However, some advocates for child safety aren't so hopeful. ParentsTogether, a parent and family nonprofit advocating for a safer internet for kids, has called Meta's announcement a PR stunt, highlighting the company's previous failures to protect children from predators and sexual exploitation on its apps and sharing stories of personal trauma.

“Meta has shown zero willingness to engage with parents whose kids have died due to sextortion on Meta platforms so it's hard not to see this announcement as another cynical attempt to do just enough to avoid growing calls for state and federal legislators to regulate Big Tech,” said Shelby Knox, campaign director at ParentsTogether.

“[Meta has] ignored us and now, years later, here they are rolling out weak-half-measure,” commented Jenn Markus, who lost her son Braden when he died by suicide 27 minutes after being contacted by a sextortionist on Instagram. “The only thing it says is that they are not serious about this.”