Meta has officially announced the first stage of “Community Notes,” a controversial user-dependent content-moderation replacement for the company’s longstanding fact-checking program.

Despite concerns from its own Safety Council, which believes Community Notes will put an “unreasonable burden” onto users -- especially groups most vulnerable to long-term, cumulative harm -- Meta plans to begin rolling the feature out early next week across Instagram, Facebook and Threads.

Meta CEO Mark Zuckerberg initially announced the company’s plans to launch Community Notes shortly after President Donald Trump was re-elected. At the time, Zuckerberg also said Meta would end “a bunch of restrictions on topics like immigration and gender that are just out of touch with mainstream discourse.”

Not long after, the company altered its hateful conduct policy to “allow allegations of mental illness or abnormality when based on gender or sexual orientation,” while also removing a long-held policy that barred users from referring to women as household objects or property and from referring to transgender or non-binary people as “it.”

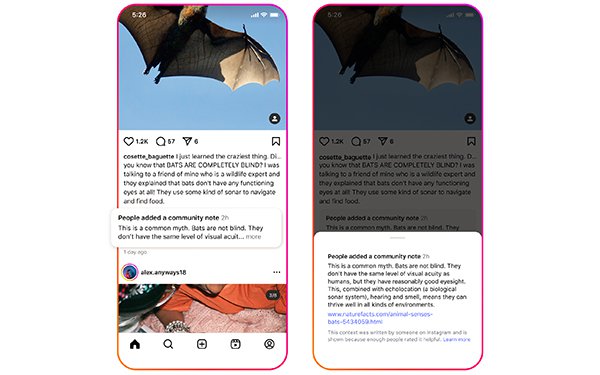

With Community Notes, which is essentially crowd-sourced fact-checking, Meta says it will not be “reinventing the wheel.”

Aligning more closely with the Trump administration, Meta’s new moderation program will be based on the open-source algorithm developed by X, the social media platform owned by Trump ally Elon Musk.

According to Meta, the rating system will take into account each contributor’s rating history and evaluate which contributors usually disagree. Over time, Meta plans to tweak its own algorithm to support how Community Notes are ranked and rated.

Like X’s, Meta’s process will factor in political bias to dilute potential conflict between contributors.

“Meta won’t decide what gets rated or written -- contributors from our community will,” the company says. “And to safeguard against bias, notes won’t be published unless contributors with a range of viewpoints broadly agree on them. No matter how many contributors agree on a note, it won’t be published unless people who normally disagree decide that it provides helpful context.”
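
The publication rule Meta describes can be illustrated with a toy sketch. This is not Meta's or X's actual code: the function name, the hand-assigned viewpoint labels, and the thresholds below are all hypothetical. Meta says the real system works out which contributors usually disagree from their rating history, whereas this sketch swaps in fixed labels to keep the idea visible.

```python
from collections import defaultdict


def note_is_publishable(ratings, group_of, min_ratings_per_group=2, threshold=0.6):
    """Toy 'bridging' rule (hypothetical, not Meta's or X's implementation).

    ratings:  list of (contributor_id, rated_helpful) pairs for one note
    group_of: dict mapping contributor_id to an assumed viewpoint label
    """
    # Tally [helpful votes, total votes] per viewpoint group.
    counts = defaultdict(lambda: [0, 0])
    for contributor, rated_helpful in ratings:
        group = group_of[contributor]
        counts[group][1] += 1
        if rated_helpful:
            counts[group][0] += 1

    # Agreement inside a single group is never enough, however large it is.
    if len(counts) < 2:
        return False

    # Every group that rated the note must mostly find it helpful.
    return all(
        total >= min_ratings_per_group and helpful / total >= threshold
        for helpful, total in counts.values()
    )


# Assumed labels for four hypothetical contributors.
group_of = {"a": "left", "b": "left", "c": "right", "d": "right"}

one_sided = [("a", True), ("b", True), ("c", False), ("d", False)]
bridged = [("a", True), ("b", True), ("c", True), ("d", True)]

print(note_is_publishable(one_sided, group_of))  # False
print(note_is_publishable(bridged, group_of))    # True
```

Under a rule of this shape, a note backed by one camp alone stays hidden regardless of how many contributors in that camp support it.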

In studies, this approach to moderating misleading or harmful content has proven ineffective. According to the Center for Countering Digital Hate (CCDH), 73% of Community Notes related to political topics on X are never displayed, even though they provide reliable context.

Another study conducted last month by Spanish fact-checking site Maldita found that 85% of all Community Notes are never displayed to X users.

Furthermore, X's Community Notes feature has been infiltrated by groups of contributors who strategically collaborate to upvote and downvote notes based on their shared ideologies, undermining the effectiveness of the feature. This is also likely to occur on Facebook, Instagram and Threads, which have billions more active users.

Meta has decided to first roll out Community Notes in the U.S. The company says around 200,000 potential contributors have signed up across all three Meta-owned apps.

However, notes won’t initially appear on content, as Meta plans to start by “gradually and randomly” admitting people off the waitlist, and says it will “take time to test the writing and rating system before any notes are published publicly.”

Over time, as Community Notes rolls out to more users in more markets, third-party fact-checking will disappear entirely.

While Meta has made it clear that Community Notes will not appear on paid ads, some marketers are still concerned about their future placements.

“With fewer checks in place, there is a risk that ads may appear next to unverified content, which could impact user trust and engagement,” Gabrielle Turyan, director of product marketing at Digital Remedy, told MediaPost. “As a result, advertisers will need to reassess their brand-safety settings, update exclusion lists, and implement additional content certification measures. Monitoring performance closely will also be key to identifying any impact on ad effectiveness.”

Overall, Meta is hopeful about its switch to Community Notes, expecting the program to be “less biased than the third-party fact checking program it replaces, and to operate at a greater scale when it is fully up and running.”

“When we launched the fact checking program in 2016, we were clear that we didn’t want to be the arbiters of truth and believed that turning to expert fact checking organizations was the best solution available,” Meta adds. “But that’s not how it played out, particularly in the United States. Experts, like everyone else, have their own political biases and perspectives. This showed up in the choices some made about what to fact check and how.”

In its announcement, Meta cites a study by the University of Luxembourg that shows Community Notes on X reducing the spread of misleading posts by an average of over 60%; a Science study that shows crowdsourced fact-checking to be as accurate as traditional fact-checking, while being more scalable and trustworthy; and a recent study in PNAS Nexus, which finds that the user-provided context of Community Notes results in much higher trustworthiness than fact-checking.

These findings, however, do not address the possibility that Community Notes will not be shown to users if contributors don’t agree on the harmfulness of certain content. Given how much misinformation Musk shares on his own X account, it is difficult to picture his followers agreeing that even his most misleading posts should be removed.

Meta will begin testing Community Notes in the U.S. on March 18.