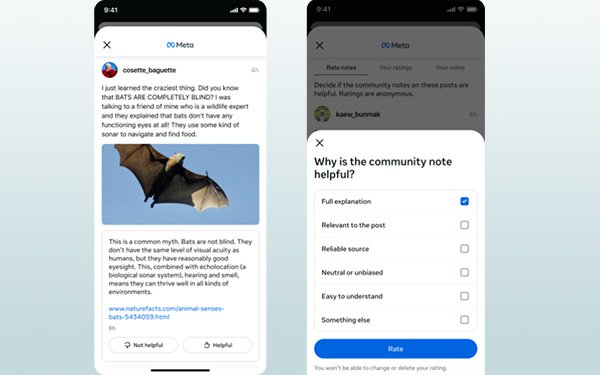

As Meta rolls out the first stage of Community Notes -- a controversial, user-dependent content-moderation program based on X’s current system -- a new report highlights deep-seated flaws in the approach, based on how it has performed on Elon Musk’s social-media app.

According to Bloomberg’s recent analysis of 1.1 million Community Notes posted on X between the start of 2023 and last month, the system has “fallen well short of counteracting the incentives, both political and financial, for lying, and allowing people to lie.”

“Furthermore, many of the most cited sources of information that make Community Notes function are under relentless and prolonged attack -- by Musk, the Trump administration, and a political environment that has undermined the credibility of truly trustworthy sources of information,” the report continues.


Since Musk expanded and rebranded X’s crowdsourced fact-checking program (originally called Birdwatch) as Community Notes shortly after he purchased the app in 2022, the content-moderation approach has become a symbol of the billionaire’s “free speech” mission, in which users have the power to bypass traditional gatekeepers and decide what content is acceptable and accurate.

Reputable studies have documented the benefits of crowdsourced fact-checking, finding that the approach reduces the spread of misleading posts by an average of more than 60%, that Community Notes can be as accurate as traditional fact-checking, and even that notes are perceived as much more trustworthy than traditional fact-checks.

However, the majority of notes on divisive topics are never displayed because users cannot agree on them. This highlights the approach’s major weakness: a note is shown publicly only if users agree on its validity.

According to Meta, “notes won't be published unless contributors with a range of viewpoints broadly agree on them…it won't be published unless people who normally disagree decide that it provides helpful context.”

Studies have found this approach to moderating misleading or harmful content to be ineffective. According to the Center for Countering Digital Hate (CCDH), 73% of Community Notes on political topics on X are never displayed, even though they provide reliable context.

Another study conducted last month by the Spanish fact-checking site Maldita found that 85% of all Community Notes are never displayed to X users.

Bloomberg found that even notes that are published and initially rated “helpful” are removed 26% of the time once disagreement ensues.

“The removal rate is even higher for certain contentious topics and figures,” Bloomberg notes. “From a sample of 2,674 notes about Russia and Ukraine in 2024, the data suggests more than 40% were unpublished after initial publication. Removals were driven by the disappearance of 229 out of 392 notes on posts by Russian government officials or state-run media accounts, based on analysis of posts that were still up on X at the time of writing.”

Echoing other studies, Bloomberg adds that pro-Russia voices rally their social-media followers to strategically vote against proposed or published notes. This may be even more likely to happen across Facebook, Instagram and Threads, which have billions more active users than X.

Despite Musk's insistence that crowdsourced fact-checking counteracts the untrustworthy influence of legacy media, Bloomberg found that, ironically, mainstream media sources make up the backbone of Community Notes on X.

“Sites categorized by online security group Cloudflare as ‘news & media’ and ‘magazines’ accounted for 31% of links cited within notes,” Bloomberg notes. “Social networks were the next leading category with 20%, followed by educational sites with 11%.”

Bloomberg's analysis shows that the 40 most-referenced domains within Community Notes -- which accounted for 50% of all notes on the platform -- included Reuters, the BBC and NPR, all three of which Musk has publicly attacked.

Furthermore, in a random sample of 400 notes that cited X posts as a source, 12% of the cited posts came from professional journalists and media organizations, and in a random sample of 400 YouTube clips, 29% contained mainstream media footage.

But despite repeated reporting on Community Notes’ flaws and warnings from its own Safety Council, Meta is moving ahead with the program, implementing it across its most popular social apps, which are used by a total of 3.3 billion people worldwide.