Following the release of controversial AI chatbot personas and disturbing reports on the alleged interactions of these chatbots with

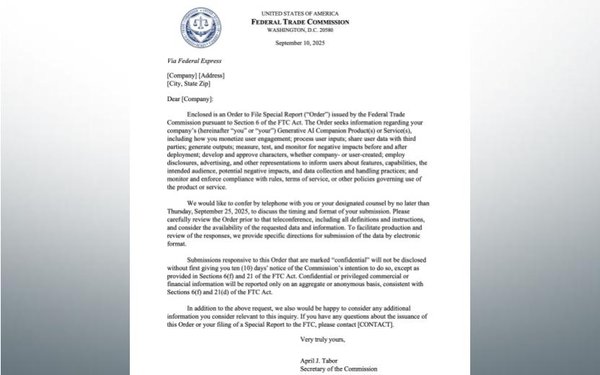

minors, the Federal Trade Commission (FTC) has ordered Meta, OpenAI, Snapchat, X, Google and Character AI to provide information on how they measure, test and monitor the potentially harmful impacts

their chatbots have on children and teens.

“AI chatbots can effectively mimic human characteristics, emotions, and intentions, and generally are designed to communicate like a friend or confidant, which may prompt some users, especially children and teens, to trust and form relationships with chatbots,” the FTC writes.


The FTC's concerns align with recent studies showing that AI companionship is not properly regulated for younger users and could have unprecedented consequences for the personal growth and well-being of minors, especially the loneliest among them.

The FTC is therefore seeking to understand what steps these tech giants are taking to evaluate the safety of their chatbots as companions for minors, so that it can potentially impose limits on these products and inform users and parents about the risks associated with them.

This action comes weeks after Reuters obtained an official Meta document covering the standards guiding the company’s generative AI assistant and chatbots, which showed that AI companions were allowed to engage “a child in conversations that are romantic or sensual.”

In addition, The Washington Post recently reported that Meta's AI chatbots were coaching teen Facebook and Instagram users through the process of committing suicide, with one bot planning a joint suicide and raising it again with a minor in later conversations.

“The study we're launching today will help us better understand how AI firms are developing their products and the steps they are taking to protect children,” the FTC says.