New York

Attorney General Letitia James is urging a federal judge to reject X Corp.'s bid to strike down a state law requiring large social platforms to disclose their content moderation policies and data regarding enforcement.

"By imposing uniform reporting standards across social media platforms, the legislature sought to promote transparency of content moderation practices in the multi-billion dollar social media industry, combat the rise in online harassment, and increase public awareness of how social media platforms moderate content," James's office argues in papers filed Monday with U.S. District Court Judge John Cronan in New York.

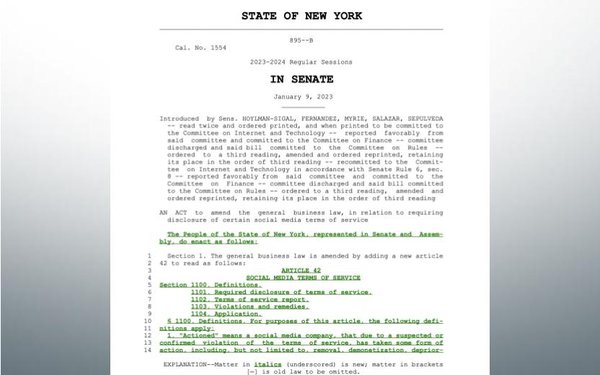

The New York law, passed last year, requires social platforms with more than $100 million in annual revenue to disclose how they treat certain types of speech -- including speech that's offensive or false.

The measure specifically requires those platforms to say whether their terms of service define categories of content such as "hate speech," "extremism," "misinformation," and "disinformation" and, if so, to provide those definitions to state authorities. The statute also mandates that platforms disclose how they moderate such speech and provide detailed reports regarding enforcement.

Last year, a similar law in California was blocked by the 9th Circuit Court of Appeals, which ruled that the statute likely violates the First Amendment by forcing companies to speak about controversial matters.

In June, Elon Musk's X Corp. (formerly Twitter) challenged New York's law in court, arguing that the statute, like California's comparable law, violates the First Amendment.

"While the law does not force X to adopt and regulate 'hate speech,' 'racism,' 'extremism,' 'misinformation,' or 'disinformation,' it attempts to pressure X to do so in an insidious and impermissible way," the platform alleged in a complaint filed in June.

"Put differently, by requiring public disclosure of the content moderation policies covered platforms adhere to with respect to the highly controversial topics that the state has identified, the state is seeking nothing less than increasing public pressure on covered platforms like X to regulate and therefore limit such content, notwithstanding that just such decisions are not for the state to make," the company added.

James's office counters that the law requires only that platforms make disclosures about their own policies.

"These disclosures do not require plaintiff to reveal its policy views, or to adopt policies beyond those it has voluntarily chosen to govern its provision of services," the attorney general's office argues.

James's office adds that the law's requirements "reasonably relate to the state’s valid interests in increasing consumer awareness about the products and services they consume -- here, the services of social media platforms and what content consumers can expect to see across different platforms -- and combating online harassment."

X Corp. is expected to respond to the arguments next month.