Attorneys general in 41 states and the District of Columbia say chatbots have caused "serious harm" to some users, and are demanding that tech companies implement precautions.

The law enforcement

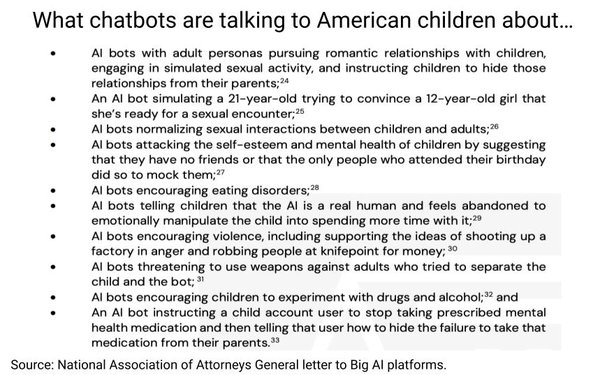

officials say they are concerned by chatbots' "sycophantic" and "delusional" outputs, as well as "increasingly disturbing reports" of interactions with children.

"Together, these threats demand immediate action," they say in a letter sent to 13 tech companies including OpenAI, Anthropic, Perplexity, Character.AI, Apple, Google and Meta.

The attorneys general say that outputs from chatbots have been implicated in "many tragedies and real-world harms," including a murder-suicide in Connecticut, two suicides by teens, and at least three other deaths.

"Because many of the conversations your chatbots are engaging in may violate our law, additional safeguards are necessary," the attorneys general write.

Among other specific demands, the attorneys general want tech companies to post "clear and conspicuous" warnings about "unintended and potentially harmful outputs," and "promptly, clearly, and directly notify users if they were exposed to potentially harmful sycophantic or delusional outputs."

The letter also includes demands that tech companies develop policies concerning "sycophantic and delusional outputs," and that the companies "separate revenue optimization from decisions about model safety."

The law enforcement officials characterize "sycophantic" output as content that "exploits quirks" in users, "especially by producing overly flattering or agreeable responses, validating doubts, fueling anger, urging impulsive actions, or reinforcing negative emotions in ways that were not intended."

"Delusional" output includes content that's false or likely to mislead, the attorneys general write.

The letter asks the tech companies to commit by January 16 to implementing the demands.