From improving search functionality to better policing hate speech, there are many reasons why Facebook would want a more efficient way to decipher text inside of images and videos.

Enter Rosetta. The AI that Facebook built in-house is being deployed across Instagram and its flagship platform.

From memes to street signs to restaurant menus, the machine-learning system is already extracting text from more than 1 billion public Facebook and Instagram images and video frames, daily and in real time.

The system inputs all this text -- in various languages -- into a recognition model that has been trained on classifiers to understand the context of the text and the image together.

The goal is to understand the context in which text appears in images and video, which is important for search, categorization and policing.

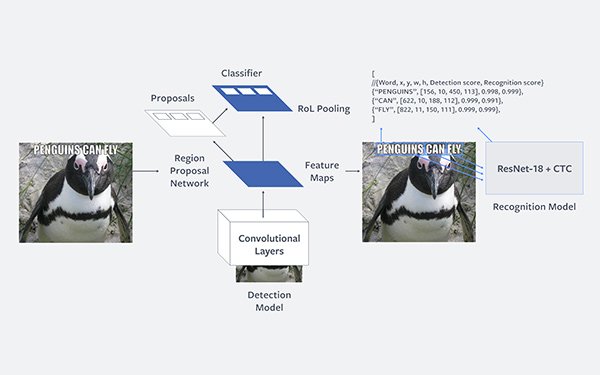

Rosetta performs text extraction on an image in two independent steps: detection and recognition. First, it detects rectangular regions that potentially contain text. Then, for each of the detected regions, it uses a convolutional neural network (CNN) to recognize and transcribe the word in the region.
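
To make the two-step design concrete, here is a minimal Python sketch of a detect-then-recognize pipeline. It is not Facebook's code: the "image" is a toy grid of characters, and `toy_detect`, `toy_recognize`, `Box` and `extract_text` are hypothetical names invented for illustration. In a real system, a trained detector and a CNN-based recognizer would stand in for the two toy functions.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Toy "image": each string is one row; spaces are background, anything else is "ink".
Image = List[str]


@dataclass(frozen=True)
class Box:
    """A rectangular region on a single row (hypothetical, for illustration)."""
    row: int
    left: int
    right: int  # exclusive


def toy_detect(image: Image) -> List[Box]:
    """Step 1 stand-in: propose regions that potentially contain text."""
    boxes = []
    for r, line in enumerate(image):
        start = None
        # The appended space is a sentinel that closes a run ending at the row edge.
        for c, ch in enumerate(line + " "):
            if ch != " " and start is None:
                start = c
            elif ch == " " and start is not None:
                boxes.append(Box(r, start, c))
                start = None
    return boxes


def toy_recognize(image: Image, box: Box) -> str:
    """Step 2 stand-in: transcribe the word inside one detected region."""
    return image[box.row][box.left:box.right]


def extract_text(
    image: Image,
    detect: Callable[[Image], List[Box]] = toy_detect,
    recognize: Callable[[Image, Box], str] = toy_recognize,
) -> List[Tuple[Box, str]]:
    """Run detection once, then recognition independently on each region."""
    return [(box, recognize(image, box)) for box in detect(image)]


if __name__ == "__main__":
    sign = ["  STOP   ", "", " OPEN 24H"]
    for box, word in extract_text(sign):
        print(box, word)
```

Because the two stages only communicate through a list of boxes, either one can be swapped out or retrained without touching the other, which is one plausible reason to keep the steps independent.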

The system is also being used to improve the accuracy of classification of photos and video in users’ News Feeds in order to prioritize more personalized content.

Yet Facebook admits that Rosetta is hardly a perfect system. Among other issues, extracting text efficiently from videos remains a work in progress, and Facebook continues to experiment with different solutions.

With more than 2 billion users worldwide, the sheer number of languages that appear on Facebook and Instagram presents another significant challenge.

By its own admission, Facebook has been struggling to suppress the spread of inappropriate content -- from hate speech and threats of violence to disinformation and “fake news” -- across its immense platform.

In one recent instance, the tech titan admitted it had not acted quickly enough to stop the spread of ethnic hate and violence across Myanmar.

Regarding Facebook’s content moderation technology, Guy Rosen, vice president, product management, recently conceded: “Our technology still doesn’t work that well, so it needs to be checked by our review teams.”

In the first quarter of the year, Facebook said it removed roughly 2.5 million pieces of hate speech -- only 38% of which was flagged by automated systems.