Brand safety is increasingly denying brands and advertisers an opportunity to target the exact audiences that they want to reach, according to new research published by technology platform CHEQ.

"We knew safe content was getting

wrongfully blocked by keyword blacklists, but didn't imagine it would be as high as 57%," says Guy Tytunovich, founder/CEO, CHEQ.

This keyword-blacklist practice makes some content that poses no brand safety threat unmonetizable for publishers. More than nine in 10 publishers (93%) claim brand safety solutions are hurting their revenues through over-blocking.

One publisher explained: “We have had times in the past when block rates are high and the clients have turned around and said they are not going to rebook, or where we have had to refund them.”

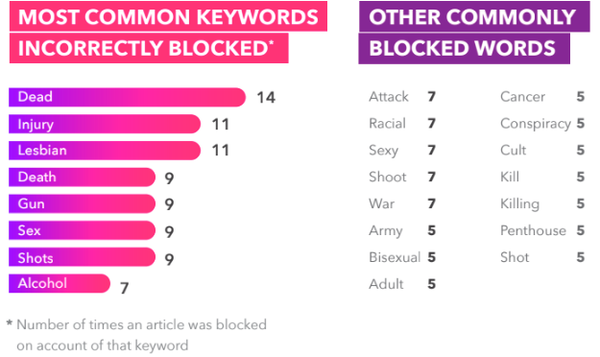

The keywords most often incorrectly blocked on brand safety grounds are variations of “dead,” with CHEQ finding that 18% of stories are blocked from receiving revenue after using that term. While death may have some negative connotations, potential advertisers were blocked at the New York Times when Woodstock festival organizers declared plans to revive the event “dead.” Also blocked: any article that mentioned rock band The Grateful “Dead.”

One of the most prevalent forms of blocking happens around entertainment. Roughly two-thirds of content relating to movies and TV (65%) was flagged in CHEQ's analysis. One notable example: Emmy-nominated "Killing Eve," which was blocked three times. Even Tom Cruise's return in "Top Gun" was blocked twice (for "gun"). Disney found itself in the crosshairs as "The Lion King" was blocked for "death."

The issue has wider implications, as keyword-based brand safety platforms are hurting minority voices, such as LGBTQ communities, the CHEQ report states. One of the more surprising findings was that LGBTQ content is becoming almost unmonetizable, with 73% being blocked, says Tytunovich.

"We believe marketers should take a stand against keyword blacklists," he says.

"This will inevitably put pressure on more ad-verification companies to develop better technological solutions which can enforce brand safety without limiting reach, over-blocking safe content and

damaging entire commiunities, as is the case with the LGBTQ content blocking," says Tytunovich.

AI is one key technology that can understand, with a human degree of accuracy, the exact meaning of online content without resorting to keywords, according to the report. "In milliseconds, AI can confirm with human levels of accuracy what a piece of content is, and is not," Tytunovich says.