A common trope among mobilistas in recent years (yours truly included) has been that mobile technology forces advertisers and media to consider a wider set of user “moods and modes” than previous generations of media ever imagined. One consequence of untethering media from predictable circumstances is that both ads and content have to anticipate being consumed in a much broader range of circumstances, by users who are in different modes of use. Apple is taking this seriously enough to file a patent application for methods of targeting user “moods.”


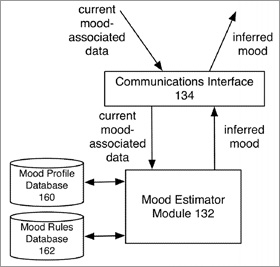

I usually don't put too much stock in patent stories, since so many applications lie dormant after being filed just to cover all bases. But this one is fascinating. The application, submitted by Apple this week (first reported by Apple Insider), is entitled “Inferring User Mood Based On User And Group Characteristic Data.” Drill through the weird patent-speak and Apple is describing a technique for inferring what it loosely calls “mood” from a lot of historical and contextual data points, and then targeting content (presumably ads too) on that basis. Basically, Apple is proposing to create a “mood profile” for each user that serves as a baseline against which “mood-associated data” can be compared to infer the mood the user is in at that moment.
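To make the baseline idea concrete, here is a minimal sketch of one generic way such a comparison could work. It is not Apple's method; nothing quoted from the application spells out an algorithm. It assumes a per-user history of readings for each signal, measures how many standard deviations a new reading sits from that user's own average (a plain z-score), and applies a toy rule. The names `MoodProfile` and `infer_mood`, the signals `heart_rate` and `taps_per_min`, the 1.5 threshold, and the mood labels are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class MoodProfile:
    """Hypothetical per-user baseline: historical readings for each signal."""
    history: dict[str, list[float]]

    def deviation(self, feature: str, value: float) -> float:
        """Return how many standard deviations `value` lies from this user's baseline."""
        samples = self.history[feature]  # needs at least two samples for stdev
        sd = stdev(samples)
        return 0.0 if sd == 0 else (value - mean(samples)) / sd


def infer_mood(profile: MoodProfile, reading: dict[str, float],
               threshold: float = 1.5) -> str:
    """Toy rule (assumption): both signals well above baseline -> 'agitated',
    both well below -> 'relaxed', anything else -> 'neutral'."""
    hr = profile.deviation("heart_rate", reading["heart_rate"])
    taps = profile.deviation("taps_per_min", reading["taps_per_min"])
    if hr > threshold and taps > threshold:
        return "agitated"
    if hr < -threshold and taps < -threshold:
        return "relaxed"
    return "neutral"
```

For example, with a resting-heart-rate history of `[62, 65, 70, 68, 64]` and a tap-rate history of `[20, 25, 22, 18, 24]`, a reading of 95 bpm and 45 taps per minute is far above both baselines and comes back `"agitated"`. Comparing against each user's own history rather than a population average is the point of a personal baseline: the same 95 bpm might be unremarkable for someone else.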


Apple envisions a wide range of possible uses here, from pushing the right content to the user, to inviting them to engage with or accept certain content (which could be code for “ads”), to changing settings on the device itself based on inferred mood. The patent application states: “For example, a content delivery system can use the inferred mood to assign a user to a mood segment, prioritize targeted content packages assigned to a user, and/or select an item of invitational content based on a match between a mood tag and the user's inferred mood. Additional or alternative uses of the inferred mood are also possible. For example, the inferred mood can be used to enable, disable, set, and/or adjust features on a client device.”

The application does go on to include advertising as a possible part of a targeted content package.
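The “match between a mood tag and the user's inferred mood” step is easy to picture in code. The following is a minimal sketch under stated assumptions, not Apple's implementation: each content item (an ad or anything else) carries a single mood tag and a numeric priority, and the system picks the highest-priority item whose tag equals the inferred mood. `ContentItem`, `select_content`, the tags, and the priority scheme are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ContentItem:
    """Hypothetical piece of invitational content carrying one mood tag."""
    item_id: str
    mood_tag: str
    priority: int  # assumption: higher values are served first


def select_content(items: list[ContentItem], inferred_mood: str) -> Optional[ContentItem]:
    """Return the highest-priority item tagged with the inferred mood, or None."""
    matches = [item for item in items if item.mood_tag == inferred_mood]
    return max(matches, key=lambda item: item.priority, default=None)
```

With a catalog of a spa ad tagged `"relaxed"` (priority 2), an energy-drink ad tagged `"agitated"` (priority 1), and a calm playlist tagged `"relaxed"` (priority 5), a user inferred to be relaxed would get the playlist, while a mood with no tagged content returns `None`, leaving the system free to fall back to untargeted content.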

But it is in defining the data points that constitute “mood” that things get really interesting. I am going to quote this section in its entirety because of the sheer number of elements Apple is considering measuring here. It is staggering.

“For example, mood-associated characteristics can be physical characteristics, behavioral characteristics, and/or spatial-temporal characteristics. Mood-associated physical characteristics can include heart rate; blood pressure; adrenaline level; perspiration rate; body temperature; vocal expression, e.g., voice level, voice pattern, voice stress, etc.; movement characteristics; facial expression; etc. Mood-associated behavioral characteristics can include sequence of content consumed, e.g. sequence of applications launched, rate at which the user changed applications, etc.; social networking activities, e.g., likes and/or comments on social media; user interface (UI) actions, e.g. rate of clicking, pressure applied to a touchscreen, etc.; and/or emotional response to previously served targeted content. Mood-associated spatial-temporal characteristics can include location, date, day, time, and/or daypart. The mood-associated characteristics can also include data regarding consumed content, such as music genre, application category, ESRB and/or MPAA rating, consumption time of day, consumption location, subject matter of the content, etc. In some cases, a user terminal 102 can be equipped with hardware and/or software that facilitates the collection of mood-associated characteristic data. For example, a user terminal 102 can include a sensor for detecting a user's heart rate or blood pressure. In another example, a user terminal 102 can include a camera and software that performs facial recognition to detect a user's facial expressions.”

No joke. They are talking about targeting by blood pressure, perspiration rates, emotional response to content, etc.

Clearly, they are considering devices that make intimate physical contact with the user and feed the most detailed user data imaginable into a targeting engine.