Russian disinformation operatives placed about 3,000 paid ads on Facebook over the past two years, according to findings of an investigation released Wednesday afternoon by the social network.

“In reviewing the ads buys, we have found approximately $100,000 in ad spending from June of 2015 to May of 2017 — associated with roughly 3,000 ads — that was connected to about 470 inauthentic accounts and pages in violation of our policies,” Facebook Chief Security Officer Alex Stamos wrote in a post on Facebook’s corporate news site.

“Our analysis suggests these accounts and pages were affiliated with one another and likely operated out of Russia,” he continued, adding that the use of inauthentic accounts violates Facebook’s policies and that the company has shut down the accounts and pages it was able to identify.

Stamos said the probe is part of an ongoing investigation that

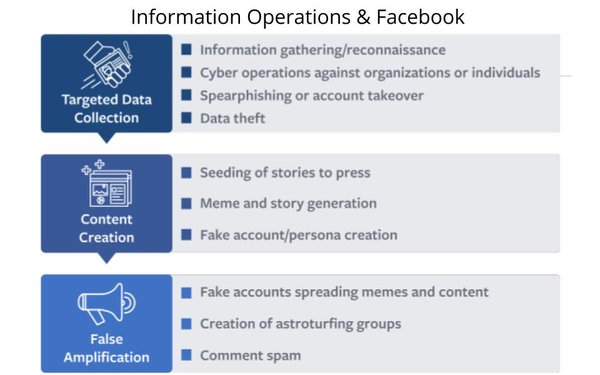

follows the role Facebook played in Russia’s active measures leading up to and following the 2016 Presidential campaign. In April, Facebook published a white paper outlining what it understood

at the time about “organized attempts to misuse” its platform.


Stamos said Facebook has already shared its findings with U.S. authorities, which have been investigating a wide range of active measures by Russia to spread propaganda and disinformation, something counterintelligence experts describe as a form of “hybrid warfare.”

Stamos said Facebook’s probe “looked for ads that might have originated in Russia — even those with very weak signals of a connection and not associated with any known organized effort. This was a broad search, including, for instance, ads bought from accounts with US IP addresses but with the language set to Russian — even though they didn’t necessarily violate any policy or law. In this part of our review, we found approximately $50,000 in potentially politically related ad spending on roughly 2,200 ads.”

Stamos said highlights of the findings include:

- The vast majority of ads run by these accounts didn’t specifically reference the US presidential election, voting or a particular candidate.

- Rather, the ads and accounts appeared to focus on amplifying divisive social and political messages across the ideological spectrum, touching on topics from LGBT matters to race issues to immigration to gun rights.

- About one-quarter of these ads were geographically targeted, and of those, more ran in 2015 than 2016.

- The behavior displayed by these accounts to amplify divisive messages was consistent with the techniques mentioned in the white paper we released in April about information operations.

Stamos said Facebook is updating its automated systems to help weed out inauthentic activity, and outlined the following steps:

- applying machine learning to help limit spam and reduce the posts people see that link to low-quality web pages;