Did personalization create bias?

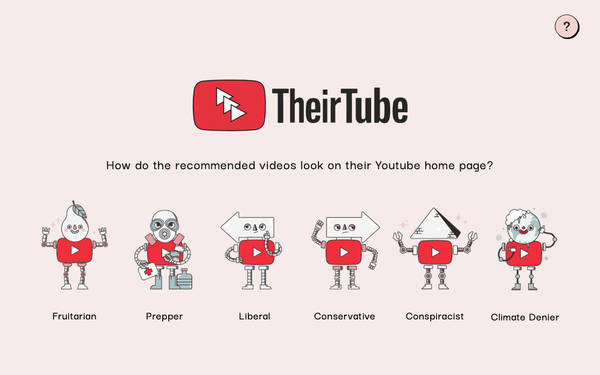

It's clear that search engines have bias, from environmental to scientific and political. Now a project from browser maker Mozilla details just how much search engines and recommendation algorithms can sway opinion.

YouTube’s recommendation algorithm accounts for nearly three-quarters of all videos viewed on the site, trapping people into searching and reading information that is specific to their world views.

The Mozilla-funded project TheirTube now gives people an opportunity to experience what YouTube recommends for friends and family who have different perspectives on politics, climate change, and even seemingly innocuous subjects like diets.

TheirTube was developed by Amsterdam-based designer Tomo Kihara with a grant from Mozilla’s Creative Media Awards.

The awards support projects exploring how AI is reshaping society in good and bad ways. Interviews with real YouTube users who experienced “recommendation bubbles” also helped to create the project.

Six YouTube accounts were created to simulate the interviewees’ subscriptions and viewing habits. The accounts include Fruitarian, Prepper, Liberal, Conservative, Conspiracist, and Climate Denier.

The project builds on Mozilla’s efforts to pressure YouTube to become more transparent about its recommendation algorithm.

In August 2019, Mozilla wrote YouTube a letter raising concerns about harmful bias and urging the company to work openly with independent researchers to understand the scale of the problem. The two companies met about a month later. YouTube execs acknowledged the problem and explained how they would fix it, including removing content that violates the platform's community guidelines and reducing recommendations of "borderline" content.

Not enough was done to stop the problem, according to Mozilla, so in October 2019 the company published YouTube users' #YouTubeRegrets, a series of videos that the company says were triggered by the bizarre and dangerous recommendations of the platform's algorithm, from child exploitation to radicalization.