Microsoft expanded on its Bing Search generative AI (GAI) and Deep Search features on Tuesday -- delving into emotions and informational structures such as those related to how-to and click-to-do queries. The ability to make purchases from retailers is on the product road map.

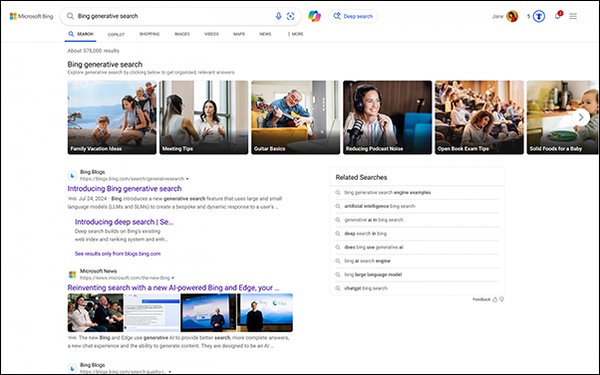

Bing has optimized search results, as well as how those results appear in the layout. Users can explore capabilities of Bing generative search in the U.S. by typing “Bing generative search” into the search bar. A carousel of queries enables users to select and demo the features.


The features are supported by a mix of AI models. Bing Generative Search aggregates information from across the web to generate a summary in response to search queries. Users also have the option to dismiss the AI-generated summary from the search page and see traditional search results instead.

“Bing Generative Search goes beyond simply finding an answer,” Microsoft wrote in a blog post. “Instead, it understands the search query, reviews millions of sources of information, dynamically matches content, and generates search results on the fly.”

Microsoft acknowledges that the feature is still under development, but says it is launching this new experience so that users can more easily trigger Bing generative search for informational queries.

Microsoft AI CEO Mustafa Suleyman is overseeing the changes that give Copilot a voice, the ability to see, and advanced reasoning skills that in part use technology like OpenAI's Strawberry.

Suleyman -- who is a co-founder of DeepMind, acquired by Google in 2014 -- has a vision of what the future of AI looks like, and it’s not what most would imagine. The latest features announced today are the first step toward true AI agents.

He told Wired the first stage is AI processing the same information you process: seeing what you see, hearing what you hear, consuming the text that you consume.

The second phase is when AI has a long-term, persistent memory that creates a shared understanding over time.

The third stage is AI interacting with third parties by sending instructions and taking actions: to buy things, book things, plan a schedule. This capability is still in research and development and is being tested.

“It's a way off, but yes, we've closed the loop, we've done transactions,” he said. “The problem with this technology is that you can get it working 50, 60% of the time, but getting it to 90% reliability is a lot of effort.”

He admits to seeing demonstrations where the agent can independently make a purchase, but also some “car crash moments where it doesn't know what it's doing.”

Under Suleyman’s guidance, Microsoft has taken the first steps to add reasoning and vision capabilities to its AI Copilot models, making them available in beta within a new experimental site dubbed Copilot Labs.

The AI features will be made available to a small number of users. Those who trial them will provide feedback, so Microsoft can learn from it and apply those lessons to the products.