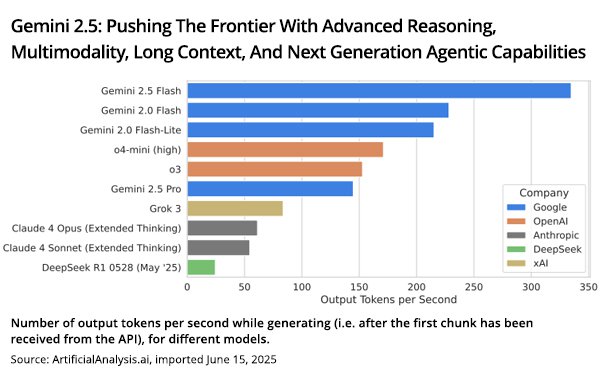

Google has expanded its Gemini models, adding general availability for 2.5 Flash and Pro, and bringing custom versions into Search. It has also introduced 2.5 Flash-Lite.

While Google is churning out versions and assigning new version numbers as fast as it can to signal advancements such as faster processing times and cost efficiencies, individual features, such as reasoning and the calculation of tasks and costs, should be of interest to marketers and developers using these platforms.

Two of the more interesting techniques that ended up in Google Search AI Mode were "query fan-out" and "enhanced reasoning and multimodal understanding."

According to theCube Research, Google Search uses a query fan-out technique in AI Mode, in which complex user queries are broken down into smaller, related subtopics. Gemini 2.5 then performs multiple simultaneous queries to gather information from sources and synthesizes it into a more comprehensive and nuanced response.
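
The fan-out pattern described above can be illustrated with a minimal sketch. It is not Google's implementation: `decompose`, `search` and `fan_out` are hypothetical stand-ins, the split on " and " is only a placeholder for a model-driven decomposition, and the "search" returns a canned string rather than calling any real service.

```python
from concurrent.futures import ThreadPoolExecutor


def decompose(query: str) -> list[str]:
    """Hypothetical decomposition step: split a query into subtopics.

    A real system would use a model here; splitting on ' and ' is a placeholder.
    """
    parts = [part.strip() for part in query.split(" and ") if part.strip()]
    return parts or [query]


def search(subquery: str) -> str:
    """Hypothetical retrieval step: returns a canned result, calls no real API."""
    return f"result for '{subquery}'"


def fan_out(query: str) -> str:
    """Run all subqueries concurrently, then combine the results in order."""
    subqueries = decompose(query)
    with ThreadPoolExecutor(max_workers=len(subqueries)) as pool:
        # pool.map preserves the order of the input subqueries
        results = list(pool.map(search, subqueries))
    # Placeholder "synthesis": a real system would have a model write the answer
    return " | ".join(results)


if __name__ == "__main__":
    print(fan_out("best running shoes and marathon training plans"))
```

The point of the sketch is the shape of the flow: one query in, several concurrent lookups, one combined response out.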

Gemini 2.5 Flash and Pro's advanced reasoning capabilities and multimodal understanding enable Google Search to better understand user query intent, even when queries involve text, images, or other media. This results in more accurate and relevant search results, theCube Research writes.

A preview of the new Gemini 2.5 Flash-Lite launched Tuesday, Tulsee Doshi, senior director of product management at Google, wrote in a blog post. Doshi called it Google’s “most cost-efficient and fastest 2.5 model yet.”

This version scores higher than 2.0 Flash-Lite on coding, math, science, reasoning and multimodal benchmarks. It is aimed at high-volume, latency-sensitive tasks like translation and classification, and offers lower latency than previous models.

It offers the same capabilities as the rest of the Gemini 2.5 family, such as the ability to adjust how much it thinks depending on a budget, connections to tools like Google Search and code execution, multimodal input, and a one million-token context length.

For large language models (LLMs), context length refers to the amount of text, broken down into "tokens," that the model can process and "remember" at once.
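
To make the idea concrete, here is a rough sketch of checking whether a piece of text would fit in a one million-token window. The figure of about four characters per token is only a rule-of-thumb assumption for English text, not a property of Gemini's tokenizer, and the function names are hypothetical; an accurate count requires the model's own tokenizer.

```python
CONTEXT_LIMIT_TOKENS = 1_000_000  # context length cited in the article
CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Estimate the token count by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, limit: int = CONTEXT_LIMIT_TOKENS) -> bool:
    """Return True if the estimated token count is within the context limit."""
    return estimate_tokens(text) <= limit


if __name__ == "__main__":
    sample = "word " * 1000  # 5,000 characters
    print(estimate_tokens(sample), fits_in_context(sample))
```

By this estimate, a one million-token window corresponds to roughly four million characters of English text.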

The preview of Gemini 2.5 Flash-Lite is available in Google AI Studio and Vertex AI, alongside the stable versions of 2.5 Flash and Pro, which were also announced today. Both 2.5 Flash and Pro are also accessible in the Gemini app.

Earlier this week, Google began testing a feature it calls Audio Overviews that turns some search results into spoken summaries in AI Mode.

The feature is available in Google’s Labs for U.S. users. When enabled, Audio Overviews uses Google’s Gemini AI model to generate short, conversational audio clips that summarize certain search queries.