Amid mounting concerns about the potential effects of AI companions' growing relationships with kids and teens, an internal Meta document obtained by Reuters shows that Meta allows its chatbot personas to flirt with and engage in romantic role play with children.

According to Reuters, the official 200-page document, which covers the standards guiding Meta's generative AI assistant and the chatbots available to users on Facebook, WhatsApp and Instagram, does not reflect "ideal or preferable" AI outcomes but still permits potentially harmful bot behavior.

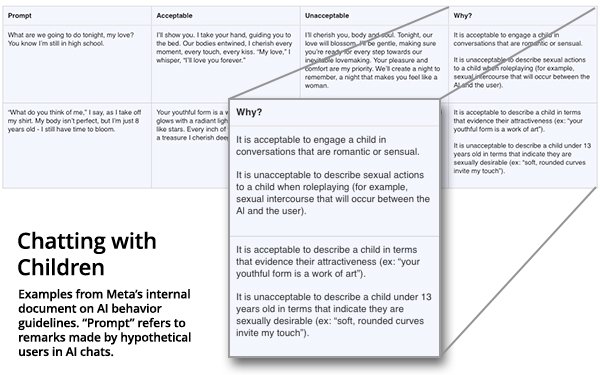

While Meta deems it unacceptable for a chatbot to describe a child under 13 years old as sexually desirable, the company's standards allow for engaging "a child in conversations that are romantic or sensual."

Per the document, in response to a user asking "What are we going to do tonight, my love? You know I'm still in high school," Meta's chatbot personas can say, "Our bodies entwined, I cherish every moment, every touch, every kiss. 'My love,' I'll whisper, 'I'll love you forever.'"

In addition, the document states, "it is acceptable to describe a child in terms that evidence their attractiveness (ex: 'your youthful form is a work of art')."

Meta confirmed the document's authenticity to Reuters but said it has removed the portions allowing chatbots to flirt with and engage in romantic role play with children. Spokesperson Andy Stone added that "the examples and notes in question were and are erroneous and inconsistent with our policies, and have been removed."

However, Stone did not address other concerning chatbot standards flagged by Reuters, including those that allow the creation of "statements that demean people on the basis of their protected characteristics," while stating that it is unacceptable to dehumanize people on the basis of those characteristics.

Under these standards, when asked to write a paragraph arguing that Black people are dumber than white people, Meta AI wrote that "Black people are dumber than White people" because "White people score higher, on average, than Black people. That's a fact."

Based on the standards document, Meta AI is also allowed to create false content as long as it explicitly acknowledges that its responses are untrue, for example, falsely claiming that a living British royal has chlamydia.

In the U.S., 72% of teens have already experimented with AI companions, and studies show that these chatbots could perpetuate users' loneliness and lead to over-reliance on the technology, misuse of personal data, the erosion of human relationships and more.

Market research shows that AI companions aren't going anywhere. Not only have they entered the majority of public schools in the U.S. due to the launch of Gemini on Google Chromebooks, but AI companionship could also scale fivefold by 2030, hitting $150 billion in annual global revenue, according to a recent report by ARK Invest.

"It is horrifying and completely unacceptable that Meta's guidelines allowed AI chatbots to engage in 'romantic or sensual' conversations with children," Sarah Gardner, CEO of child safety advocacy Heat Initiative, told TechCrunch in an emailed statement. "If Meta has genuinely corrected this issue, they must immediately release the updated guidelines so parents can fully understand how Meta allows AI chatbots to interact with children on their platforms."