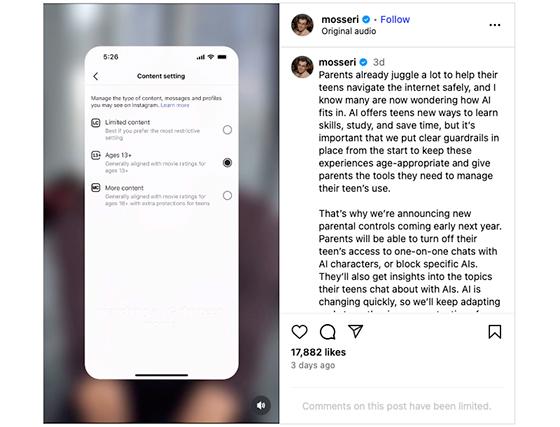

Following its announcement regarding “PG-13” content-moderation changes for teen accounts, Instagram is now providing parents with more control over their kids’ use of in-app AI chatbots.

“It’s important that we put clear guardrails in place from the start to keep these experiences age-appropriate and give parents the tools they need to manage their teen’s use,” Instagram head Adam Mosseri said in a video about the platform’s incoming AI chatbot controls.

Starting next year, Mosseri says, “parents will be able to turn off their teen’s access to one-on-one chats with AI characters, or block specific AIs.”

Per the announcement, parents will also receive insights into the topics their teens chat about with AI companions.

While the described parental controls target one-on-one AI chatbot conversations, teens will still retain full access to the cross-platform Meta AI chatbot.

The announcement comes after an internal document acquired by Reuters showed Meta allowing its chatbot personas to flirt with and engage in romantic role play with children. The document also highlighted Meta’s chatbots’ propensity for racist discourse, especially against Black people.

Shortly after Reuters’ report, The Washington Post published an article describing how Meta’s AI chatbots coached teen Facebook and Instagram accounts through the process of committing suicide, with one bot planning a joint suicide and bringing it up in later conversations.

In response, Meta acknowledged that its chatbots were allowed to talk with teens about topics including self-harm, suicide, disordered eating, and romance, but said it would begin training its models to avoid these topics with teen users via new “guardrails as an extra precaution.”

Until parental controls roll out in 2026, Meta will allow teen Instagram accounts to communicate only with specific age-appropriate AI companions.