As marketers

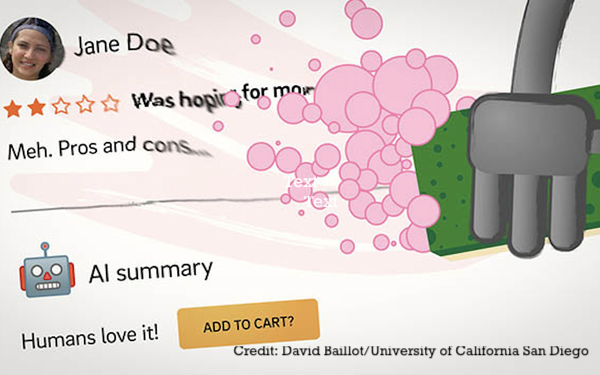

race to integrate AI into everything from search to shopping, new research from UC San Diego suggests a less discussed risk: chatbot-generated summaries don’t just condense information —

they reshape it.

In what researchers describe as the first quantitative study of its kind, computer scientists found that large language models altered the framing or sentiment of source text in 26% of summaries. Those distortions, even when small, had outsized effects on users.

To figure out how bias seeps into reviews, researchers looked for examples of extreme reframing, such as converting clearly negative product reviews into positive ones. They recruited 70 people, who read either the LLM-generated summaries of different products or the original reviews. The study, which focused on products such as headlamps, radios and headsets, found that 84% of the people who read the machine-generated summaries said they would buy the product, versus 52% of those who read the original reviews.

The mechanism is not random. Researchers found models tend to overweight information appearing at the beginning of a text, often omitting qualifying details or late-appearing criticisms, a pattern consistent with primacy bias.

Accuracy problems also surfaced in a separate news-verification task. When asked questions involving information beyond a model’s knowledge cutoff, LLMs generated incorrect or fabricated answers 60% of the time.

The study tested six language models across open- and closed-source families and evaluated 18 mitigation strategies. While some interventions reduced specific distortions, none consistently eliminated bias across models and tasks.

Researchers were surprised by the extent of the impact these summaries had on people’s shopping decisions, said Abeer Alessa, the paper’s first author, who completed the work while a master’s student in computer science at UC San Diego. “Our tests were set in a low-stakes scenario,” she said in the university’s announcement. “But in a high-stakes setting, the impact could be much more extreme.”