Bing and Google search results now serve real-time information in Meta AI, the assistant built on the company’s Llama 3 large language model (LLM). The assistant is being integrated into the search box of Instagram, Facebook, and WhatsApp.

A new partnership with Alphabet’s Google brings real-time search results into the assistant’s responses, supplementing an existing arrangement with Microsoft’s Bing.

In September 2023, Microsoft announced it was working with Meta to integrate its AI copilot experiences to help people navigate any task.

It’s not clear what the partnership agreement looks like, or whether Google signed a similar agreement to license real-time search content.

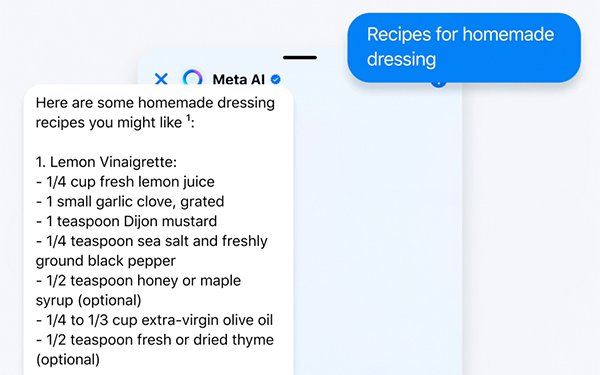

Meta determines which search engine’s results will serve as the answer to a query. What makes this search option so powerful across Meta’s platforms is the ability to access relevant information without leaving the app.

When planning a trip in a Messenger group chat, for example, users can ask the assistant to find flights and determine the least crowded time to travel. Users can also ask Meta AI for more information about content posted on the web.

For example, if someone sees a photo of the northern lights in Iceland, they can ask Meta AI what time of year is best to check out the aurora borealis.

In January, Meta CEO Mark Zuckerberg posted a video to Instagram outlining his goals as well as changes to the company’s organizational chart.

In another important change, Meta AI’s image generation can now create animations and render high-resolution images in real time as the user types.

Previously, Meta AI had only been available in the U.S. It has now begun rolling out in English to Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe. More countries and languages will become available soon.

Meta also introduced two open-source Llama 3 models that outside developers can use freely: one with 8 billion parameters and one with 70 billion. Both are accessible on all major cloud providers.

Amazon Web Services on Thursday announced that Meta Llama 3 is now available on Amazon SageMaker JumpStart; Meta’s Llama models have been available on Amazon Bedrock since last year.

In an email to MediaDailyNews, Amazon said Bedrock is the first and only managed service to make all of Anthropic’s Claude 3 models (Opus, Sonnet, and Haiku) generally available.

The pace of Llama 3’s development shows how quickly AI models are scaling. The largest version of Llama 2, released last year, had about 70 billion parameters. The next version of Llama 3 will have more than 400 billion, according to Zuckerberg.

To train the Llama 3 models, Meta combined three types of parallelization: data, model, and pipeline. Its most efficient implementation achieved a compute utilization of more than 400 TFLOPS per GPU when trained on 16K GPUs simultaneously, the company wrote in a blog post.
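To illustrate how those three types of parallelism fit together, here is a minimal Python sketch: the cluster’s GPU count is factored into a data-parallel, a pipeline-parallel, and a model-parallel degree, and each GPU gets one coordinate along each axis. The degrees used (8-way model, 16-stage pipeline, 128-way data) and the rank-ordering convention are hypothetical assumptions, not figures Meta published, and “16K” is read as 16,384. The last lines multiply the reported per-GPU utilization by the GPU count for a rough aggregate; because Meta said “more than 400 TFLOPS,” that result is a lower bound.

```python
# Hypothetical 3D-parallel layout: Meta did not publish its exact degrees.
WORLD_SIZE = 16 * 1024        # "16K GPUs", assumed to mean 16,384
TP = 8                        # model (tensor) parallel degree, assumed
PP = 16                       # pipeline parallel stages, assumed
DP = WORLD_SIZE // (TP * PP)  # remaining factor goes to data parallelism

# The three degrees must multiply out to the total GPU count.
assert TP * PP * DP == WORLD_SIZE


def coords(rank: int) -> tuple[int, int, int]:
    """Map a global GPU rank to (data, pipeline, model) indices.

    Assumed convention: the model-parallel index varies fastest,
    then the data-parallel index, then the pipeline stage.
    """
    tp = rank % TP
    dp = (rank // TP) % DP
    pp = rank // (TP * DP)
    return dp, pp, tp


# Rough aggregate throughput at the reported per-GPU utilization.
PER_GPU_TFLOPS = 400
total_tflops = PER_GPU_TFLOPS * WORLD_SIZE  # 1 exaFLOPS = 1e6 TFLOPS

if __name__ == "__main__":
    print(f"DP={DP}, PP={PP}, TP={TP}")
    print(f"aggregate > {total_tflops / 1e6:.2f} exaFLOPS")
    print(coords(0), coords(WORLD_SIZE - 1))
```

Because the degrees must multiply to the GPU count, raising one forces another down. As a general practice (not something the article states), model parallelism is often kept within a single server, where GPU-to-GPU links are fastest.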

Training runs were performed on two custom-built 24K-GPU clusters. The company also developed an advanced new training stack that automates error detection, handling, and maintenance.

Meta also built new scalable storage systems that reduce the overhead of checkpointing and rollback. Together, these improvements resulted in an overall effective training time of more than 95%.
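To make the 95% figure concrete, a minimal sketch assuming “effective training time” means the share of wall-clock time spent on useful training rather than on writing checkpoints, recovering from failures, or rolling back. The function name and the run numbers below are hypothetical, not Meta’s.

```python
def effective_training_time(total_hours: float,
                            checkpoint_hours: float,
                            recovery_hours: float) -> float:
    """Fraction of wall-clock time spent on useful training.

    Assumed definition: (total - overhead) / total, where overhead is
    time spent writing checkpoints plus time lost to failures and rollback.
    """
    if total_hours <= 0:
        raise ValueError("total_hours must be positive")
    productive = total_hours - checkpoint_hours - recovery_hours
    return productive / total_hours


if __name__ == "__main__":
    # Hypothetical run: 1,000 wall-clock hours, 20 hours of checkpoint
    # overhead, 25 hours lost to failure recovery and rollback.
    print(f"{effective_training_time(1000, 20, 25):.1%}")  # 95.5%
```

Shrinking either overhead term, for example with faster checkpoint storage or automated error handling, raises the ratio; that is the lever the storage and training-stack work targets.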