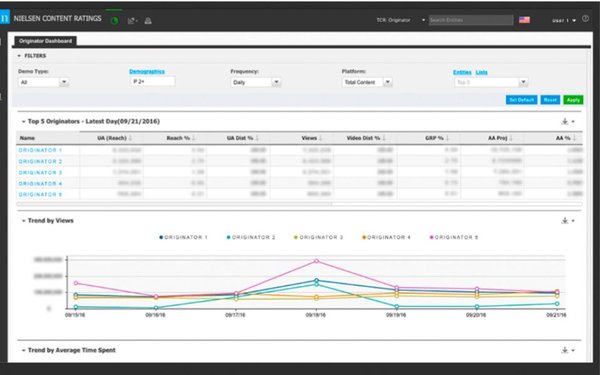

NBCU isn’t happy about the progress of Nielsen’s highly anticipated Total Content Ratings -- the TV research company’s effort to consolidate all traditional, digital, and other TV/video viewing under one measurement system.

In a letter obtained by Television News Daily, Linda Yaccarino, chairman of advertising sales and client partnerships at NBCUniversal, notes a number of problems.

Total Content Ratings are needed, she says, but adds: “TCR in its current form fails to deliver on this objective and does much more harm than good. Bottom line, it’s not ready for release.”

Yaccarino then lists a number of problems: TCR has “limited” participation from the industry, because many leading pay TV and digital operators have not yet incorporated TCR. Also, TCR uses “disparate digital data collection using a mix of panel census custom measurement (YouTube) that is not yet vetted.”

Other issues cited: VOD measurement comes from panels, not census-level measurement; TCR provides incomplete OTT viewership. In addition, TCR has “no quality control measure for digital fraud, viewability, or completion rate.”

NBCU also worries about using Facebook data for “deduplication of audiences that is also not tested.” It believes TCR fails to measure out-of-home viewing. Yaccarino also writes that TCR “lacks standardized reporting hierarchy across all measured entities.”

She concludes: “TCR currently is an incomplete, inconsistent measurement 'ranker' wherein the reported output is fundamentally inaccurate.”

Responding to NBCU, a Nielsen spokesman says: “Total Content Ratings is on schedule to syndicate data on March 1 [2017], at which time Nielsen clients will be able to use the data for external purposes.”

He adds that TCR is not in its final release form -- and should be considered that way. “Up until this time, the data being released to publishers and, subsequently, to agencies is for internal evaluation only.... We continue to enhance and refine our product with ongoing updates as we work with clients during this period of evaluation.

“Nielsen does not stipulate which measurements clients should enable, nor the order in which they should enable them. Total Audience Measurement is designed to provide media owners with utmost flexibility to enable the components based on their business priorities.”

Media executives have been hopeful that TCR would be ready for the new upfront advertising market in summer 2017.

Brian Wieser, senior research analyst at Pivotal Research Group, adds: “In whatever form it takes, we think Nielsen will still be regarded as the industry’s standard measure of video consumption, and that any flaws that may exist in the product at present will eventually be remedied.”

Wieser adds: “Ultimately, the perspectives that matter most here are not those of the networks, but of agencies and marketers, and so far as we are aware, few of those stakeholders have had significant exposure to the TCR product.”