At a time when the ad industry’s dependence on data analytics and measurement technologies appears to be growing, the vast majority of advertisers and agency executives say they have little faith in the metrics they use to plan and buy media.

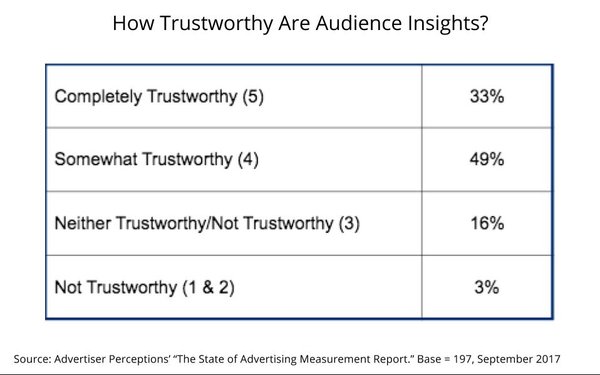

Only a third (33%) of ad execs say they consider their audience insights “completely trustworthy,” according to a survey of 197 advertiser and agency decision-makers conducted online by Advertiser Perceptions (AP). The study, “The State of Advertising Measurement Report,” is intended to serve as a benchmark of industry confidence in the data and measurement tools used to make media-buying decisions. AP plans to update it periodically, most likely once a year.

When AP drilled deeper, asking ad executives how trustworthy they consider their current “audience analytics/measurement,” confidence levels were even lower: only 29% said these are “completely trustworthy.”


Notably, only a third (34%) said they consider Nielsen’s “C3” and “C7” audience ratings -- currently deemed a “gold standard” of audience measurement by many in the industry -- “completely trustworthy.”

The AP report does not break out the responses of advertisers versus agency executives, but AP Vice President-Intelligence Justin Fromm says the results were “pretty consistent all the way through.”

“What we see really is the fact that as we move toward people-based advertising, there’s no question that the need for accurate measurement grows exponentially,” he says. He is referring to the “people-based” concept, which seeks to identify the actual people exposed to advertising by matching the “first-party” data that brands and agencies hold about them with the media bought to reach them.

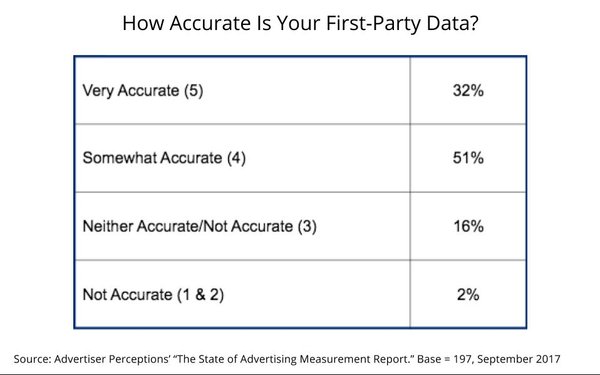

Ad executives said they were equally dubious about the accuracy of the first-party data they use to power people-based marketing and media buys: only 32% said this data is “very accurate.”

“What we’re seeing is that if advertisers don’t trust the data they have in hand, it’s not easy for them to make those decisions well,” Fromm says, adding that the lack of confidence may be stymying the industry’s adoption of more sophisticated audience analytics techniques and targeting.

Fromm acknowledges that the trustworthiness of audience measurement and analytics is not a new issue; it predates the recent concerns about the accountability of digital audience estimates. Still, he notes, the report does not seek to provide a “longitudinal” view looking back over time.

“There have always been questions,” he says, adding: “This is intended to be a benchmark to look at things going forward.”