Facebook CEO Mark Zuckerberg’s major rebrand

to Meta appears to have media attention from some of its recent negative news, but the team at media watchdog

Media Matters for America are keeping the heat on the recent Facebook Papers expose,

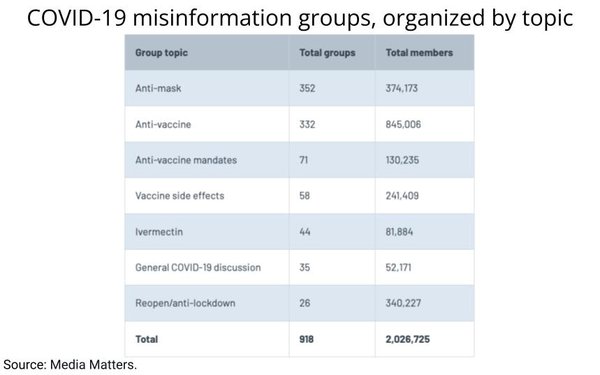

publishing an analysis of the 1,000-plus harmful groups built around COVID-19 and election misinformation still found operating on Facebook.

In a recent analysis, Kayla Gogarty, an associate research director at Media Matters, reported identifying more than 1,000 active Facebook groups, with about 2 million combined members, that were spreading COVID-19 or election misinformation.


“We've been doing this work for about a year now,” said Gogarty.

In July, for example, Media Matters found at least 284 public and private anti-vaccine Facebook groups, with 520,000 combined members, that were regularly spreading vaccine misinformation and/or conspiracy theories.

“A week after that reporting, nearly 85% of the groups were still active,” the article states.

By August, the researchers had identified 171 more Facebook groups spewing anti-mask rhetoric and misinformation, with 200,000 additional members.

In September came another 60 Facebook groups, with 65,000 total members, promoting ivermectin as a proper treatment for COVID-19. “These groups were filled with users asking where to acquire ivermectin,” the article reads. By October 10, 37 of the 60 groups were still active.

Although Gogarty made it clear that “it's extremely problematic that all of these groups are still active on the platform,” she said the finding, unfortunately, wasn’t surprising.

“It’s consistent with the pattern we’ve been seeing,” she said. “Facebook isn't very clear or transparent about what that threshold is for actually removing a group. They usually say that it has to have posts dedicated to misinformation, but a lot of times we don't see them removed.”

Although group administrators and moderators are the very users Facebook relies on to keep online communities in check, Gogarty found “a lot of administrators purposefully encouraging their members to use these evasion tactics ... telling other members to ‘use code words’ and ‘post in the comments’ of a regular post.”

“There's a lack of consistency across the board in terms of enforcement,” added Gogarty. “A lot of the policies aren't completely sufficient, but even the ones they do have they're not properly enforcing. If the groups are specifically saying ‘the comments are less moderated, post your more incendiary content in the comment instead,’ that seems to be a pretty clear violation of Facebook policies.”

As evidence of Facebook’s actual ability to act against harmful movements on the platform, Gogarty pointed to its treatment of the rising Patriot Party.

According to a recent report by The Wall Street Journal, Facebook took an “ad hoc” approach (as if “playing whack-a-mole”) when intervening with groups it deemed dangerous. To diminish the Patriot Party, Facebook deliberately made it harder for the movement’s organizers to share content and restricted the visibility of the groups involved. One document included in the Facebook Papers said, “We were able to nip terms like Patriot Party in the bud before mass adoption.”

With so many harmful groups still active on the platform, Gogarty believes Facebook’s “ad hoc” approach is itself evidence that the company does “have the tools necessary to deal with these problems; they are just choosing not to in a lot of these cases.”

A recent Time article reported on several comments included in the leaked documents that show employees’ attempts to crack down on these harmful groups: “why not disable commenting on posts altogether?”

On March 2, one Facebook employee wrote, “Very interested in your proposal to remove ALL in-line comments for vaccine posts as a stopgap solution until we can sufficiently detect vaccine hesitancy in comments to refine our removal.”

Instead of taking the suggestion seriously, Zuckerberg decided on March 15 that Facebook would label some vaccine posts as safe. According to Imran Ahmed, CEO of the Center for Countering Digital Hate, “the move allowed Facebook to continue to get high engagement, and ultimately profit, off anti-vaccine comments.”

“Obviously with COVID right now and vaccine hesitancy, it has harmful real-world impacts, which is a lot of times what Facebook says that they try to avoid,” said Gogarty. One example is the spread of violent and hateful content in India, Facebook's biggest market, which the Facebook Papers revealed.

“We want to make sure that people are aware that these groups still exist on the platform despite all of what Facebook has said that they're doing,” added Gogarty. “We know that they have such a large user base, which is part of why these problems are so huge. Facebook usually puts profit over solving any of these issues; we've seen that happen time and time again. I would really like them to take a hard look at some of these groups and enforce their policies and stop putting profit ahead of these issues.”