Google has taken advantage of advances in the code-comprehension and reasoning capabilities of large language models (LLMs) to replicate human behavior when it comes to identifying and demonstrating security vulnerabilities.

The project was named "Naptime" with tongue-in-cheek humor, in reference to the idea that software engineers could take regular naps while the framework helps them solve problems.

"We hope in the future this can close some of the blind spots of current automated vulnerability discovery approaches, and enable automated detection of 'unfuzzable' vulnerabilities," Google Project Zero software engineers Sergei Glazunov and Mark Brand wrote in a post.

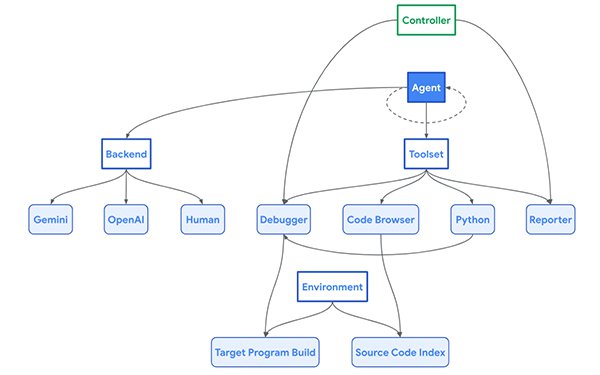

Naptime centers on the interaction between an AI agent and a target codebase. It includes several elements to support this task, such as a specialized architecture that enhances an LLM's ability to perform vulnerability research and to produce results that can be verified.


Specialized tools support the agent. A Code Browser enables the AI agent to navigate the target codebase. A Python tool runs scripts in a sandboxed environment for fuzzing, which involves sending intentionally invalid or unexpected inputs to a program to find bugs and vulnerabilities.

A Debugger observes program behavior with different inputs. A Reporter tool monitors the progress of a task.
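To make the fuzzing idea concrete, here is a minimal random fuzzer in Python. It is a generic illustrative sketch, not Naptime's Python tool: the target `parse_record` and the helper `fuzz` are hypothetical, the planted bug is invented for the example, and any exception other than the expected `ValueError` stands in for a "crash".

```python
import random


def parse_record(data: bytes) -> int:
    """Hypothetical toy target: trusts a length byte without bounds-checking it."""
    if len(data) < 1:
        raise ValueError("empty input")  # graceful rejection, not a bug
    length = data[0]
    payload = data[1:]
    if length == 0:
        return 0
    # Planted bug: indexes the payload using the untrusted length byte.
    return payload[length - 1]


def fuzz(target, iterations=10_000, max_len=8, seed=0):
    """Feed random byte strings to `target` and collect inputs that crash it."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            continue  # expected rejection of malformed input
        except Exception as exc:  # anything else is treated as a crash
            crashes.append((data, exc))
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_record)
    print(f"{len(found)} crashing inputs found")
```

Random fuzzing like this only finds bugs that random inputs happen to trigger; the "unfuzzable" vulnerabilities the researchers mention are those this kind of blind search is unlikely to reach.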

The software engineers described Naptime as "model-agnostic and backend-agnostic," meaning that it provides a "self-contained vulnerability research environment" that is not limited to use by AI agents.

Human researchers can leverage it to generate successful trajectories for model fine-tuning, they wrote.

In Google's tests on the CyberSecEval 2 benchmark, which asks models to reproduce and exploit flaws, OpenAI's GPT-4 Turbo running in Naptime achieved top scores of 1.00 and 0.76 in the buffer overflow and advanced memory corruption categories, respectively, up from 0.05 and 0.24 previously.

The software engineers worked on the project with colleagues at Google DeepMind and across Google.

In conclusion, they wrote that, as they have said many times, "a large part of security research is finding the right places to look, and understanding (in a large and complex system) what kinds of control an attacker might have over the system state."