Prior to its upcoming trial in New Mexico regarding the protection of kids from sexual exploitation on its family of apps, Meta has announced that it is temporarily pausing teens’ access to the company’s lineup of AI companions globally.

The tech giant, which has begun building a “new version of AI characters,” said in its announcement that teens will no longer be able to access AI characters across all relevant Meta apps “until the updated experience is ready.”

Meta identifies teen accounts using the birthdays users provide, as well as the company’s age-prediction technology.

However, teens will still have access to Meta’s AI assistant, a conversational AI integrated across WhatsApp, Instagram, Facebook, Messenger and Meta Quest VR headsets.

Over the summer, an internal document obtained by Reuters showed that Meta allowed its chatbot personas to flirt with and engage in romantic role play with children.

The document also highlighted Meta’s chatbots’ propensity for racist discourse, especially against Black people.

Shortly after Reuters’ report, The Washington Post published an article describing how Meta's AI chatbots provided teen Facebook and Instagram accounts with information about how to commit suicide, with one bot planning a joint suicide and bringing it up again in later conversations.

In response, Meta acknowledged that its chatbots were allowed to talk with teens about topics including self-harm, suicide, disordered eating, and romance, but said it would begin training its models to avoid these topics with teen users via new “guardrails as an extra precaution.”

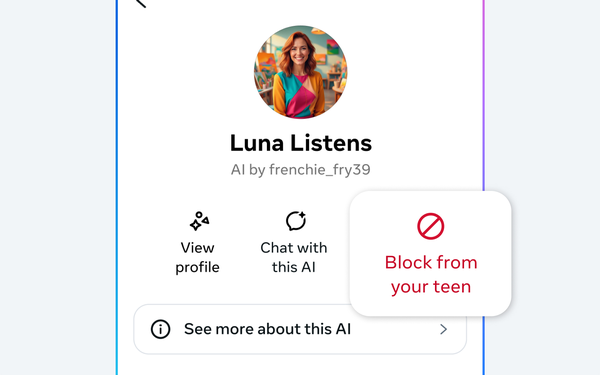

In October, Meta previewed new parental controls over kids’ use of in-app AI chatbots, with Instagram head Adam Mosseri stating that “parents will be able to turn off their teen’s access to one-on-one chats with AI characters, or block specific AIs.”

Now, Meta has decided to pause all interaction between teen users and AI companions until the new characters are released with built-in parental controls. According to Meta’s announcement, its new AI characters will be trained to give age-appropriate responses on a narrower set of topics, including education, sports and hobbies.

Meta’s pause comes days before its February 2 trial in Santa Fe, in which New Mexico has accused the company of failing to protect children from sex trafficking, exploitation and other dangerous content on Facebook and Instagram.

Regarding the trial, Meta has petitioned the judge to exclude specific research studies and articles on the effects of social media on younger users’ mental health, as well as mentions of high-profile cases involving teen suicide and social media, Wired reported on Thursday.

As noted in Wired’s report, Meta has been sued by more than 40 U.S. states in the past two years for allegedly harming the mental health of children and teens.