Meta may have ambitions of using the metaverse to become “the world’s discovery engine,” but Google is developing new generations of its core search app that will enable people to annotate the real world “any way and anywhere” they want, a member of its technical staff revealed during a keynote presentation at the Cannes Lions this week.

Noting that in the 24 years Google has been organizing the world’s information, its volume and variety have grown from about 25 million web pages to hundreds of billions, Pandu Nayak said that 15% of the search queries the company sees every day are ones it has never seen before, and that this has led it to develop new ways of enabling people to “find answers to previously unaskable questions.”

More importantly, he showed how new and soon-to-be-released versions of search queries labeled “multisearch” will fulfill Google’s “any way” vision.

To

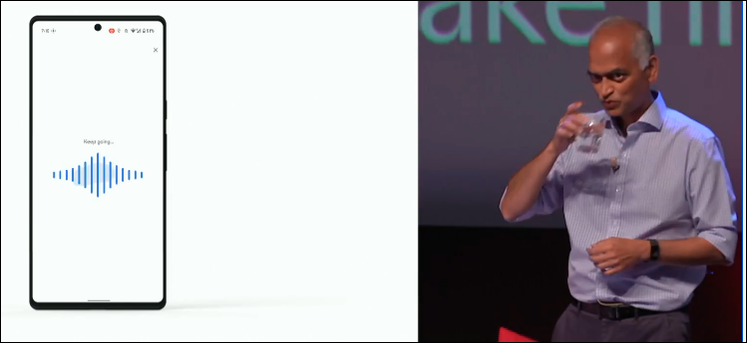

illustrate that, Nayak brought one of the new features – “hum-to-search” – to life on stage in Cannes’ Palais with a little help from his daughter.

Noting that he personally is unable to carry a tune, Nayak used a recording of his daughter humming a song to demonstrate it in real time. After his daughter hummed a few bars of a song, Google’s engine identified it as Billie Eilish’s “Ocean Eyes,” which then played over the Palais’ audio system and was accompanied by live musicians in attendance.

Nayak said the feature already is used more than a hundred million times every month, and is part of a series of new “multisearch” functions Google has been developing and refining, and expanding into multiple countries, languages and cultures to enable the “anywhere” vision for anyone.

He demonstrated how multisearch’s ability to combine Google Lens’ visual search capability with text queries is enabling new forms of product discovery, taking a photo of a red shirt worn by one of the Palais’ musicians and adding the text query “blue” to find versions in a color more to his liking.

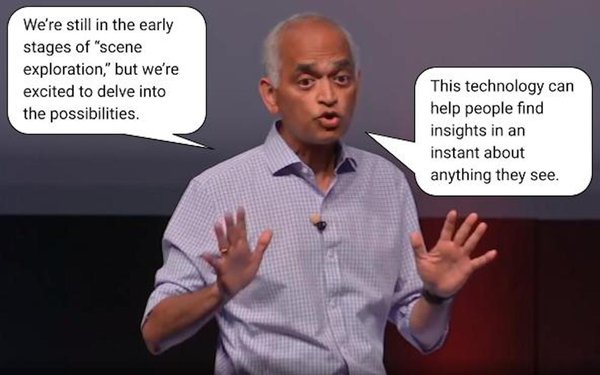

He then demonstrated Google’s latest multisearch function – dubbed “Search Scene” – which enables users to simply scan any scene in the real world, including a store shelf, and immediately annotate all of the visual objects in it, including products that could potentially be purchased in real time.

He described the features, which were launched in April in the U.S., as “one of our most significant updates in search in several years,” adding: “Now you can take a picture and ask a question at the same time.”

The next iteration, a “multisearch near me” feature enabling users to find what they’re looking for from local merchants, will be available globally later this year in English and will be updated with more languages over time, he said.

“It will work for everything from apparel to home goods to my personal favorite, finding a coveted dish at a local restaurant,” he said, adding that the new features, including “Search Scene,” will “help people find insights in an instant about anything they see.”