The cost of a "query" in an enterprise chat system usually gets bundled

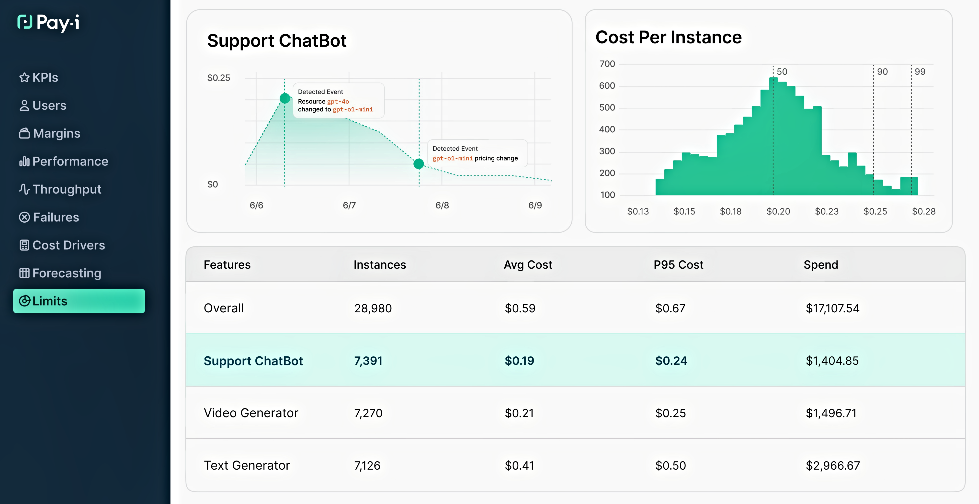

into the overall pricing model rather than being a per-question fee, but three former Microsoft employees have built technology that breaks out the numbers to determine budgets, performance, user

metrics, return on investments (ROIs) and more.

Pay-i, founded by David Tepper, Doron Holan and Erik Winters in 2023, stands at the intersection of finance and artificial intelligence (AI), helping companies understand how much the technology really costs them to use.

Now the startup, which launched in May, is tackling the question of how much a chat costs and whether it is worth it.


A single line of code can calculate margins or ROI, answering the financial questions companies ask. For now, enterprises are mostly using the technology in beta.

Developers insert a couple of extra lines of code to track how much each chatbot query and response costs.

Some enterprises use chatbots from more than one provider, such as OpenAI, Anthropic, Google or Microsoft.

“OpenAI will release a new update that literally overwrites the model you were using,” said Pay-i CEO Tepper.

“It's making new decisions; it's chatting differently to the end user.”

Pay-i can tell the business whether the new model performs better, becomes more or less expensive, runs faster or slower, or crashes more often. The technology helps the company understand costs, key performance indicators (KPIs) and what that is worth to the business, especially if it is using more than one generative AI (GAI) model.

Through code, sometimes a single line, Pay-i connects GAI use to specific business outcomes such as revenue growth, customer satisfaction and operational efficiency, while tracking spending and forecasting budgets.

Enterprises have been using the technology in beta. Meanwhile, for the past 11 months Pay-i has been working with Stanford on a problem that academia has not yet solved, Tepper said.

“Once that is solved in academia, then we'll be the first ones out with a product that can do that,” he said.

Tepper said the code will understand how clients intend to use GAI, and with which models, and “tell them, ‘hey, don't use Model X, use Model Y,’” because used that way it will cost less, run faster and be less prone to errors.

After getting the chance to work on an early version of ChatGPT, Tepper immediately realized the world would change. He teamed up with some friends to build apps, both to enjoy the experience and, hopefully, to make money.

This resulted in nine app prototypes, but none made financial sense, because the “unit of economics for delivering on any of these AI tools meant you couldn't ship an app for 99 cents and then hope to make money from it,” he said. “You couldn't back it by advertisements because they don't bring in anywhere near enough money for you to make a profit off of these things.”

After repeatedly running into that economics issue, the three co-founders decided to solve the problem themselves. It was one that every company would soon face.

Pay-i recently announced $4.9 million in seed funding to expand its operations and further develop the platform.

Tepper spent about 19 years at Microsoft and co-led Azure's internal GAI consumption strategy. His first GAI patent dates back to 2011, and he has briefed Fortune 500 boards, universities, members of Congress and UN delegations on AI economics.

He said OpenAI’s ChatGPT o3, one of the more iconic models, had been the most powerful until Google Gemini came along.

Tepper used ChatGPT o3 to demonstrate how the technology pulls in information and decides what to serve as results, but said the OpenAI model is no longer state of the art because the technology is moving so fast.

“Gemini from Google is state of the art,” he said, while using ChatGPT o3 to show how the technology analyzes different websites and pulls in data.

While at Microsoft, Tepper did some work on Microsoft Copilot.

“I can tell you why it's not that good,” he said. “It's really because Microsoft is utterly petrified of any kind of AI blowback” from the possibility of getting something wrong. He called the models “over-constrained.”