I'm not sure exactly when, but I'm pretty sure AI has already passed the Turing Test. The big question now is how that will test us as we increasingly reside in an AI-generated -- or augmented -- world.

Whenever I have an opportunity to speak to a legit expert on that subject, I usually ask the same question: How will we know when AIs are gaming us?

By that, I mean when humans can no longer distinguish between the AI interface and the foundational way AIs think and render output that leads us in directions we wouldn't otherwise be prone to go.

And I'm not talking about superintelligence, but about the baby-step progression from our earliest roots in machine learning to the current and not-too-distant future of AI interfaces and how they influence the ways we experience the world.

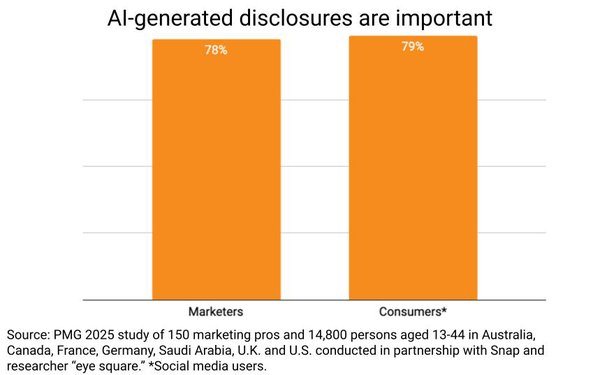

Some new research published by PMG and Snap late Tuesday morning suggests that's already happening with the vast majority of consumers exposed to AI interfaces, and that a similar percentage of both consumers and marketers are growing increasingly wary about the need to disclose if and when AI is in fact gaming us.

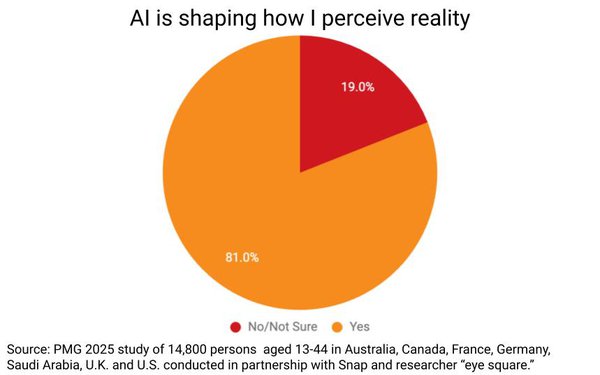

The study, which was conducted by researcher "eye square," surveyed 14,800 people and found 81% already believe AI is shaping how they "perceive reality."

On the bright side, the stat shows that a critical mass of humans has at least grown cognizant of that fact.

The study, which can be read in its entirety on Snap's blog, focuses mainly on the positive and negative lifts that occur when consumers are exposed to ads generated or augmented in some way by AI, which is something we will obviously need to pay more attention to as the art, craft and science of AI-influenced advertising continue to evolve.

Personally, I think we are very close to AI-generated advertising passing its own equivalent of the Turing Test, if it hasn't done so already. Most of the "uncanny valley" stuff has already been adjusted for, and the main issues come down to whether renderings create a sense of disbelief or skepticism, or make the hairs tingle on the back of your neck.

I, for one, have responded by becoming increasingly skeptical about the veracity of everything I now see rendered on a screen -- not just because I'm prone to paranoia, but because I'm trained as a journalist to be skeptical of things to begin with. I'm not sure average people are, so we're in a dangerous, slippery-slope period of digital content, in which I think it's very possible people will just stop believing in anything they see on a screen.

This, obviously, would not be a good thing for advertisers and agencies that depend on rendering messages on screens to influence how people feel about brands.

It's actually the same concern I raised during the earliest days of "native advertising" content -- not just that consumers wouldn't be able to tell the difference between objective news and information-based content vs. brand-created posers, but that once they couldn't, they would just stop believing anything they read.

I kind of feel that has already happened to some degree, including for reasons that have nothing to do with advertising and marketing, but native advertising certainly hasn't helped.

It's the same thing with AI, but maybe on steroids.

Anyway, the most important finding of the new PMG/Snap research is that both consumers and brands surveyed believe disclosures are imperative in an AI-generated world.

The problem, as we all already know, is that disclosure will be something championed by the best actors, and the reality of the world we now live in -- or at least the one we perceive we live in -- is that it is increasingly composed of bad actors, too. And I don't know what the solution is for that.