A new financial "risk" has begun showing up in the most recent annual reports and financial filings that some of the world's biggest advertisers have been making with the Securities & Exchange Commission, and it's giving some analysts pause.

Not

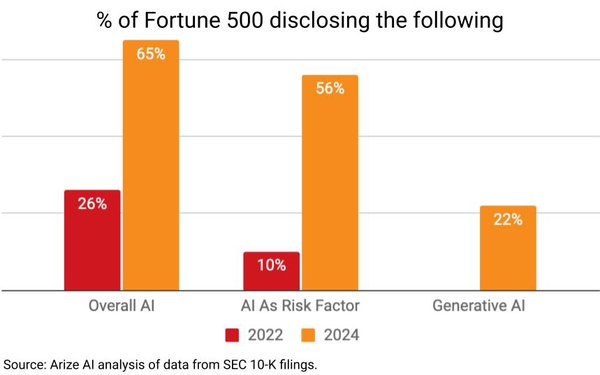

surprisingly, the "risk" is the very same one that has begun transforming big agencies and others: artificial intelligence. According to a new study published by Arize AI, a platform tracking public

disclosures by big businesses, 56% of Fortune 500 companies cited AI as financial risk in the past year. That's up from just 10% in 2022.

"For media companies, in particular, legal uncertainty

around intellectual property as it relates to generative AI is a commonly cited concern," explains Arize AI CEO Jason Lopatecki.

"Ultimately, we believe advertisers and media companies will

embrace and see more upside leveraging generative AI," he adds.


Arize AI evaluates a company's potential applications to help ensure that models are deployed in a way that ultimately benefits the business, while using guardrails to protect its interests and those of its clients.

Many believe that open-source projects can remove AI security risk, but Melissa Bonnick, executive director and head of programmatic at JPMorgan Chase & Co., does not share that view.

In a keynote presentation at MediaPost's Data & Programmatic Insider summit Thursday, Bonnick said JPMorgan does not use open-source products, but instead builds its code in-house so the company can be methodical about updates.

“Programmatic is called trading for a reason, so we have the ability to look at what our financial traders are doing to see how we can pivot that and use it for ourselves,” Bonnick said.

Frustrations arise from the effort to stay both “agile” and “safe.” Developers have a list of services they want to build with AI, but “we have to make sure to keep the firm safe,” she added.

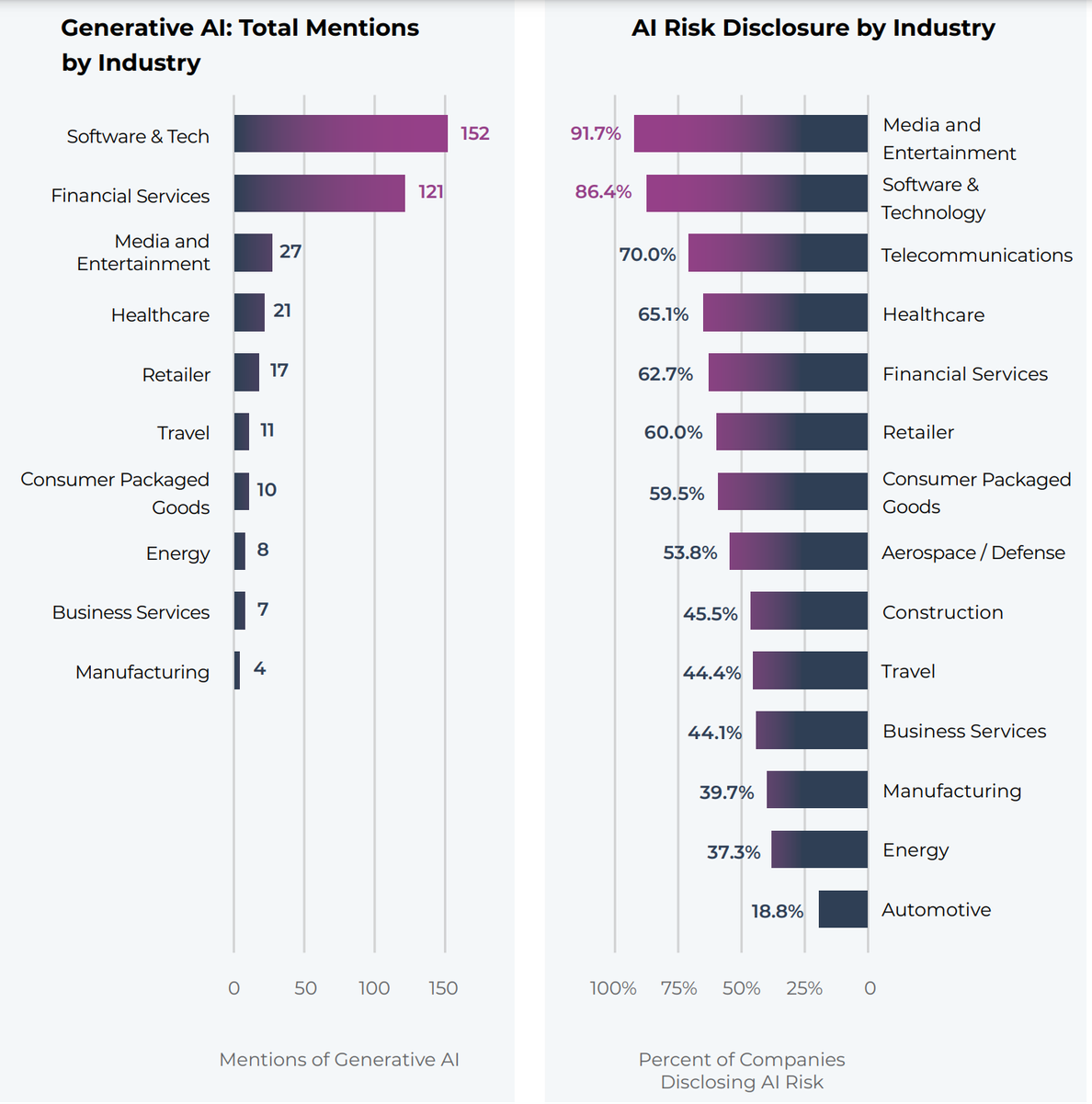

More than 90% of the largest U.S. media and entertainment companies and 86% of software and technology groups said AI systems pose a business risk, according to the report issued by Arize AI.

For example, Warner Bros. Discovery notes that "…new technologies such as generative AI and their impact on our intellectual property rights remain uncertain."

Disney warned that “rules governing new technology developments” such as generative AI (GAI) “remain unsettled” and could rattle its existing business model, including revenue streams from the use of its intellectual property and the way it creates entertainment products.

GAI also brings opportunities, but only 33 of the 108 companies that specifically discussed generative AI identified any.

Efficiencies, operational benefits and faster innovation are among the opportunities cited in their annual reports. Then there are reputational and operational concerns, such as becoming entangled in ethical issues around employment, privacy and human rights.

Risk has also surfaced in regulatory issues that are expected to increase costs. A recent KPMG survey of 225 senior business leaders found that 54% expect AI regulation to increase organizational costs.

The key areas of focus for risk mitigation are cybersecurity and data quality. Some 56% consider implementing risk mitigation to be highly significant for GAI. Some 29% cite data-privacy compliance, while 13% cite regulatory uncertainty and 11% cite a lack of security and safety.

GAI has also pushed the question of “risk” to the forefront of conversations about climate change, among partners and thinkers alike. Futurist Ray Kurzweil is the author of the nonfiction book The Singularity Is Nearer, published in June.

The book is a sequel to The Singularity Is Near: When Humans Transcend Biology, which was published in 2005.

Kurzweil takes a new perspective on humanity's advancement toward the singularity. He believes humans will enhance their abilities by merging with machines, and predicts that AI will become self-aware by 2045. He bases this prediction on the exponential growth of technology and describes how AI will leap from transforming the digital world to changing the physical world.

In Kurzweil's view, humanity will find a way to generate and store all the sustainable energy required to stop global warming, and to do so without being forced. He argues that this technological acceleration will help humanity find ways to rely on renewable sources by the early 2030s.

GAI energy consumption is expected to increase, and that could become one of the risks for advertisers as they try to attain carbon-neutral status. Efforts to meet that demand can be seen in Microsoft co-founder Bill Gates' financial backing of a new type of nuclear energy plant in Wyoming.

AI processing requires an enormous amount of energy. High-performance AI chips such as Nvidia's B100, for example, can consume 1,000 W, while its earlier A100 and H100 models consume 400 W and 700 W, respectively.

According to the Arize study of risk factors in SEC filings, only 30.6% of companies that mention GAI in their annual reports cited its benefits or said they use GAI outside of the risk-factor section.

Many enterprises err on the side of caution by mentioning even the remote possibility of AI risks for regulatory reasons, but in isolation such statements may not accurately reflect a company's overall vision, which creates an opportunity.

Source: Arize AI.