OpenAI released its ChatGPT autonomous agent in mid-July, capable of completing complex multi-step tasks using its own virtual computer environment.

Now one data platform provider has managed to identify it in the wild.

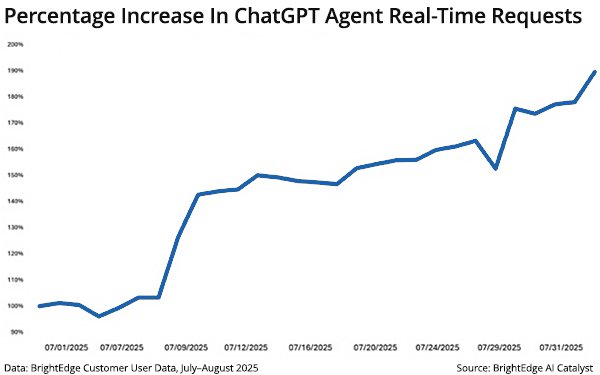

“That live user -- the agent -- is here and it's growing massively,” said BrightEdge CEO Jim Yu.

In addition, the traffic from the agent has doubled in the first month, he said.

ChatGPT’s agent already generates visits equal to roughly 33% of those from organic search users. Put another way, the agents visit brand websites about one-third as often as users arriving from organic search.

“That's a massive amount of activity that the agent is already doing right now,” Yu said.

Yu explained the implications for marketers and offered some advice. He said his conversations with CMOs in the past 18 months mostly centered on whether they should allow these AI bots to scan their websites and index the content, or block them.

“Not only do you not want to block them, you want to put out a welcome mat,” Yu said. “These agents are not like yesterday's bots.”
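
For site owners who want to check whether their current rules block or welcome the agent, a minimal sketch using Python's standard `urllib.robotparser` follows. It rests on several assumptions: the user-agent token `ChatGPT-User` is taken from OpenAI's public crawler documentation and is not stated in this form by Yu, the two robots.txt bodies and the `example.com` URLs are invented for illustration, and `agent_allowed` is a hypothetical helper. Whether a given agent honors robots.txt is up to its operator.

```python
from urllib.robotparser import RobotFileParser


def agent_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that blocks the agent entirely.
BLOCKING = """User-agent: ChatGPT-User
Disallow: /
"""

# Hypothetical "welcome mat": the agent may fetch everything,
# while other bots are kept out of /private/.
WELCOMING = """User-agent: ChatGPT-User
Allow: /

User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for name, rules in (("blocking", BLOCKING), ("welcoming", WELCOMING)):
        allowed = agent_allowed(rules, "ChatGPT-User", "https://example.com/menu")
        print(f"{name}: agent allowed = {allowed}")
```

In practice the text passed to `agent_allowed` would be a site's real robots.txt, and the token should be confirmed against the user-agent strings that actually appear in the server logs.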

These agents research in real time on behalf of an individual searching for information, as a human assistant might, only faster.

“You definitely don't want to block these things because, in the future, this is how people will have an AI assist shop for them, determine what to purchase, and where to go for lunch,” Yu said.

Agents will carry out activities such as browsing websites and content and comparing options. Marketers need to know what users want and make it easy for the agents to find the information.

“Unlike classic search bots, which are very resilient, meaning they come back in batch mode -- they're sophisticated. They render pages, understand code and JavaScript, even read PDFs. These AI bots are very performance sensitive,” Yu said.

AI agents do not render code, so they don't understand JavaScript. They are performance sensitive, meaning they depend heavily on speed, responsiveness, and efficiency to function correctly or deliver a satisfactory user experience.

If a platform or a website doesn’t return results, the agent moves on to the next site because it's providing information to the user in real time.

The window in which the agent must respond is much shorter than for traditional search models, which build an index and serve results from it.

Yu said this responsiveness requires the next generation of analytics. These agent bots do not interpret JavaScript, which means the JavaScript-based analytics infrastructure of the past couple of decades is never triggered. The data is invisible to the current generation of analytics.

A new form of analytics is required that can identify the agent. The platform analyzes the log files because that is where marketers can see these bots.

Yu said the agents are identified in the logs as a "ChatGPT user." That specific user agent is the ChatGPT live agent visiting the website on behalf of a user.

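As a rough illustration of the log-file approach Yu describes, the sketch below counts requests whose user-agent string contains the agent's token. It is not BrightEdge's method. It assumes the server writes the common "combined" access-log format, that the token appears as `ChatGPT-User` (the form in OpenAI's public crawler documentation, while Yu's quote gives it as "ChatGPT user"), and that the sample log lines, which are invented here, resemble real traffic. `count_agent_hits` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumed user-agent token; confirm it against your own logs.
AGENT_TOKEN = "ChatGPT-User"

# Combined log format:
# ip ident user [time] "METHOD path PROTO" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def count_agent_hits(lines, token=AGENT_TOKEN):
    """Count requests per path whose user-agent contains the token."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and token.lower() in match.group("agent").lower():
            hits[match.group("path")] += 1
    return hits


# Invented sample lines for illustration only; not real traffic.
SAMPLE_LOG = [
    '203.0.113.7 - - [01/Jan/2025:10:01:02 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
    '198.51.100.4 - - [01/Jan/2025:10:01:05 +0000] "GET /pricing HTTP/1.1" 200 5120 "https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.9 - - [01/Jan/2025:10:02:11 +0000] "GET /menu HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
    '203.0.113.7 - - [01/Jan/2025:10:03:40 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
]

if __name__ == "__main__":
    for path, count in count_agent_hits(SAMPLE_LOG).most_common():
        print(path, count)
```

Because the count comes from server logs rather than a JavaScript tag, it captures visits that page-tag analytics would miss. Comparing it with organic search sessions over the same period would give a ratio like the one-third figure cited above.
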
OpenAI also has a separate crawler. Rather than relying only on data from Bing, it runs its own ChatGPT web crawler. This is different from the agent.

Yu said that when analyzing the data, he can see that the web crawling also now matches Google's desktop crawling.

It has become very clear that OpenAI has been building its own index from web crawling as well, Yu said. This is different from Common Crawl, an open index that is available to everyone.

OpenAI's ChatGPT is directly doing live crawls. That goes beyond the agent piece; the two are very different aspects.