AI agents are increasingly becoming the operational layer that connects businesses and consumers. That is why Google is working on a real-time streaming architecture as the next step for multi-agent systems.

This will allow autonomous AI agents to act as personal shoppers on behalf of consumers, and to carry out business tasks and transactions.

Some of the hurdles Google is addressing include handling and storing a continuous flow of data in real time, rather than in sequential turns. Taking turns means that one system sends data and the other responds, and there is no time for that in an autonomous transaction.

Bidirectional streaming can help to solve this latency problem and others, says Hangfei Lin, Google tech lead for agentic systems.

The company's developers wrote in a blog post that Google is building an architecture that allows two companies to transmit data continuously and independently of each other, so that their systems can communicate between two connected endpoints -- typically a client and a server. This type of technology has been used for live chat, video conferencing, collaborative tools, and IoT devices.
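The contrast with turn-taking can be illustrated with a minimal sketch. It is not Google's ADK code: it uses only Python's standard `asyncio` library, and every name in it (`sender`, `receiver`, `run_duplex`, the two queues) is a hypothetical stand-in. Each direction gets its own channel, so neither side waits for a reply before sending again.

```python
import asyncio


async def sender(name, outbox, messages):
    """Push messages without waiting for a reply to each one."""
    for msg in messages:
        await outbox.put(f"{name}: {msg}")
        await asyncio.sleep(0)  # yield so the other side can run
    await outbox.put(None)  # sentinel: this direction is finished


async def receiver(inbox, log):
    """Consume messages as they arrive until the sentinel shows up."""
    while (msg := await inbox.get()) is not None:
        log.append(msg)


async def run_duplex():
    # One queue per direction (an assumption standing in for a real
    # network connection between a client and a server).
    client_to_server = asyncio.Queue()
    server_to_client = asyncio.Queue()
    server_log, client_log = [], []
    await asyncio.gather(
        sender("client", client_to_server, ["want a dress", "make it blue"]),
        sender("server", server_to_client, ["searching", "3 matches so far"]),
        receiver(client_to_server, server_log),
        receiver(server_to_client, client_log),
    )
    return server_log, client_log


if __name__ == "__main__":
    print(asyncio.run(run_duplex()))
```

In a turn-based design, the second client message could not be sent until the server had answered the first. Here both sides send on their own schedule.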

Now Google will use bidirectional streaming for AI agents, so that agents can carry out online tasks or make purchases for people without human intervention.

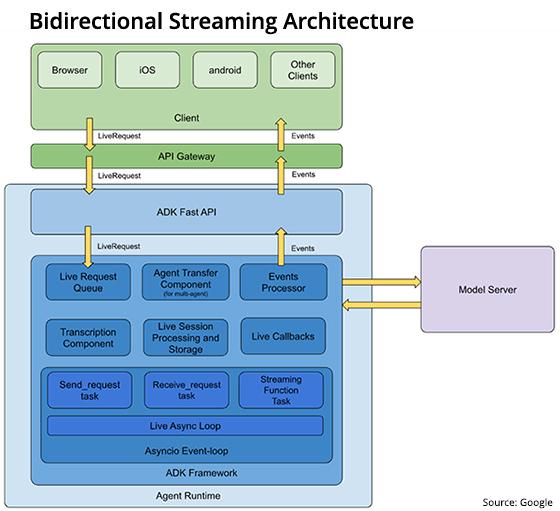

A post from Thursday details how the Agent Development Kit (ADK) is designed to address this issue through what engineers call a "streaming native-first approach."

Companies like Microsoft, Adobe, Salesforce and Oracle are developing agents for business software, and large corporations like eBay and Uber are using them to streamline marketing campaigns. Google is working to make autonomous transactions possible.

The vision is to change from "turn-based transactions to a continuous bidirectional stream." This will enable Google and others to build a "new class of agent capabilities that feel more like a collaborative partner than a simple tool."

In a streaming architecture, the agent processes information and acts while the user is still providing input.

For example, the user can tell the agent they want a blue dress and then, in the middle of the transaction, ask it to change the color to pink. The agent can instantly stop its current action to address the new input.
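The blue-to-pink change can be sketched as task cancellation. This is a minimal illustration using only Python's standard `asyncio` library, not the ADK's actual mechanism. The functions `search_dresses`, `agent`, and `demo` are hypothetical, and the short sleep stands in for a slow agent action.

```python
import asyncio


async def search_dresses(color, results):
    """Hypothetical stand-in for a slow agent action."""
    await asyncio.sleep(0.2)  # simulated long-running work
    results.append(f"found {color} dresses")


async def agent(inputs, results):
    """Start work on each new input, cancelling whatever is in flight."""
    current = None
    while (color := await inputs.get()) is not None:
        if current is not None and not current.done():
            current.cancel()  # interrupt the now-stale action
        current = asyncio.create_task(search_dresses(color, results))
    if current is not None:
        await current  # let the most recent request finish


async def demo():
    inputs, results = asyncio.Queue(), []
    agent_task = asyncio.create_task(agent(inputs, results))
    await inputs.put("blue")
    await asyncio.sleep(0.01)  # the user changes their mind mid-action
    await inputs.put("pink")
    await inputs.put(None)  # sentinel: end of user input
    await agent_task
    return results


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the agent keeps listening while the search runs, the second input arrives before the first search completes, and only the pink search produces a result.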

Tools also will no longer be limited to one request-and-response per cycle. A user can redefine the request in the background while it is being processed and information is being sent back.

A multimodal streaming architecture that accepts all types of input, from photos to text, supports this by natively processing continuous, parallel streams as a single context.

This architectural approach is "what unlocks true environmental and situational awareness, allowing the agent to react to its surroundings in real-time without manual synchronization," Lin wrote.

Challenges still remain, and the most fundamental is that the concept of taking a "turn" disappears.

In a continuous stream, developers must design new tools to segment the stream into logical events for debugging, analysis, and resuming conversations.
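One simple way to picture such segmentation is to split the stream wherever the input pauses. The sketch below assumes that pause-based heuristic purely for illustration. The article does not say how Google segments streams, and `Segment`, `segment_stream`, and `max_gap` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One logical event: a run of chunks with no long pause between them."""
    start: float
    end: float
    chunks: list = field(default_factory=list)


def segment_stream(chunks, max_gap=1.0):
    """Group (timestamp, payload) pairs into segments.

    A new segment starts whenever the gap since the previous chunk
    exceeds max_gap seconds (an assumed heuristic, not a known ADK rule).
    """
    segments = []
    for ts, payload in chunks:
        if segments and ts - segments[-1].end <= max_gap:
            segments[-1].end = ts
            segments[-1].chunks.append(payload)
        else:
            segments.append(Segment(start=ts, end=ts, chunks=[payload]))
    return segments


if __name__ == "__main__":
    stream = [(0.0, "I want"), (0.4, "a blue dress"),
              (3.0, "actually"), (3.2, "make it pink")]
    for seg in segment_stream(stream):
        print(seg.start, seg.end, seg.chunks)
```

Each segment could then be logged, replayed, or used as a resume point, roles that the boundaries between turns play in a turn-based system.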

Developers must also determine how a continuous stream of context can be stored, packaged, and transferred to another agent when there is no clear "end of turn" -- the signal that triggers the handoff of data and process.

In a turn-based system, when the turn ends, so does the process, which is then handed off to the next technology or process.

Imagine a system not knowing when the next process has its turn. This is what developers are dealing with, but this is how advertising is becoming agentic.