The word “slop” has come a long way since it first appeared in the 1700s, when it meant soft mud.

Now, Merriam-Webster defines "slop" as low-quality digital content produced by artificial intelligence (AI), and the publisher's human editors named it the word of the year in December 2025.

“When people say 'AI slop,' nine times out of 10 they are referring to clearly distorted images like when a person has six fingers instead of five,” says Sean Marshall, co-founder and COO of AI platform company Copley. “It could even be the color contrast of their skin.”

There are plenty of other meanings for "slop," and all revolve around the quality of data used to create AI content, images and videos.

Marketers are drowning in fragmented signals from large language models (LLMs). The models break down the data, process it and use the information to represent content in images and videos. While the data can help build realistic images, the "wrong" data can produce slop.

“It takes a lot of prompting and data to create a natural image of a person,” Marshall says.

Copley was designed to automate content creation for marketing. On Tuesday, the company launched a marketing agent that uses performance data to create ads in real time, based on conversations between a human and the agent.

It was developed to rid the content of AI-based slop by monitoring the quality of the data and information fed into the LLM.

Copley's AI agent builds ads, runs reports and analyzes performance to determine what works and what does not. It is accessible from either Copley’s platform or Slack, by typing or by voice.

The AI Creative Report provides marketers with analysis of the ad, including which distinctive features are effective and which are not.

Analysis is tied to performance data, because without it, Marshall says, results can lead to subjective decisions, delays in creative work and missed opportunities -- in other words, slop.

Kapwing, an online creation and editing platform, analyzed the top 100 YouTube channels in every country and found that 21% to 33% of YouTube's feed may consist of AI slop or "brainrot" videos. The findings in the study were released in November 2025.

It took Copley's engineers about two years to build the platform, according to David Henriquez, co-founder and CEO at Copley.

Henriquez described how agents use “skills,” reusable packages of instructions stored in a markdown file -- a structure recognized by Anthropic’s large language model, Claude.
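For readers who want a concrete picture of a skill, here is a minimal Python sketch. It is not Copley's or Anthropic's code. It assumes a simple file layout, a short header block of `key: value` pairs between two `---` lines followed by free-form markdown instructions, and the `Skill` class, the `parse_skill` function and the example skill text are all hypothetical names made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    # Hypothetical container for one reusable package of instructions.
    name: str
    description: str
    instructions: str


def parse_skill(markdown_text: str) -> Skill:
    """Split a skill file into header fields and an instruction body.

    Assumes (for this sketch only) a header block between two '---'
    lines holding 'key: value' pairs, followed by free-form markdown.
    """
    lines = markdown_text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("skill file must start with a '---' header block")

    # Find the line that closes the header block.
    end = None
    for i in range(1, len(lines)):
        if lines[i].strip() == "---":
            end = i
            break
    if end is None:
        raise ValueError("header block is never closed")

    # Read 'key: value' pairs from the header.
    fields = {}
    for line in lines[1:end]:
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip()] = value.strip()

    # Everything after the header is the instruction text itself.
    body = "\n".join(lines[end + 1:]).strip()
    return Skill(
        name=fields.get("name", ""),
        description=fields.get("description", ""),
        instructions=body,
    )


# Made-up skill text, not taken from any real product.
EXAMPLE_SKILL = """---
name: ad-variations
description: Draft several ad variations from one product brief.
---
# Ad variations

1. Read the product brief.
2. Write three headlines with different angles.
3. Explain why each angle was chosen.
"""

skill = parse_skill(EXAMPLE_SKILL)
print(skill.name)
print(skill.description)
```

The point of the layout is that the short header tells an agent when a skill is relevant, while the body holds the step-by-step instructions it follows once the skill is chosen.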

Reasoning models are now smart enough to combine skills and tools to create the output the content creator wants.

The LLMs are optimized for "good" and "bad" content, so they understand how to respond.

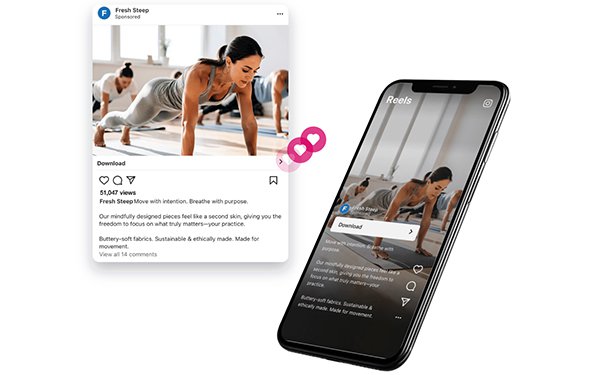

Using Meta Platforms as an example, the Copley agent integrates skills, tools, and data to generate content and publish it on Facebook via an API. The human collaborates with the agent in natural language, by voice or text, as the agent researches the accounts, creates ad variations, explains its choices, and launches ads.

“That’s the future,” Henriquez said. “We are focused now on advertising, but the plan is to go into other parts of marketing.”

The platform was built to analyze and handle creative production work, not to replace someone’s creative judgment.

It operates in Slack, where teams already work and collaborate. The creator can press the audio button in Slack and talk to the platform or type the requests.

The request can be as simple as “Hey Copley, I want to create some ads for a product that will launch next week.”

The agent will ask questions such as “Do you have new images, or should I search existing images or your Shopify product catalog? How do you want me to get started?”

It will also ask the creator whether he or she has ideas on how the ads should look, or the agent can suggest what it thinks will work best based on specific data or input.

As AI images and content gain momentum, synthetic slop seems to have more permanence and may have harmful effects on advertising and brand safety unless marketers set up human-controlled guardrails, which is what Copley is trying to do.

Copley seeks user approval before publishing ads or content. Creators must click an approve button, at least for now.
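The approval step can be pictured as a simple gate in code. The Python sketch below is an illustration only, not Copley's implementation: `AdDraft`, `approve`, `publish`, `ApprovalRequired` and `fake_send` are hypothetical names, and `fake_send` stands in for a real ad-platform API call so that nothing is actually published.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AdDraft:
    # Hypothetical ad produced by an agent, unapproved by default.
    headline: str
    body: str
    approved: bool = False


class ApprovalRequired(Exception):
    """Raised when something tries to publish an unapproved draft."""


def approve(draft: AdDraft) -> AdDraft:
    # Stands in for the human clicking an approve button.
    draft.approved = True
    return draft


def publish(draft: AdDraft, send: Callable[[AdDraft], str]) -> str:
    # Refuse to call the publishing function until a human has approved.
    if not draft.approved:
        raise ApprovalRequired("a human must approve this ad before it is published")
    return send(draft)


def fake_send(draft: AdDraft) -> str:
    # Placeholder for a real ad-platform API call; nothing leaves the process.
    return f"published: {draft.headline}"


draft = AdDraft("Launch week", "New product arrives next week.")

try:
    publish(draft, fake_send)
except ApprovalRequired as err:
    print(f"blocked: {err}")

print(publish(approve(draft), fake_send))
```

Keeping the check inside `publish`, rather than trusting each caller to remember it, means an agent cannot skip the human step by accident.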

In the future -- not within single-digit years, but long term -- this will change, according to Henriquez.

“Once people get more familiar with this process, they will have agents that go out and do things for them,” Henriquez said.