Google's Advanced Technology and

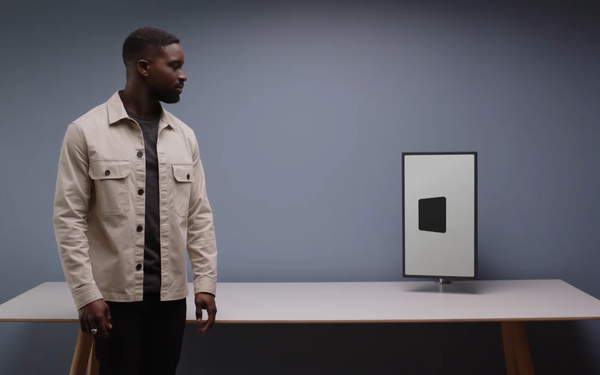

Products (ATAP) division has spent the past year exploring how computers to use radar and

advanced machine learning to understand the needs or the intentions of humans, and then react appropriately. The technology gives devices "the social intelligence" to interact with humans without

being too intrusive.

The technology does not rely on a camera to monitor what someone does. It uses radar.

The Soli sensor, which Google has used in other products, is now being used in this new research. Rather than use the sensor to control a computer, ATAP is using the sensor data to enable computers to recognize human movements.

The technology is controlled by the wave of a hand or a turn of the head. The inspiration came from how people interact with one another.

“We pick up on social cues -- subtle gestures that we innately understand and react to,” Leonardo Giusti, head of design at ATAP, said in a video. “What if computers understood us this way?”

It’s about understanding subtle body language. For example, a video will stop playing when the person watching it walks away from the screen and then resume when the person returns within viewing range.

Giusti told Wired, which initially reported on the technology, that much of the new research is based on proxemics, the study of how people use space around them to mediate social interactions. It turns out that people and devices have their own concept of personal space.

It’s not the first time Google has used radar, according to Wired. In 2015, Google unveiled Soli, a sensor that can use radar's electromagnetic waves to pick up precise gestures and movements.

Google used it in its Pixel 4, giving the phone the ability to detect simple hand gestures so the user could snooze alarms or pause music without physically touching the phone.

Radar sensors were also embedded inside the second-generation Nest Hub smart display to detect the movement and breathing patterns of someone sleeping next to it. The device could track the person’s sleep without a smartwatch.

Using radar comes with challenges. For example, while radar can detect multiple people in a room, if the subjects are too close together, the sensor just sees the group of people as a blob.