I can’t recall the first time I heard the term “synthetic media,” but I’m hearing – and thinking – a lot about it lately, because the proliferation of generative AI tools is, well, proliferating it.

How much? I doubt anyone knows for sure, but some new data suggests it is accelerating fast.

Using AI-generated news sites as a proxy, the volume appears to be doubling every couple of weeks, according to initial benchmark data published by NewsGuard, which began tracking such sites in May.

And they’re just getting started.

How much AI is synthesizing the rest of our media content is probably an even harder question to answer, but based on what I saw at the Cannes Lions last week, it clearly has taken hold of the ad industry.

In the best use cases, top ad execs gave many examples of how they have been using AI to help generate big ideas, while stressing that it still takes humans to identify which of the AI-generated concepts are actually meaningful.

In that context, AI truly is just a tool helping humans conceptualize a broader swath of possibilities. That was how Omnicom digital chief Jonathan Nelson first explained the holding company’s development of its OMNI AI platform, and it’s the framing that makes sense to me:

Using AI to help people think of and explore lots of new possibilities at hyper speed.

The bad use cases are the unintended consequences, like the instantaneous proliferation of bogus news sites that NewsGuard has begun tracking, as well as the spread of synthetic content throughout social media, search and, ultimately, the interpersonal content we all share with each other.

Deep fakes capable of passing modern-day Turing tests may seem like the most extreme examples, but I’m more concerned by the gradual, ongoing blurring of the line between man-made and synthesized information.

Don’t get me wrong: people have been creating bogus content for as long as they have been able to create content, but in the analog days it was easier to at least detect the source, if not the truth.

Digital accelerates the ability to alter and synthesize information in ways that concerned me long before the arrival of generative AI technologies.

When I asked a top search exec in 2011 how much of the world’s information had been indexed online, he estimated it was just 5%. I don’t know what it is today, but the real point isn’t how much information has been indexed, but what percentage is accessible via digital media, because that is now the predominant way people access information.

Last week, Elon Musk tweeted a meme that illustrates this point in an even more profound way, noting that “only 1% of ancient literature has survived.”

Clearly, history has always been written by the victors, but what happens when the victors are machines synthesizing information that distorts reality in ways that are no longer human?

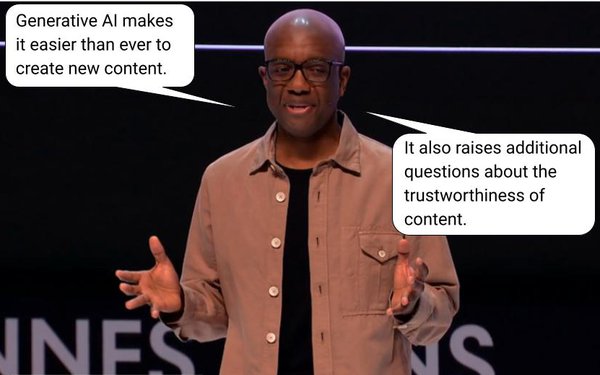

“Generative AI makes it easier than ever to create new content,” Google Senior Vice President of Research, Technology & Society James Manyika said during a keynote presentation on good and not-so-good uses of generative AI at the Cannes Lions last week.

“It also raises additional questions about the trustworthiness of content,” he added, showing images of social-media content such as articles claiming the 1969 moon landing was staged.

“That’s why we’re rolling out tools like ‘About this image’ in our search, which provides important information such as when and where similar images may have first appeared,” Manyika said, adding that it also shows “where else the image has been seen online, including news, fact-checking and social sites.”

Manyika said Google also has pledged to take other steps, including building digital watermarks and indelible metadata identifying sources directly into synthetic content, to enable users to determine the origin and truthfulness of what they are seeing.

“In the end, we believe building AI responsibly must be a collective effort involving researchers, social scientists, industry experts, governments and everyday people, as well as creators and publishers,” Manyika concluded.

I’d like to add advertising execs to that list, because one way or another, their ad budgets are either underwriting or directly sponsoring much of the synthetic content, even if they aren’t fully aware of it.

According to an in-depth analysis of programmatic advertising released last week by the Association of National Advertisers, blue-chip advertisers currently spend $13 billion annually advertising on bogus “made-for-advertising” websites.

A new report released by NewsGuard this morning identifies 141 brands that are feeding programmatic ad dollars to low-quality AI-generated news and information sites operating with little to no human oversight (see related story here).