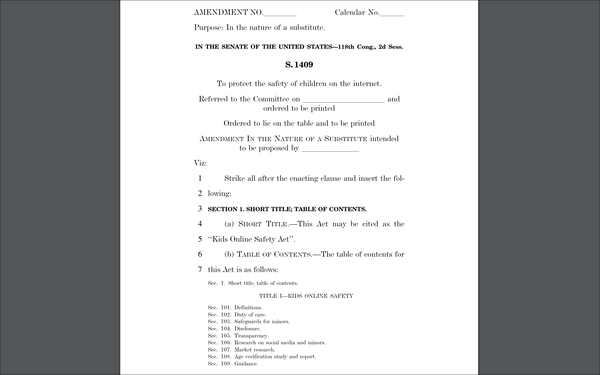

A House committee on Wednesday advanced the controversial Kids Online Safety Act, which would regulate how social media platforms display material to minors under 17.

The proposed law, which the Energy and Commerce Committee passed by a voice vote, aims to protect young people from harms that some observers have associated with social media use.

The current House version of the bill, which was revised shortly before the vote, would require large platforms to implement design features (such as notifications, automatic scrolling and personalized recommendations) with an eye to preventing a list of specific harms.

That list includes physical violence and harassment, sexual exploitation and abuse, and the “promotion of inherently dangerous acts that are likely to cause serious bodily harm, serious emotional disturbance, or death.”

That revised version would appear to impose a narrower obligation on technology companies than the Senate bill, which was passed 91-3 earlier this year.

The Senate's iteration of the law would require platforms to use reasonable care to avoid causing a broader range of harms -- including anxiety, depression, eating disorders, substance use disorders, and suicidal behaviors -- via design features.

The bill's ultimate fate remains uncertain.

Ranking member Rep. Frank Pallone (D-New Jersey) said Wednesday he couldn't support the bill in its current form.

“I obviously firmly support protecting kids online, but I have serious concerns that this bill will not have its intended effects, and may have unintended consequences,” he said.

Pallone also said he was concerned that the last-minute amendment prevented the public from having a chance to weigh in on the proposed law.

Pallone wasn't the only lawmaker to express concerns about the bill. Several House members criticized the new amendment Wednesday, arguing that it weakened the measure. Others who objected to the bill said its language was ambiguous and likely to face court challenges.

The committee also advanced by voice vote the Children and Teens’ Online Privacy Protection Act, which would prohibit websites and apps from collecting personal information -- including data stored on cookies, device identifiers and other pseudonymous information used for ad targeting -- from teens between the ages of 13 and 15, without first obtaining their explicit consent.

That bill would also restrict some forms of marketing to children and teens, but would allow companies to serve contextually targeted ads to young people.

The youth advocacy group Fairplay -- which supports both bills -- cheered Wednesday's vote as a “step forward,” but added that the Kids Online Safety Act has not yet been finalized.

“We will continue to work with House and Senate leaders to make sure the bill provides the online protections that all young people deserve,” Fairplay Executive Director Josh Golin stated.

The ad industry self-regulatory group Network Advertising Initiative separately praised the committee for advancing the teens' privacy bill, stating that members of the organization don't want to harness minors' data for targeted advertising.

“The legislation would also preserve the ability for child-directed sites and services to utilize contextual advertising, which is essential for monetizing free and low cost content for children,” Leigh Freund, the group's president and CEO, stated.

The tech industry organization NetChoice had urged the committee to reject both bills, stating they are “false promises to American families.”

“Parents need laws that are meaningful and constitutional, but [Kids Online Safety Act] is neither,” Amy Bos, director of state and federal affairs at NetChoice, stated.

Judges have invalidated state laws that aimed to regulate how social media platforms display content to minors.

For instance, last month a federal appellate court blocked key portions of a California law that would have required online companies whose services are likely to be accessed by users under 18 to evaluate whether the design of those services could expose minors to “potentially harmful” content, and to mitigate that potential harm.

A three-judge panel of the 9th Circuit Court of Appeals ruled in that case that those provisions likely violated the First Amendment's prohibition on censorship.