Aquila, the Association of National Advertisers' (ANA) cross-media measurement subsidiary, has struck a deal with audience-measurement firm Samba TV to provide streaming viewership data as part of Aquila's process of deduplicating audience reach across TV, CTV and online streaming platforms.

Samba, which derives its first-party viewing data for ACR (automatic content recognition) systems, follows deals that Aquila

previously struck with Comscore to provide linear TV viewing data, and with Kantar Media to manage the calibration panel that is at the heart of the reach

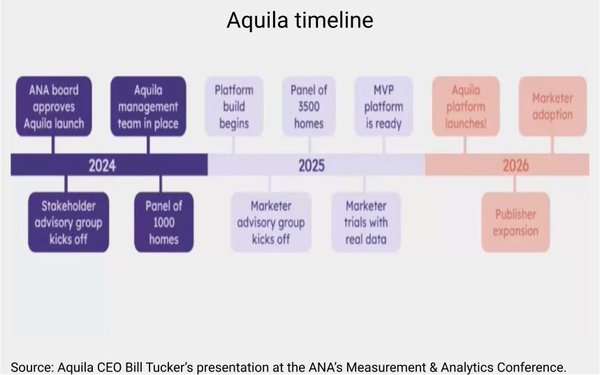

deduplication process. That panel already is installed in more than 3,500 U.S. households, with a goal of getting to 5,000 by the first quarter of 2026.


During the ANA's measurement and analytics conference in September, Aquila CEO Bill Tucker noted that nearly 30 ANA member advertisers have already committed matching funding and have begun trial testing of the data, and that the service is on schedule to launch in early 2026.

Aquila estimates the audience reach deduplication component will improve the efficiency of big advertisers' media buys by 10% and will yield $50 million in improved productivity over the first three years.

Aquila and Samba described the streaming data integration as a "multi-phase approach designed for precision and scalability," and said the first phase, focusing on "data ingestion and integration," begins this quarter.

"The full solution will provide live, campaign-level measurement capabilities and is expected to be released in the second half of 2026," they added.