Google is integrating AI and Gemini into core mobile experiences that are customized for people who are vision- and hearing-impaired. The announcement was made Thursday, one day prior to Global Accessibility Awareness Day (GAAD).

Gemini capabilities came to TalkBack, Android's screen reader, last year to provide image descriptions for people who are blind or have low vision. Today the company expanded the Gemini integration, allowing people to ask questions and get responses about the images.

The next time a friend texts a photo of their new guitar, the user can get a description and ask follow-up questions about the make and color, or even what else is in the image.

The feature should come in handy even for advertisers and marketers, because consumers will be able to ask questions about images and hear more about what is shown on the screen when they cannot see it.

In a blog post, Google explained that people can get descriptions and ask questions about what is on their entire screen. If someone is looking for the latest sales in a shopping app, they can ask Gemini about the material of an item or whether a discount is available.

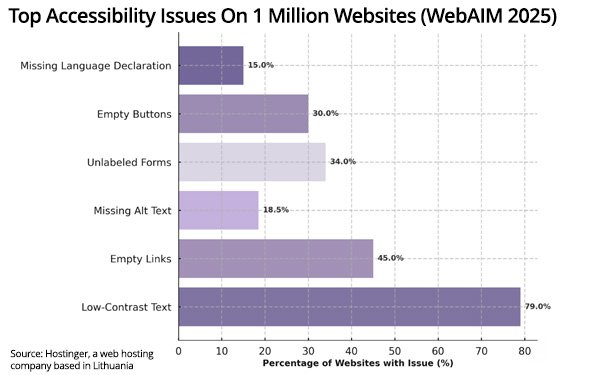

Between $8 trillion and $13 trillion in disposable income is controlled by people with disabilities and their families, according to Hostinger, a web hosting company. The company also shared data estimating that 94.8% of websites have at least one accessibility issue, with an average of 51 errors per home page.

These errors include common but critical problems such as low-contrast text, missing alt descriptions, and unlabeled buttons -- barriers that affect 1.3 billion people globally living with disabilities.

Six common issues make up 96% of all reported problems. Seventy-nine percent of pages have low-contrast text -- the most common issue -- and 45% of pages have empty links that confuse screen readers.

To make sites easier for consumers to see and use, Hostinger suggested companies use high-contrast text that stands out clearly, add alt text to all images so screen readers can describe them, label all forms properly so assistive technologies can identify them, and make sure buttons have clear labels -- not just icons or color.

The company also suggests that links have descriptive text so users know where they lead, and that pages declare their primary language to help screen readers.
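For teams that want to check the high-contrast recommendation programmatically, the following is a minimal sketch, not something Hostinger or Google published. It assumes the contrast-ratio formula and the 4.5:1 (normal text) and 3:1 (large text) AA thresholds from the W3C's Web Content Accessibility Guidelines, which the article does not cite. The function names are hypothetical.

```python
# Sketch of a WCAG-style contrast check. The formula and thresholds are
# assumptions taken from WCAG 2.x, not from the article's sources.

def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to a linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of the linearized red, green and blue channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """Ratio between the lighter and darker color, from 1.0 up to 21.0."""
    lum_a, lum_b = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: tuple[int, int, int], bg: tuple[int, int, int],
              large_text: bool = False) -> bool:
    """True if the pair meets the assumed AA threshold for the text size."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    white = (255, 255, 255)
    # Black on white is the maximum possible contrast, 21:1.
    print(round(contrast_ratio((0, 0, 0), white), 2))
    # A mid-gray on white sits right around the AA boundary for normal text.
    print(passes_aa((119, 119, 119), white))
```

A check like this only covers text contrast. The other suggestions, such as alt text, form labels and descriptive links, need separate markup review.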

Google also introduced Expressive Captions on Android phones for anything with sound across most apps. It created this feature using AI to capture not only what someone says, but how they say it, so the person can pick up on the inflection behind the words.

The feature allows users to tell the difference between when someone says “no” or “nooooo.”

The user also receives even more labels for sounds, so they know when someone is whistling or clearing their throat.

This new version is rolling out in English in the U.S., the U.K., Canada and Australia for devices running Android 15 and above.

About 2 billion people use Chrome daily. Google said it's trying to make its browser easier to use and more accessible with features like Live Caption and image descriptions for screen reader users.

Optical Character Recognition (OCR) lets Chrome automatically recognize scanned PDFs, so users can highlight, copy and search for text as on any other page, and use a screen reader to read them.

Page Zoom, another feature, now lets users increase the size of the text they see in Chrome on Android without affecting the webpage layout or browsing experience, similar to how it works in Chrome on desktop.

Users can customize how much they want to zoom in and apply the preference to all pages visited or just specific ones.