Most people don't know when they are looking at AI-generated content, although Google, Microsoft and many others are working to resolve that challenge when it comes to images.

For years, people instinctively trusted that what they saw was real, including online. But movies built on special effects showed the world the difference.

For example, movies explored what-if Mars landings in "Capricorn One" or moonwalks in "Fly Me to the Moon."

The movies asked: What if these events were faked? Could cinematography really convince people that real-life events did not happen?

While movies explored the notion that what is fake often looks real, and what is real often looks fake, artificial intelligence (AI) is taking the advertising and marketing industries into the unknown.

Neil Patel, founder of NP Digital, specifically called out social media in a post on LinkedIn, but the problem will become more of a reality across the internet.

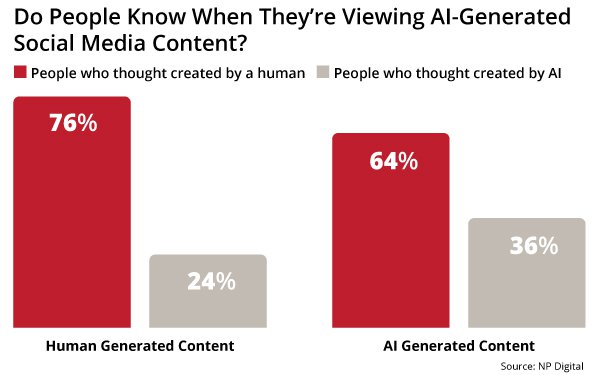

Patel and NP Digital researchers showed 100 people a mix of AI-generated and human-generated content. Participants were asked to determine whether each piece was created by a human or by AI.

For human-generated content, 76% thought humans created the content, and 24% thought AI created the content.

For AI-generated content, 64% thought it was created by humans, and the remaining 36% thought it was created by AI.
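To put those percentages on a common footing, here is a minimal sketch in Python that converts them into per-category accuracy and a blended figure. The function name `detection_accuracy` and the `ai_share` parameter are hypothetical, and the 50/50 mix of human and AI content is an assumption. The study summary does not say how the content was split.

```python
def detection_accuracy(pct_human_called_human, pct_ai_called_human, ai_share=0.5):
    """Return (accuracy on human content, accuracy on AI content, overall).

    ai_share is the assumed fraction of shown content that was AI-generated.
    The 0.5 default is an assumption, not a figure from the study.
    """
    # Calling human content "human" is a correct answer.
    acc_human = pct_human_called_human / 100
    # Calling AI content "human" is a wrong answer, so accuracy is the remainder.
    acc_ai = 1 - pct_ai_called_human / 100
    # Weighted average of the two categories.
    overall = (1 - ai_share) * acc_human + ai_share * acc_ai
    return acc_human, acc_ai, overall


if __name__ == "__main__":
    acc_human, acc_ai, overall = detection_accuracy(76, 64)
    print(f"human content: {acc_human:.0%}, AI content: {acc_ai:.0%}, overall: {overall:.0%}")
```

Under that assumption, participants were right about human content far more often than about AI content, and the blended figure lands only modestly above a coin flip.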

There is no turning back. Global AI funding reached a record $225.8 billion in 2025, propelled by massive deals, according to CB Insights.

Funding deals with rounds worth $100 million or more accounted for 79% of total funding, reflecting the high costs of AI development.

Large language model (LLM) developers alone captured $93.1 billion, or 41% of total funding, with OpenAI, Anthropic, and xAI raising a combined $86.3 billion in 2025.
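The shares quoted above can be checked with simple arithmetic. The sketch below uses only the dollar figures reported in this article. The helper name `share_pct` is hypothetical, and treating the $86.3 billion raised by the three companies as part of the $93.1 billion LLM total is an assumption based on how the figures are presented.

```python
def share_pct(part_billion, whole_billion):
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(part_billion / whole_billion * 100, 1)


TOTAL_AI_FUNDING = 225.8   # $ billions, 2025 total as reported
LLM_FUNDING = 93.1         # $ billions, LLM developers
BIG_THREE_FUNDING = 86.3   # $ billions, OpenAI + Anthropic + xAI combined

if __name__ == "__main__":
    # LLM developers as a share of all AI funding (reported as 41%).
    print(share_pct(LLM_FUNDING, TOTAL_AI_FUNDING))
    # The three named companies as a share of LLM funding (assumes they are a subset).
    print(share_pct(BIG_THREE_FUNDING, LLM_FUNDING))
    # The three named companies as a share of all AI funding.
    print(share_pct(BIG_THREE_FUNDING, TOTAL_AI_FUNDING))
```

If the subset assumption holds, three companies account for the large majority of LLM funding and more than a third of all AI funding for the year.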

Microsoft ran a study similar to NP Digital's last year, but on a larger scale. More than 287,000 guesses about images revealed just how easily AI visuals fooled humans.

The "Real or Not Quiz" examined how effectively people could distinguish AI-generated images from real ones.

Participants viewed a random set of real and AI-generated images. Analysis of about 287,000 image evaluations by more than 12,500 global participants revealed a success rate of only 62%, and that was using last year’s technology.

At the time, the study also found that AI detection tools were more reliable than humans at identifying AI images, but automation can make mistakes.

Google last year introduced SynthID to identify AI-generated images, audio, and videos made with Google's AI models such as Gemini, Imagen, and Veo by scanning for watermarks.

SynthID has limited reliability with other platforms and does not prevent content misuse. It only makes detection possible after the fact.

Technology companies and the advertising industry will need something more.

"It won’t be long before we no longer believe anything we see online," Able Maung, founder at Zoomnets, which optimizes landing pages, wrote on LinkedIn.

Not being able to tell whether an image was created by AI is not the only challenge that marketers and brands have.

Advertisers may see AI's core conflict as consumers' inability to distinguish AI from human content. But as a result, many shifts in the marketing industry will unfold in the next few months.

For AI Search platforms to be trusted, they must use what Glenn Gabe, president of G-Squared Interactive, calls "grounding" to ensure answers are factual, believable, and current.

A large language model cannot rely solely on text predicted from its training data. Neither can brands and marketers.

"Grounding via Search can have a huge impact for AI Search platforms," Gabe wrote in a LinkedIn post. He provided an example of ChatGPT giving a mostly incorrect answer when it did not use web search to ground the response, something that Google has been doing.

"Then when I selected web search, the answer was correct," Gabe wrote. The question was related to favicons. "Again, why grounding via Search is super important for AI Search platforms."

With the bad comes the good. Anthropic released "Claude Cowork" last week. It is designed for non-developers to interact with Claude in a regular conversation for coding.

Now the audience has grown. Cowork takes prerequisites, makes a plan, and then executes on it, for example by providing information on high-intent advertising leads.

Cowork can access local files on a marketer's computer, such as a paid ad plan or newsletter strategy. There is also a Chrome extension that allows it to browse websites on the user's behalf to provide feedback on the site's copy.

Claude's web audience more than doubled in December from the previous year. Its daily unique visitors on desktop are up 12% globally year-to-date compared with last month, according to The Wall Street Journal, which cited data from market intelligence companies Similarweb and Sensor Tower, respectively.

Malte Ubl, chief technology officer at Vercel, which helps develop and host websites and apps for users of Claude Code and other such tools, told the WSJ he used the tool to finish a complex project in a week that would have taken him about a year without AI.

Ubl spent 10 hours a day during his vacation building new software. He told the WSJ that each run gave him an endorphin rush akin to playing a Vegas slot machine.