Google announced upgrades to its Gemini 2.5 Pro AI model at its annual I/O conference on Tuesday, centered on thinking, reasoning and budgeting.

The features set the tone for the company’s strategic direction this year, providing updates that will make its suite of consumer-facing products smarter as it builds out more advanced, subscription-based features to sell to businesses and creators.

Deep Think allows the model to consider multiple answers to a question before responding, which improves its performance on specific benchmarks.

Thinking Budget, a Google DeepMind feature introduced with Gemini 2.5 Flash and now available for Gemini 2.5 Pro, allows developers to control the amount of computational resources, and therefore cost, that the model uses for reasoning before generating a response.

Developers can tell the AI how deeply it should "think" about a problem before answering. A higher budget allows for more complex reasoning and potentially higher-quality output, but also increases the cost.
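
To make the trade-off concrete, here is a minimal Python sketch of how a developer might estimate the worst-case cost of a request under different thinking budgets. The per-token prices and the function name `estimate_max_cost` are hypothetical placeholders, not Google's published rates or API, and the sketch assumes reasoning tokens are billed and that the model uses its full budget.

```python
# Hypothetical prices in USD per million tokens. These are illustrative
# placeholders, NOT Google's published rates.
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60
THINKING_PRICE_PER_M = 3.50


def estimate_max_cost(input_tokens: int, output_tokens: int, thinking_budget: int) -> float:
    """Return the worst-case cost in USD for one request.

    Assumes the model spends its entire thinking budget, so the result
    is an upper bound rather than a prediction.
    """
    if min(input_tokens, output_tokens, thinking_budget) < 0:
        raise ValueError("token counts and budget must be non-negative")
    total = (
        input_tokens * INPUT_PRICE_PER_M
        + output_tokens * OUTPUT_PRICE_PER_M
        + thinking_budget * THINKING_PRICE_PER_M
    )
    return total / 1_000_000


# Compare the cost ceiling for a few budgets on the same prompt size.
for budget in (0, 1024, 8192):
    cost = estimate_max_cost(input_tokens=1000, output_tokens=500, thinking_budget=budget)
    print(f"thinking budget {budget:>5}: at most ${cost:.5f} per request")
```

Under these assumptions the cost ceiling grows linearly with the budget, which is why a developer might reserve large budgets for hard problems and set the budget low, or to zero, for simple ones.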

The same models that power AI Mode will now power AI Overviews in Search, which uses custom AI technology to pull together responses for queries that might need more help.

Soon AI Mode will personalize suggestions based on past searches. Google calls this personal context. Because flight and hotel confirmations sit in a user's Gmail inbox, AI Mode will be able to draw on them to give travelers more relevant information.

Deep Search in AI Mode will comb through thousands of pieces of information, similar to ChatGPT's Deep Research. It will scan multiple sites simultaneously and conduct automated follow-up searches in response to its findings to produce a heavily cited report in minutes.

AI Mode also will become agentic. Users will be able to assign tasks for the platform to complete on its own by navigating the web and taking actions autonomously. For example, users can book haircuts, make restaurant reservations, and more.

The feature will also be able to produce data visualizations, analyzing user data and turning insights into graphics for specific user queries, beginning with finance and sports.

Shopping capabilities also come to AI Mode using the company's Shopping Graph, which includes more than 50 billion product listings globally.

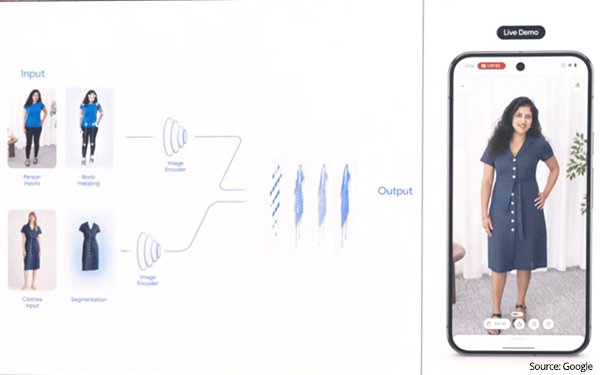

Google has created a tool that allows consumers to find clothing and try it on virtually. Rather than seeing the piece of clothing on a model, the test feature lets consumers upload a photo of themselves to see how it looks on them.

The shopping feature is rolling out in Search Labs in the U.S. today through the “try it on” button.

“We are in a new phase for AI,” Google CEO Sundar Pichai said during his opening remarks in a presentation that highlighted features such as Google Beam, an AI video model that transforms 2D video streams into a 3D experience.

The Beam feature uses multiple cameras to capture different angles and render 2D video streams into 3D visuals that aim to provide a more natural, immersive conversational experience. It was shown along with an instant conversational translation feature for video calls on Google Meet.

The demonstration used the example of a person speaking with a vacation rental owner in South America, showing real-time translation between English and Spanish. More languages will roll out in the coming weeks.